Deploying ELK on a k8s cluster


1 Prepare the environment

Deploy a k8s cluster with kubeadm or any other method.

Create a namespace named halashow in the cluster.
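As a declarative alternative to the `kubectl create ns` command used in this step, the namespace can also be written as a manifest and applied with `kubectl apply -f`. A minimal sketch (the file name you save it under is up to you):

```yaml
# Declarative equivalent of: kubectl create ns halashow
apiVersion: v1
kind: Namespace
metadata:
  name: halashow
```

Keeping the namespace in a file lets it be versioned together with the other manifests in this article.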

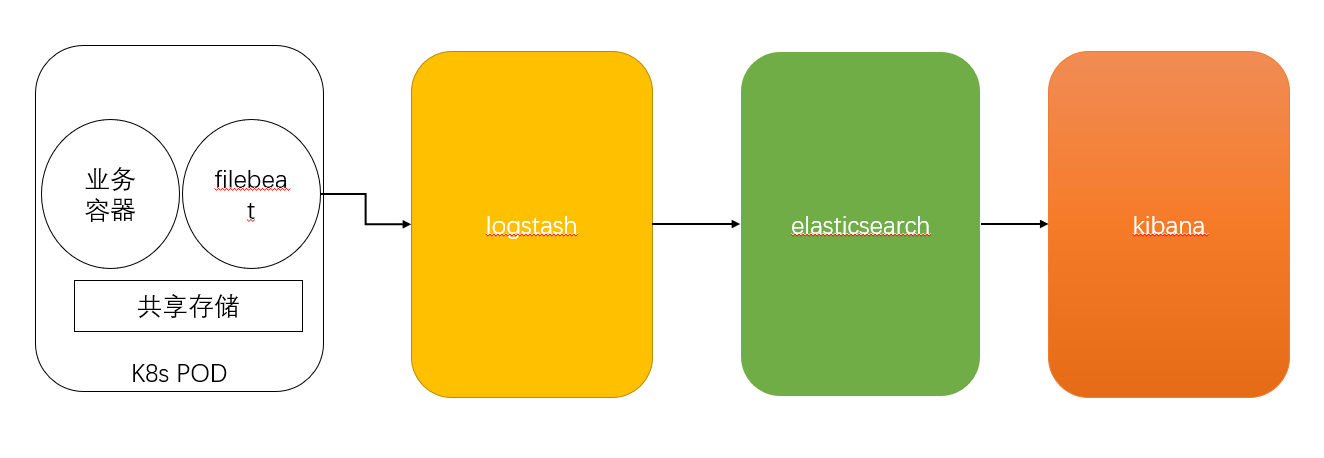

```bash
kubectl create ns halashow
```

2 ELK deployment architecture

3 Deploy Elasticsearch

This is a single-node deployment; I'm still looking into a high-availability setup...

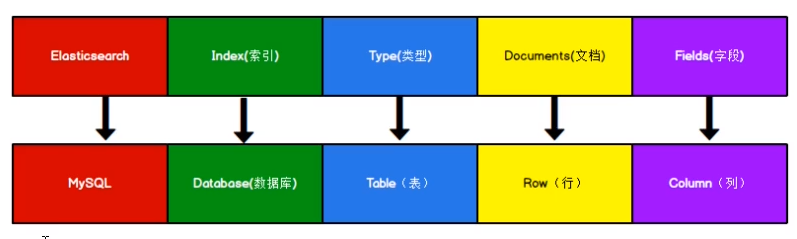

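Correspondence between Elasticsearch and MySQL concepts.

Elasticsearch is built on an inverted index. Mapping types are deprecated in 7.x (each index has a single type, `_doc`) and were removed completely in 8.0.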

3.1 Prepare the resource manifests

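The Deployment contains one Elasticsearch application container and one init container; the init container's main job is to set vm.max_map_count=262144 on the node.

The Service exposes port 9200, so other services can reach Elasticsearch through the service name plus port (elasticsearch:9200).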
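To illustrate access by service name, a hypothetical one-off Job can call Elasticsearch from inside the namespace. The Job name `es-check` and the `curlimages/curl` image are assumptions, not part of the original setup; any image that ships `curl` would do.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: es-check              # hypothetical name
  namespace: halashow
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never    # Jobs only allow Never or OnFailure
      containers:
      - name: curl
        image: curlimages/curl:latest   # assumption: pin a specific tag in practice
        # "elasticsearch" resolves to the Service's ClusterIP through cluster DNS.
        # From another namespace, use elasticsearch.halashow.svc.cluster.local
        # (assuming the default cluster domain).
        command: ["curl", "-sS", "http://elasticsearch:9200"]
```

After applying it, `kubectl logs job/es-check -n halashow` should print the same cluster-info JSON as the curl check in 3.2. If it cannot connect, the Service selector is the first thing to compare against the pod labels.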

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  generation: 1
  labels:
    app: elasticsearch-logging
    version: v1
  name: elasticsearch
  namespace: halashow
spec:
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: elasticsearch-logging
      version: v1
  strategy:
    type: Recreate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: elasticsearch-logging
        version: v1
    spec:
      affinity:
        nodeAffinity: {}
      containers:
      - env:
        - name: discovery.type
          value: single-node
        - name: ES_JAVA_OPTS
          value: -Xms512m -Xmx512m
        - name: MINIMUM_MASTER_NODES
          value: "1"
        image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0-amd64
        imagePullPolicy: IfNotPresent
        name: elasticsearch-logging
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        resources:
          limits:
            cpu: "1"
            memory: 1Gi
          requests:
            cpu: "1"
            memory: 1Gi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        # Note: the official image stores index data under /usr/share/elasticsearch/data,
        # so mounting the hostPath at /data does not by itself persist that data.
        - mountPath: /data
          name: es-persistent-storage
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: user-1-registrysecret
      initContainers:
      # Runs privileged once before Elasticsearch starts, to raise the node's mmap limit
      - command:
        - /sbin/sysctl
        - -w
        - vm.max_map_count=262144
        image: alpine:3.6
        imagePullPolicy: IfNotPresent
        name: elasticsearch-logging-init
        resources: {}
        securityContext:
          privileged: true
          procMount: Default
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - hostPath:
          path: /opt/paas/hanju/es_data
          type: ""
        name: es-persistent-storage
---
apiVersion: v1
kind: Service
metadata:
  namespace: halashow
  name: elasticsearch
  labels:
    app: elasticsearch-logging
spec:
  type: ClusterIP
  ports:
  - port: 9200
    name: elasticsearch
  selector:
    # must match the pod label above, otherwise the Service has no endpoints
    app: elasticsearch-logging
```

3.2 Deliver to the k8s cluster

Run the following command to create Elasticsearch:

```bash
kubectl apply -f elasticsearch.yaml
```

Check whether the container is running:

```bash
kubectl get pod -n halashow | grep ela
```

Run the following command to check the Elasticsearch status:

```bash
curl 172.31.141.130:9200
```

```json
{
  "name" : "elasticsearch-6755f64866-f9jr2",
  "cluster_name" : "docker-cluster",
  "cluster_uuid" : "AnWOO_hJTyqEjS3pLG0E9A",
  "version" : {
    "number" : "7.12.0",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "78722783c38caa25a70982b5b042074cde5d3b3a",
    "build_date" : "2021-03-18T06:17:15.410153305Z",
    "build_snapshot" : false,
    "lucene_version" : "8.8.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
```

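4 Deploy Logstash

4.1 Prepare the resource manifests

A ConfigMap defines the Logstash configuration, which mainly consists of the following sections:

input: defines the sources that feed data into Logstash.

filter: defines filtering conditions.

output: defines where events are sent, e.g. Elasticsearch, Redis, Kafka, and so on.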
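As a hypothetical extension of the filter section (not part of the original setup), the `date` filter could set each event's `@timestamp` from the `log_time` field, which the nginx `log_format` in section 5 fills with `$time_iso8601`. A sketch of the ConfigMap with that change:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: halashow
data:
  logstash.conf: |-
    input {
      # Filebeat ships events to this port
      beats {
        port => 5044
      }
    }
    filter {
      # parse the JSON line written by nginx into top-level fields
      json {
        source => "message"
      }
      # log_time holds nginx's $time_iso8601; by default the parsed
      # value is written to the event's @timestamp
      date {
        match => ["log_time", "ISO8601"]
      }
    }
    output {
      elasticsearch {
        hosts => ["elasticsearch:9200"]
      }
    }
```

Using the time nginx served the request, rather than the time Logstash received the event, keeps Kibana's time axis aligned with the actual traffic.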

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: halashow
data:
  logstash.conf: |-
    input {
      beats {
        port => 5044
      }
    }
    filter {
      json {
        source => "message"
      }
    }
    output {
      elasticsearch {
        hosts => ["elasticsearch:9200"]
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: halashow
  labels:
    name: logstash
spec:
  replicas: 1
  selector:
    matchLabels:
      name: logstash
  template:
    metadata:
      labels:
        app: logstash
        name: logstash
    spec:
      containers:
      - name: logstash
        image: docker.elastic.co/logstash/logstash:7.12.0
        ports:
        - containerPort: 5044
          protocol: TCP
        - containerPort: 9600
          protocol: TCP
        volumeMounts:
        - name: logstash-config
          #mountPath: /usr/share/logstash/logstash-simple.conf
          #mountPath: /usr/share/logstash/config/logstash-sample.conf
          mountPath: /usr/share/logstash/pipeline/logstash.conf
          subPath: logstash.conf
        #ports:
        # - containerPort: 80
        #   protocol: TCP
      volumes:
      - name: logstash-config
        configMap:
          #defaultMode: 0644
          name: logstash-config
---
apiVersion: v1
kind: Service
metadata:
  namespace: halashow
  name: logstash
  labels:
    app: logstash
spec:
  type: ClusterIP
  ports:
  - port: 5044
    name: logstash
  selector:
    app: logstash
```

4.2 Deliver to the k8s cluster

```bash
[root@k8s-master logstash]# kubectl apply -f logstash.yaml
[root@k8s-master logstash]# kubectl get pod -n halashow | grep logst
logstash-65bb74d7c5-5n5j9 1/1 Running 0 16h
```

5 Deploy nginx and filebeat

5.1 Prepare the resource manifests

nginx must be configured to write its access log in JSON format.
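One hardening worth considering (an addition, not part of the original setup): since nginx 1.11.8, `log_format` accepts `escape=json`. With the default escaping, nginx writes a double quote inside a variable such as `$request` or `$http_user_agent` as `\x22`, which a JSON parser rejects; `escape=json` emits valid JSON escapes instead. Only the changed part of the nginx-conf ConfigMap is shown; the other directives of nginx.conf stay as in the manifest that follows.

```yaml
# Fragment of the nginx-conf ConfigMap: only the log_format line changes
data:
  nginx.conf: |-
    http {
      # escape=json requires nginx >= 1.11.8
      log_format log_json escape=json '{"@timestamp": "$time_local","user_ip":"$http_x_real_ip","lan_ip":"$remote_addr","log_time":"$time_iso8601","user_req":"$request","http_code":"$status","body_bytes_sents":"$body_bytes_sent","req_time":"$request_time","user_ua":"$http_user_agent"}';
      access_log /var/log/nginx/access.log log_json;
    }
```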

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config-to-logstash
  namespace: halashow
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      paths:
        - /logm/*.log
    output.logstash:
      hosts: ['logstash:5044']
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf
  namespace: halashow
data:
  nginx.conf: |-
    user nginx;
    worker_processes 1;
    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;
    events {
      worker_connections 1024;
    }
    http {
      include /etc/nginx/mime.types;
      default_type application/octet-stream;
      log_format log_json '{"@timestamp": "$time_local","user_ip":"$http_x_real_ip","lan_ip":"$remote_addr","log_time":"$time_iso8601","user_req":"$request","http_code":"$status","body_bytes_sents":"$body_bytes_sent","req_time":"$request_time","user_ua":"$http_user_agent"}';
      access_log /var/log/nginx/access.log log_json;
      sendfile on;
      keepalive_timeout 65;
      include /etc/nginx/conf.d/*.conf;
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: halashow
  labels:
    name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        app: nginx
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        # shared emptyDir: nginx writes its logs here, the filebeat sidecar reads them from /logm
        - name: logm
          mountPath: /var/log/nginx/
        - name: nginx-conf
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.12.0
        args: ["-c", "/etc/filebeat.yml", "-e"]
        volumeMounts:
        - mountPath: /logm
          name: logm
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
      volumes:
      - name: logm
        emptyDir: {}
      - name: config
        configMap:
          defaultMode: 0640
          name: filebeat-config-to-logstash
      - name: nginx-conf
        configMap:
          defaultMode: 0640
          name: nginx-conf
```

5.2 Deliver to the k8s cluster

```bash
[root@k8s-master filebeat]# kubectl apply -f nginx_With_filebeat_to_logstash.yaml
[root@k8s-master filebeat]# kubectl get pod -n halashow | grep logst
logstash-65bb74d7c5-5n5j9 1/1 Running 0 16h
```

6 Deploy Kibana

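6.1 Prepare the resource manifests

Kibana is exposed through an Ingress. A NodePort Service would also work, but this cluster has an ingress controller installed, so Ingress is used here.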
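For clusters without an ingress controller, the NodePort option mentioned above might look like the following sketch. The Service name `kibana-nodeport` and the port `30601` are illustrative assumptions; the nodePort must fall within the cluster's NodePort range (30000-32767 by default).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana-nodeport      # hypothetical name
  namespace: halashow
spec:
  type: NodePort
  selector:
    app: kibana              # matches the label on the Kibana pods
  ports:
  - protocol: TCP
    port: 80                 # in-cluster Service port
    targetPort: 5601         # Kibana's container port
    nodePort: 30601          # assumption: any free port in the NodePort range
```

Kibana would then be reachable at `http://<node-ip>:30601` on any node, with no DNS entry needed.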

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: halashow
  labels:
    name: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kibana
  template:
    metadata:
      labels:
        app: kibana
        name: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.12.0
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        # Kibana 7.x reads elasticsearch.hosts (env ELASTICSEARCH_HOSTS);
        # ELASTICSEARCH_URL is the 6.x setting and is not used by 7.x
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: halashow
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5601
  selector:
    app: kibana
---
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22;
# newer clusters need apiVersion networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana
  namespace: halashow
spec:
  rules:
  - host: kibana.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 80
```

6.2 Deliver to the k8s cluster

```bash
[root@k8s-master kibana]# kubectl apply -f kibana.yaml
[root@k8s-master kibana]# kubectl get pod -n halashow | grep kibana
kibana-85954595c4-rc5sj 1/1 Running 0 16h
```

7 Verify

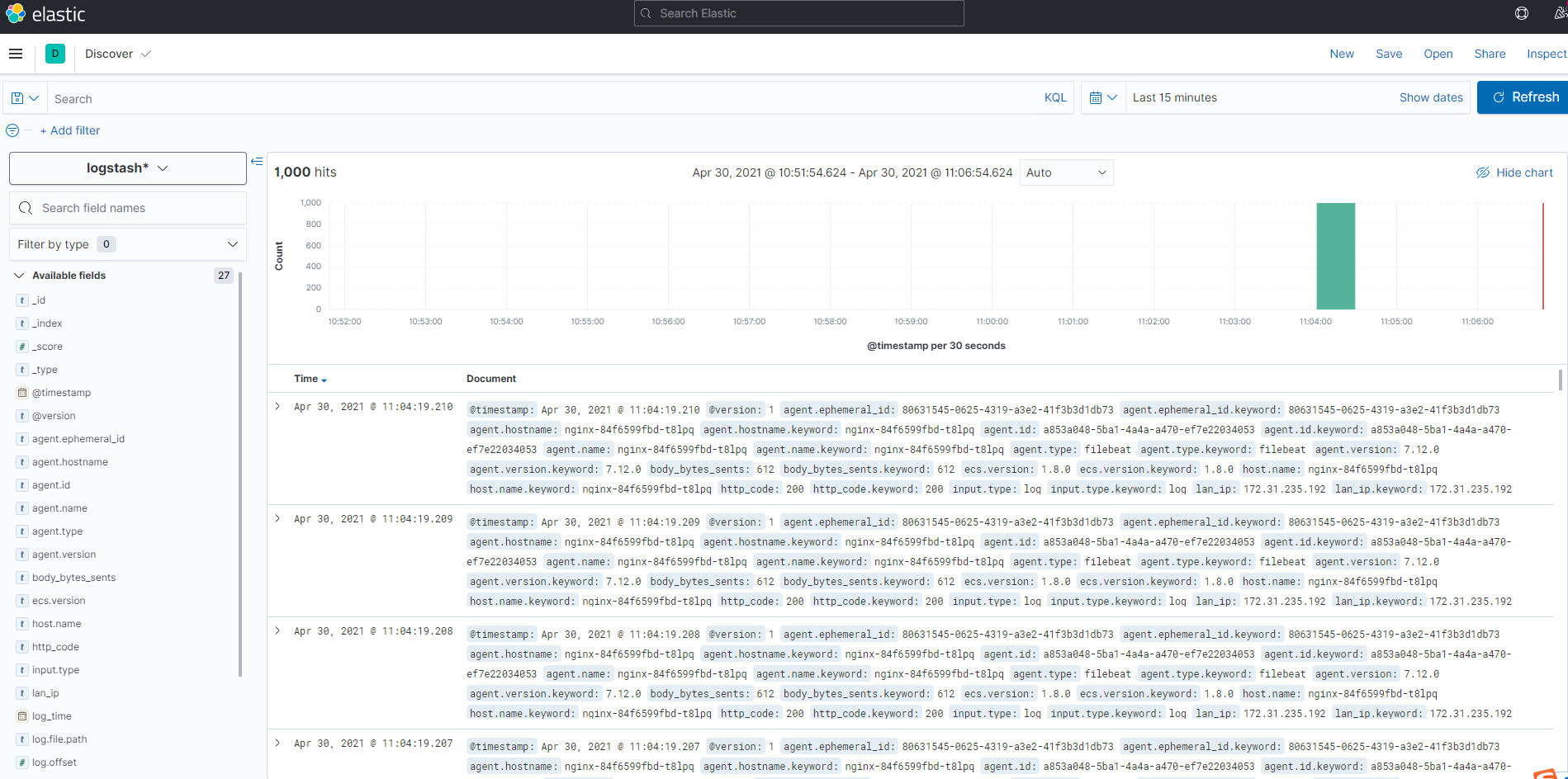

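Install a load-testing tool (ab, from httpd-tools) locally, send requests to the nginx container, and then view the logs in Kibana.

```bash
[root@k8s-master kibana]# yum -y install httpd-tools
[root@k8s-master kibana]# kubectl get pod -n halashow -owide | grep nginx
nginx-84f6599fbd-t8lpq 2/2 Running 0 17h 172.31.119.35 k8s-slave-1 <none> <none>
[root@k8s-master kibana]# ab -c 100 -n 1000 http://172.31.119.35:80/
```

To access kibana.com from outside the cluster, the domain must resolve to the IP of the ingress controller pod:

```bash
[root@k8s-master kibana]# kubectl get pod -n kube-system -owide | grep ingress
ingress-nginx-controller-6df896b675-r9dr7 1/1 Running 0 9d 192.168.29.28 k8s-slave-4 <none> <none>
```

Here that IP is 192.168.29.28, so for testing, a hosts-file entry such as `192.168.29.28 kibana.com` on the client machine is enough.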

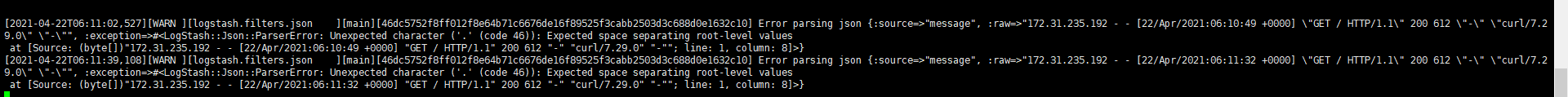

Problem encountered: this was caused by nginx not writing its logs in JSON format.