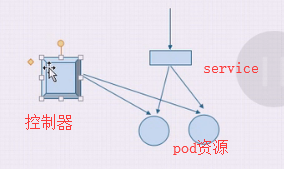

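Pod controllers:

ReplicationController: legacy, superseded by ReplicaSet;

ReplicaSet: the next generation of ReplicationController. It manages stateless pods and has three core parts: 1. the number of pod replicas the user wants (it creates or deletes pods so that exactly that number exists), 2. a label selector, which picks out the pod replicas it owns and manages, 3. a pod template, used to create new pods (which is what makes scaling up and down automatic). Kubernetes does not recommend using ReplicaSet directly and recommends Deployment instead;

Deployment: built on top of ReplicaSet. Besides everything ReplicaSet supports, it adds rolling updates and rollbacks and provides declarative configuration (you declare the desired state and can change that declaration while the application is running). It manages stateless applications (it cares about the group of pods, not any individual pod);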

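DaemonSet: for system-level functions; it runs exactly one of its Pods on each node of the cluster (optionally only on selected nodes). The application should be stateless and must be a daemon-style process (one that does not exit);

Job: runs a task to completion, such as a database backup; whether a Pod is recreated depends on whether the task has completed;

CronJob: runs Jobs periodically; it also has to decide what happens when the previous run has not finished by the time the next one is due (the concurrencyPolicy field: Allow, Forbid or Replace);

StatefulSet: for stateful applications where every instance has its own data to store; the operating steps are written as scripts in the Pod template, so they run automatically when an instance is restarted after a failure;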
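To make the "create when there are too few, delete when there are too many" behavior of a ReplicaSet concrete, here is a minimal Python model of one reconciliation pass. It is a sketch under simplifying assumptions, not the controller's actual code. `Pod`, `matches` and `reconcile` are hypothetical names, and only equality-based matchLabels is modeled. The real controller also ranks pods before choosing which surplus ones to delete, whereas this sketch simply drops the last ones.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    labels: dict = field(default_factory=dict)


def matches(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every selector key/value must be present on the pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def reconcile(desired: int, selector: dict, pods: list) -> tuple:
    """Return (number of pods to create, names of pods to delete)."""
    owned = [p for p in pods if matches(selector, p.labels)]
    missing = desired - len(owned)
    if missing > 0:
        return missing, []
    # too many (or exactly enough): delete the surplus, here simply the last ones
    return 0, [p.name for p in owned[desired:]]


sel = {"app": "myapp", "release": "canary"}
print(reconcile(2, sel, [Pod("myapp-a", dict(sel))]))  # (1, [])
```

Pods whose labels do not satisfy the selector are invisible to the ReplicaSet, which is why the label selector and the replica count work together.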

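Notes:

TPR (ThirdPartyResource): third-party resources, supported since 1.2 and deprecated in 1.7;

CRD (CustomResourceDefinition): custom resources, introduced in 1.7 as the replacement for TPR (TPR was removed in 1.8);

Operator: lets you encode operational know-how (the operating steps) into the cluster;

Helm: similar to yum on Linux; a package manager that simplifies deploying and operating applications;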

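kubectl explain rs # ReplicaSet, apps/v1, i.e. version v1 of the apps group

kubectl explain rs.spec # replicas (replica count) | selector | template

kubectl explain rs.spec.template # the Pod's metadata | spec. The labels defined in the template must match the selector; otherwise the controller would keep creating pods it never counts as its own until the cluster is overwhelmed (with apps/v1 the API server rejects such a manifest)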

kubectl explain rs.spec.template.spec
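The rule that template labels must satisfy the selector is a subset test, and extra template labels such as `environment: qa` are allowed. Below is a hedged sketch that covers matchLabels only (matchExpressions is ignored). `selector_matches` is a hypothetical helper, and the labels of the unrelated pod are made up for illustration. The same test explains why adding a label to an existing pod can make a ReplicaSet start counting it.

```python
def selector_matches(match_labels: dict, labels: dict) -> bool:
    """True if every key/value pair of matchLabels is present in labels."""
    return all(labels.get(key) == value for key, value in match_labels.items())


match_labels = {"app": "myapp", "release": "canary"}

# Template labels from rs-demo.yaml: the extra environment=qa label is fine.
template_labels = {"app": "myapp", "release": "canary", "environment": "qa"}
print(selector_matches(match_labels, template_labels))  # True

# A hypothetical pod that only has app=myapp is not selected ...
other_pod = {"app": "myapp", "tier": "frontend"}
print(selector_matches(match_labels, other_pod))  # False

# ... until release=canary is added to it.
other_pod["release"] = "canary"
print(selector_matches(match_labels, other_pod))  # True
```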

Example:

vim rs-demo.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        release: canary
        environment: qa
    spec:
      containers:
      - name: myapp-container
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80

kubectl create -f rs-demo.yaml

kubectl get rs

kubectl get pods --show-labels

kubectl describe pods myapp-XXX

curl 10.244.2.26

kubectl delete pods myapp-XXX # a replacement pod is created automatically

kubectl label pods pod-demo release=canary

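kubectl get pods --show-labels # only one myapp-XXX pod is left: pod-demo now matches the selector, so the ReplicaSet removed a surplus pod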

kubectl delete pods pod-demo

kubectl get pods

kubectl edit rs myapp # change replicas to 5 and the image to ikubernetes/myapp:v2

kubectl get pods

kubectl get rs -o wide

curl 10.244.1.17 # only pods recreated after the edit run the new version

kubectl delete pods myapp-XXX

kubectl get pods

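curl 10.244.2.28 # v2. This is a canary release done by hand: e.g. with 5 servers, first run v2 on 2 of them; if they run without problems for 2 days, shut down the other 3 one at a time and update them in turn

Note: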

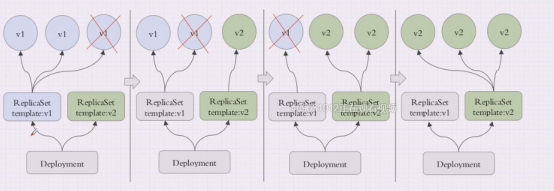

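Blue-green deployment: ReplicaSet 1 runs 2 pods with v1; ReplicaSet 2 is newly created, also with 2 pods running the new version; then the Service is repointed to the 2 pods of ReplicaSet 2;

Both the canary and blue-green releases above require manual intervention, whereas the Deployment controller is built to do this for us and also makes rollback easy;

Deployment:

provides customizable, controllable rolling updates (e.g. controlling the update granularity),

and controls the pace and the logic of the update;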
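The blue-green switch boils down to changing which labels the Service selects. This Python sketch assumes the two ReplicaSets tell their pods apart with a hypothetical `version` label. The pod names and the `endpoints` helper are illustrative, not Kubernetes APIs.

```python
def endpoints(service_selector: dict, pods: dict) -> list:
    """Names of the pods whose labels satisfy the Service's selector."""
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(k) == v for k, v in service_selector.items())
    )


# Hypothetical pods of the two ReplicaSets, distinguished by a "version" label.
pods = {
    "rs1-pod-a": {"app": "myapp", "version": "v1"},
    "rs1-pod-b": {"app": "myapp", "version": "v1"},
    "rs2-pod-a": {"app": "myapp", "version": "v2"},
    "rs2-pod-b": {"app": "myapp", "version": "v2"},
}

service_selector = {"app": "myapp", "version": "v1"}  # blue: traffic goes to rs1
print(endpoints(service_selector, pods))  # ['rs1-pod-a', 'rs1-pod-b']

service_selector["version"] = "v2"  # switch to green: traffic goes to rs2
print(endpoints(service_selector, pods))  # ['rs2-pod-a', 'rs2-pod-b']
```

Rolling back is the same operation in reverse: point the selector at `version: v1` again while ReplicaSet 1 is still running.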

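kubectl explain deploy # uses apps/v1

kubectl explain deploy.spec # adds strategy (the update strategy), revisionHistoryLimit (default 10), paused

kubectl explain deploy.spec.strategy # type is Recreate | RollingUpdate

kubectl explain deploy.spec.strategy.rollingUpdate # controls update granularity: maxSurge is how many pods may exist above the desired replica count during the update (a number or a percentage) | maxUnavailable is how many pods may be unavailable; the two cannot both be 0, otherwise the rolling update could never make progress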
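As we understand the upstream Deployment controller, a percentage maxSurge is rounded up, a percentage maxUnavailable is rounded down, and if both resolve to 0 through rounding, one unavailable pod is allowed. The Python sketch below applies those rules. `scale` and `rollout_bounds` are hypothetical names. With replicas 3 and the default 25%/25%, it gives at most 4 pods in total and at least 3 available.

```python
def scale(value, replicas: int, round_up: bool) -> int:
    """Resolve an absolute number or a percentage string such as "25%"."""
    if isinstance(value, str) and value.endswith("%"):
        product = int(value[:-1]) * replicas
        # integer ceiling / floor division avoids float rounding surprises
        return -(-product // 100) if round_up else product // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return (max total pods, min available pods) during a rolling update."""
    surge = scale(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = scale(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # both resolved to 0 via rounding (explicit 0/0 is rejected by validation):
        # allow one unavailable pod so the update can still progress
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rollout_bounds(3))        # (4, 3)
print(rollout_bounds(5, 1, 0))  # (6, 5)
```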

kubectl explain deploy.spec.template # the Pod's metadata | spec

Example:

vim deploy-demo.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      labels:
        app: myapp
        release: canary
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80

kubectl apply -f deploy-demo.yaml # can both create and update

kubectl get deploy

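kubectl get rs # created automatically by the Deployment: in myapp-deploy-69b47bc96d, 69b47bc96d is a hash of the pod template, not a random string, so the ReplicaSet can be traced back to its template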
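Why the suffix is stable: hashing the same template always gives the same value, and any change to the template gives a new value and therefore a new ReplicaSet. This Python sketch uses 32-bit FNV-1a over key-sorted JSON purely for illustration. Kubernetes itself hashes the template with FNV-32a together with a collision counter and encodes the result differently, so this sketch will not reproduce a name like 69b47bc96d. `fnv1a_32` and `template_hash` are hypothetical helpers.

```python
import json


def fnv1a_32(data: bytes) -> int:
    """32-bit FNV-1a hash."""
    h = 0x811C9DC5                       # FNV offset basis
    for byte in data:
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF  # FNV prime, kept to 32 bits
    return h


def template_hash(template: dict) -> str:
    """Hash a pod template; sorting keys makes the result independent of key order."""
    canonical = json.dumps(template, sort_keys=True, separators=(",", ":"))
    return format(fnv1a_32(canonical.encode("utf-8")), "08x")


v1 = {"labels": {"app": "myapp"}, "containers": [{"name": "myapp", "image": "ikubernetes/myapp:v1"}]}
v2 = {"labels": {"app": "myapp"}, "containers": [{"name": "myapp", "image": "ikubernetes/myapp:v2"}]}
print("myapp-deploy-" + template_hash(v1))
print("myapp-deploy-" + template_hash(v2))  # a different suffix: a new ReplicaSet
```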

kubectl get pods

vim deploy-demo.yaml # change replicas to 3

kubectl apply -f deploy-demo.yaml

kubectl get pods

kubectl describe deploy myapp-deploy # some Annotations are added and maintained automatically by apply; the default StrategyType is RollingUpdate, with RollingUpdateStrategy: 25% max unavailable, 25% max surge

kubectl get pods -w # watch for changes

kubectl get pods -l app=myapp -w

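vim deploy-demo.yaml # change the image to ikubernetes/myapp:v2

kubectl apply -f deploy-demo.yaml # watch the update in the terminal running -w

kubectl get rs -o wide

kubectl rollout history deployment myapp-deploy # rollout undo rolls back; rollout pause pauses a rollout

kubectl patch deployment myapp-deploy -p '{"spec":{"replicas":5}}' # modify the resource by applying a patch instead of editing the manifest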
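`kubectl patch` uses a strategic merge patch by default (`--type` also accepts `merge` and `json`). For patches that only touch map fields, like the two here, the result is the same as a plain JSON merge patch (RFC 7386). The two differ for lists. The sketch below implements only the JSON merge patch semantics, with a hypothetical `merge_patch` function.

```python
import copy


def merge_patch(target, patch):
    """Apply an RFC 7386 JSON merge patch and return a new object."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)          # non-objects replace the target wholesale
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)            # null deletes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


deploy = {"spec": {"replicas": 3, "selector": {"matchLabels": {"app": "myapp"}}}}
patched = merge_patch(deploy, {"spec": {"replicas": 5}})
print(patched["spec"])  # {'replicas': 5, 'selector': {'matchLabels': {'app': 'myapp'}}}
```

Only the fields named in the patch change; everything else in the object is kept.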

kubectl get pods

kubectl patch deployment myapp-deploy -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'
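With maxSurge 1 and maxUnavailable 0, the rollout adds one new pod, removes one old pod, and repeats, so the total never exceeds replicas + 1 and never drops below replicas. The Python simulation below is a simplified model, not the controller's algorithm. It assumes every new pod becomes ready immediately, and `rolling_update` is a hypothetical name.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list:
    """Return the (old, new) pod counts after each scale-up or scale-down step.

    Simplifying assumption: every new pod becomes ready immediately.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new, steps = replicas, 0, []
    while new < replicas or old > 0:
        # scale up: total pods may not exceed replicas + maxSurge
        can_add = min(replicas + max_surge - (old + new), replicas - new)
        if can_add > 0:
            new += can_add
            steps.append((old, new))
        # scale down: available pods may not drop below replicas - maxUnavailable
        can_remove = min(old + new - (replicas - max_unavailable), old)
        if can_remove > 0:
            old -= can_remove
            steps.append((old, new))
    return steps


for old, new in rolling_update(5, max_surge=1, max_unavailable=0):
    print(f"old={old} new={new}")
```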

kubectl describe deployment myapp-deploy # check RollingUpdateStrategy

kubectl set image deployment myapp-deploy myapp=ikubernetes/myapp:v3 && kubectl rollout pause deployment myapp-deploy # canary release: update the image, then pause right away so only some pods run v3

kubectl get pods -l app=myapp -w

kubectl rollout status deployment myapp-deploy

kubectl rollout resume deployment myapp-deploy

kubectl get rs -o wide

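kubectl rollout history deployment myapp-deploy # revisions 1, 2, 3

kubectl rollout undo deployment myapp-deploy --to-revision=1 # roll back to revision 1 (v1); revision 1 then disappears from the history and the restored template becomes revision 4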
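Revision 1 disappears after the undo because rolling back re-applies the old template as a brand-new revision. Deployments record the revision number in the `deployment.kubernetes.io/revision` annotation of the matching ReplicaSet. This Python sketch models only that bookkeeping and ignores revisionHistoryLimit trimming. `rollback` is a hypothetical function, and each template is reduced to its image name.

```python
def rollback(history: dict, to_revision=None) -> dict:
    """history maps revision number -> pod template (just an image name here).

    Rolling back re-applies an older template as a brand-new revision, so the
    old revision number disappears from the history.
    """
    if to_revision is None:
        # like `kubectl rollout undo` without --to-revision: go to the previous revision
        to_revision = sorted(history)[-2]
    if to_revision not in history:
        raise KeyError(f"revision {to_revision} not found")
    template = history[to_revision]
    new_history = {rev: t for rev, t in history.items() if rev != to_revision}
    new_history[max(history) + 1] = template
    return new_history


history = {1: "ikubernetes/myapp:v1", 2: "ikubernetes/myapp:v2", 3: "ikubernetes/myapp:v3"}
print(rollback(history, 1))
# {2: 'ikubernetes/myapp:v2', 3: 'ikubernetes/myapp:v3', 4: 'ikubernetes/myapp:v1'}
```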

kubectl get rs -o wide

kubectl explain ds

kubectl explain ds.spec

Example:

vim ds-demo.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
      role: logstore
  template:
    metadata:
      labels:
        app: redis
        role: logstore
    spec:
      containers:
      - name: redis
        image: redis:4.0-alpine
        ports:
        - name: redis
          containerPort: 6379
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat-ds
  namespace: default
spec:
  selector:
    matchLabels:
      app: filebeat
      release: stable
  template:
    metadata:
      labels:
        app: filebeat
        release: stable
    spec:
      containers:
      - name: filebeat
        image: ikubernetes/filebeat:5.6.5-alpine
        env:
        - name: REDIS_HOST
          value: redis.default.svc.cluster.local
        - name: REDIS_LOG_LEVEL
          value: info

docker pull ikubernetes/filebeat:5.6.5-alpine

docker image inspect ikubernetes/filebeat:5.6.5-alpine

kubectl explain pods.spec.containers

kubectl apply -f ds-demo.yaml

kubectl get pods

kubectl logs filebeat-ds-XXX

kubectl apply -f ds-demo.yaml

kubectl expose deployment redis --port=6379

kubectl get svc

kubectl get pods

kubectl exec -it redis-XXX-XXX -- /bin/sh

netstat -tnl

ls

nslookup redis.default.svc.cluster.local

redis-cli -h redis.default.svc.cluster.local

keys *

exit

kubectl exec -it filebeat-ds-XXX -- /bin/sh

ps aux

cat /etc/filebeat/filebeat.yml

printenv

kill -1 1 # send signal 1 (SIGHUP) to PID 1 to make it reload

kubectl describe ds filebeat-ds

kubectl get pods -l app=filebeat -o wide # only one runs per node
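The "one per node" guarantee can be modeled as a per-node version of the ReplicaSet diff. The Python sketch below is a simplification and not the DaemonSet controller's code. The node list stands in for whatever nodes are eligible (the real controller also considers node selectors, taints and so on), and the node and pod names and the `daemonset_diff` helper are hypothetical.

```python
def daemonset_diff(nodes: list, pods_by_node: dict) -> tuple:
    """nodes: eligible node names; pods_by_node: node -> daemon pod names on it.

    Returns (nodes that still need a pod, surplus pods to delete).
    """
    to_create = [n for n in nodes if not pods_by_node.get(n)]
    to_delete = []
    for node, pods in pods_by_node.items():
        if node not in nodes:
            to_delete.extend(pods)       # node is no longer eligible: remove its pods
        else:
            to_delete.extend(pods[1:])   # keep exactly one pod per node
    return to_create, to_delete


nodes = ["node1", "node2", "node3"]
pods = {"node1": ["ds-a"], "node2": ["ds-b", "ds-c"], "node4": ["ds-d"]}
print(daemonset_diff(nodes, pods))  # (['node3'], ['ds-c', 'ds-d'])
```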

kubectl explain ds.spec.updateStrategy # OnDelete | RollingUpdate

kubectl explain ds.spec.updateStrategy.rollingUpdate # only maxUnavailable in the version used here (newer releases also add maxSurge)

kubectl set image daemonsets filebeat-ds filebeat=ikubernetes/filebeat:5.6.6-alpine
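A DaemonSet's rollingUpdate.maxUnavailable defaults to 1, so by default the nodes are updated one at a time: the old pod on a node is deleted and its replacement created before the next node starts. This Python sketch simplifies that rolling window into fixed batches, assumes each replacement becomes ready, and does not model maxSurge. `ds_update_batches` and the node names are hypothetical.

```python
def ds_update_batches(nodes: list, max_unavailable: int = 1) -> list:
    """Group nodes into update batches of at most max_unavailable nodes each."""
    if max_unavailable < 1:
        raise ValueError("this sketch needs maxUnavailable >= 1")
    return [nodes[i:i + max_unavailable] for i in range(0, len(nodes), max_unavailable)]


print(ds_update_batches(["node1", "node2", "node3"]))     # [['node1'], ['node2'], ['node3']]
print(ds_update_batches(["node1", "node2", "node3"], 2))  # [['node1', 'node2'], ['node3']]
```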

kubectl get pods -w

kubectl explain pods.spec # hostNetwork <boolean>, hostIPC <boolean>, hostPID <boolean>: let the Pod share the node's network, IPC and PID namespaces