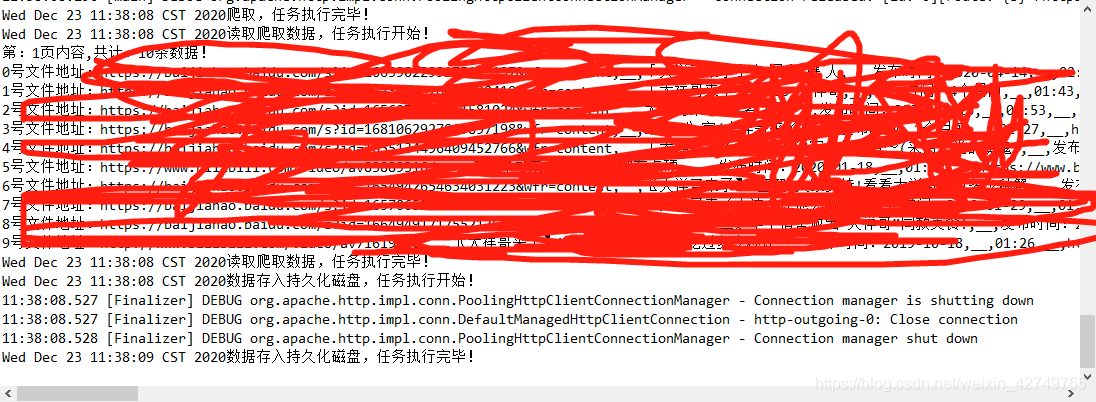

Result preview

Contents

Result preview

Introduction

Code

Dependencies

Fetching the page data

Parsing code

Notes on parsing

Full code

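Introduction

1. Pages are fetched with Apache HttpClient (HttpClients) and processed with org.jsoup.

2. To crawl several pages, loop over the page numbers and fetch each page in turn.

3. The fetched HTML is parsed with jsoup.

4. The extracted data is saved straight to a local file.

5. For saving the file as Excel, see my other article.

6. In the end you can start from the full code at the bottom; note that the spider method it calls for the save step is not included in this post.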
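
Step 2 can be sketched on its own. This is a minimal illustration, assuming the page number is simply appended to a base URL that ends with the paging parameter (as with pn= in the code in this post). The names PageUrls and pageUrls are made up for this example and are not part of the original code.

```java
import java.util.ArrayList;
import java.util.List;

class PageUrls {
    // Hypothetical helper: builds one URL per page by appending the page number
    // to a base URL that ends with the paging parameter (for example "...&pn=").
    static List<String> pageUrls(String baseUrl, int firstPage, int lastPage) {
        List<String> urls = new ArrayList<>();
        for (int page = firstPage; page <= lastPage; page++) {
            urls.add(baseUrl + page);
        }
        return urls;
    }

    public static void main(String[] args) {
        // Placeholder address, following the "xx" convention used in this post
        for (String url : pageUrls("https://www.xx.com/sf/vsearch?pn=", 1, 3)) {
            System.out.println(url);
        }
    }
}
```

Building the list first keeps the paging logic separate from the fetching, so the range of pages can be changed without touching the crawl loop.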

Code

Dependencies

import java.io.IOException;
import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import org.apache.http.HttpEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import java.io.File;
import java.io.FileOutputStream;
import java.io.OutputStream;
import org.apache.poi.xssf.usermodel.XSSFCell;
import org.apache.poi.xssf.usermodel.XSSFRow;
import org.apache.poi.xssf.usermodel.XSSFSheet;
import org.apache.poi.xssf.usermodel.XSSFWorkbook;
import com.alibaba.fastjson.JSONArray;

Calling loop

/**
 * Crawl task 001: crawl xx data.
 * Crawls 100 pages of the high-school xx subject, xx edition, from the xx site.
 *
 * @throws InterruptedException if the pause between requests is interrupted
 */
public static void crawlingTask001() throws InterruptedException {
    // ---------------------------------- task start --------------------------
    // Crawl the xx site, exam-paper section, text version, no other filters
    // Storage: one list of records per page
    List<List<String>> list0 = new ArrayList<List<String>>();
    // ---------------------------------- crawl task --------------------------
    System.out.println(new Date() + " crawl task started");
    // Fetch the data. As written the loop only fetches page 1;
    // raise the upper bound to crawl more pages.
    for (int i = 1; i < 2; i++) {
        Thread.sleep(500); // pause between requests
        String url = "https://www.xx.com/sf/vsearch?pd=igI&rsv_bp=1&f=8&async=1&pn=" + i;
        List<String> list = crawlingData(url);
        list0.add(list);
    }
    System.out.println(new Date() + " crawl task finished");
    // ---------------------------------- read crawled data task --------------------------
    System.out.println(new Date() + " read crawled data task started");
    JSONArray ja = new JSONArray();
    // Read the data
    for (int i = 0; i < list0.size(); i++) {
        List<String> list01 = list0.get(i);
        System.out.println("Page " + (i + 1) + ": " + list01.size() + " records");
        for (int j = 0; j < list01.size(); j++) {
            System.out.println("Record " + j + ": " + list01.get(j));
            ja.add(list01.get(j));
            // TODO 1. read each linked page here 2. extract the images from its content
            // 3. download them locally and generate the matching folder and Word document
        }
    }
    System.out.println(new Date() + " read crawled data task finished");
    // ---------------------------------- save to disk task --------------------------
    System.out.println(new Date() + " save to disk task started");
    // NOTE: spider(JSONArray) is not included in this post
    spider(ja);
    System.out.println(new Date() + " save to disk task finished");
    // ---------------------------------- task end --------------------------
}
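
The spider(ja) call above performs the save step, but its body is not shown in this post. As a stand-in only, here is a minimal sketch that writes the records to a plain UTF-8 text file with the JDK alone, assuming a text file is acceptable in place of Excel. RecordFile, save and load are hypothetical names; this is not the original spider method.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

class RecordFile {
    // Hypothetical stand-in for the save step: writes one record per line as UTF-8 text.
    static void save(List<String> records, Path target) {
        try {
            Files.write(target, records, StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Reads the records back, one per line.
    static List<String> load(Path source) {
        try {
            return Files.readAllLines(source, StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Placeholder records in the ",__," format used by crawlingData
        List<String> records = Arrays.asList(
                "https://www.xx.com/v/1,__,first title",
                "https://www.xx.com/v/2,__,second title");
        Path target = Paths.get(System.getProperty("java.io.tmpdir"), "crawl-records.txt");
        save(records, target);
        System.out.println("saved " + load(target).size() + " records to " + target);
    }
}
```

Writing plain text first makes it easy to check what was crawled before converting it to a spreadsheet.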

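Fetching the page data

// Fetch the HTML content of the page at the given URL. The code from the
// previous section is wrapped in a method here so that it is easy to call.
public static String getHTMLContent(String url) throws IOException {
    // Send the request with HTTP GET
    HttpGet httpGet = new HttpGet(url);
    // Set the User-Agent request header
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
    // try-with-resources closes the client and the response even if the request fails
    try (CloseableHttpClient httpClient = HttpClients.createDefault();
         CloseableHttpResponse response = httpClient.execute(httpGet)) {
        // Get the entity from the response
        HttpEntity entity = response.getEntity();
        // Fall back to UTF-8 when the response does not declare a charset
        String content = EntityUtils.toString(entity, "UTF-8");
        // Make sure the entity stream is fully consumed and closed
        EntityUtils.consume(entity);
        return content;
    }
}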

Parsing code

/**
 * Crawl one result page and extract the URL and details of each entry.
 *
 * @param url address of the result page
 * @return one ",__,"-separated record per entry; empty if the page could not be fetched
 */
public static List<String> crawlingData(String url) {
    String rawHTML = null;
    try {
        rawHTML = getHTMLContent(url);
    } catch (IOException e) {
        e.printStackTrace();
        System.out.println(e.toString());
        // Nothing to parse if the request failed
        return new ArrayList<String>();
    }
    // System.out.println("fetched page: " + rawHTML);
    // Convert the page into a Jsoup Document
    Document doc = Jsoup.parse(rawHTML);
    // Select every entry block in the result list
    // Elements blogList = doc.select("p[class=content]");
    Elements blogList = doc.select("div[class=video_list video_short]");
    // System.out.println("parsed list: " + rawHTML);
    List<String> list = new ArrayList<String>();
    // Parse each entry and collect its fields
    for (Element element : blogList) {
        String href = element.select("a[class=small_img_con border-radius]").attr("href");
        String vidoeTime = element.select("div[class=time_con]").select("span[class=video_play_timer]").text();
        String name = element.select("div[class=video_small_intro]").select("a[class=video-title c-link]").text();
        String ly = element.select("div[class=c-color-gray2 video-no-font]").select("span[class=wetSource c-font-normal]").text();
        String uploadtime = element.select("div[class=c-color-gray2 video-no-font]").select("span[class=c-font-normal]").text();
        // Fields: href, name, upload time, duration, href, href, source
        list.add(href
                + ",__," + name
                + ",__," + uploadtime
                + ",__," + vidoeTime
                + ",__," + href
                + ",__," + href
                + ",__," + ly);
        // System.out.println(href + ",__," + name+ ",__," + datetime+ ",__," + uploadname+ ",__," + uploadnamemasterurl);
    }
    return list;
}
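
Each record returned by crawlingData is a single string joined with ",__,", so whatever consumes it has to split it again. A minimal sketch of that step, assuming no field itself contains the separator; RecordFields and fields are hypothetical names, not part of the original code.

```java
import java.util.regex.Pattern;

class RecordFields {
    // Separator used when the record is built in crawlingData
    static final String SEPARATOR = ",__,";

    // Splits one record back into its fields.
    // The -1 limit keeps trailing empty fields, e.g. when the source is blank.
    static String[] fields(String record) {
        return record.split(Pattern.quote(SEPARATOR), -1);
    }

    public static void main(String[] args) {
        // Placeholder record in the same field order: href, name, upload time,
        // duration, href, href, source
        String record = "https://www.xx.com/v/1,__,title,__,2020-01-01,__,03:15"
                + ",__,https://www.xx.com/v/1,__,https://www.xx.com/v/1,__,xx";
        String[] f = fields(record);
        System.out.println("name = " + f[1] + ", duration = " + f[3] + ", source = " + f[6]);
    }
}
```

Pattern.quote is used because String.split takes a regular expression; quoting keeps the separator literal if it is ever changed to something with special characters.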

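Notes on parsing

1. element.select("div[class=time_con]") works much like a jQuery selector in JavaScript. The [class=...] form matches the whole class attribute, so a multi-class value such as "video_list video_short" has to be written out in full.

2. For parent and child levels, chain the calls: select("xx").select("yy").

3. Read the result with attr("xx") or text().

4. The jsoup and POI Maven dependencies can be taken from any current listing online; no particular release is needed. The imports above do assume the HttpClient 4.x packages (org.apache.http) and fastjson 1.x (com.alibaba.fastjson), so stay on those major versions.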

Full code

package com.superman.test;

import java.io.IOException;
import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import org.apache.http.HttpEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import java.io.File;
import java.io.FileOutputStream;
import java.io.OutputStream;
import org.apache.poi.xssf.usermodel.XSSFCell;
import org.apache.poi.xssf.usermodel.XSSFRow;
import org.apache.poi.xssf.usermodel.XSSFSheet;
import org.apache.poi.xssf.usermodel.XSSFWorkbook;
import com.alibaba.fastjson.JSONArray;

/**
 * Crawls xx data: searches videos across the web for the keyword xx.
 *
 * Calls the xx search.
 * Crawl service: crawls video-site data by keyword
 * to collect infringement leads.
 *
 * Exports to Excel.
 *
 * @author jianghy
 */
public class DataLearnerCrawlerSPWZv2 {

    public static void main(String[] args) throws InterruptedException {
        crawlingTask001();
    }

    /**
     * Crawl task 001: crawl xx data.
     * Crawls 100 pages of the xxxx text version.
     *
     * @throws InterruptedException if the pause between requests is interrupted
     */
    public static void crawlingTask001() throws InterruptedException {
        // ---------------------------------- task start --------------------------
        // Crawl xx, xx section, text version, no other filters
        // Storage: one list of records per page
        List<List<String>> list0 = new ArrayList<List<String>>();
        // ---------------------------------- crawl task --------------------------
        System.out.println(new Date() + " crawl task started");
        // Fetch the data. As written the loop only fetches page 1;
        // raise the upper bound to crawl more pages.
        for (int i = 1; i < 2; i++) {
            Thread.sleep(500); // pause between requests
            String url = "https://www.xx.com/sf/vsearch?pd=video&tn=vsearch&lid=b3581e7000011f50&ie=utf-8&rsv_pq=b3581e7000011f50&wdxhJiHsELdcqFigI&rsv_bp=1&f=8&async=1&pn=" + i;
            List<String> list = crawlingData(url);
            list0.add(list);
        }
        System.out.println(new Date() + " crawl task finished");
        // ---------------------------------- read crawled data task --------------------------
        System.out.println(new Date() + " read crawled data task started");
        JSONArray ja = new JSONArray();
        // Read the data
        for (int i = 0; i < list0.size(); i++) {
            List<String> list01 = list0.get(i);
            System.out.println("Page " + (i + 1) + ": " + list01.size() + " records");
            for (int j = 0; j < list01.size(); j++) {
                System.out.println("Record " + j + ": " + list01.get(j));
                ja.add(list01.get(j));
                // TODO 1. read each linked page here 2. extract the images from its content
                // 3. download them locally and generate the matching folder and Word document
            }
        }
        System.out.println(new Date() + " read crawled data task finished");
        // ---------------------------------- save to disk task --------------------------
        System.out.println(new Date() + " save to disk task started");
        // NOTE: spider(JSONArray) is not included in this post
        spider(ja);
        System.out.println(new Date() + " save to disk task finished");
        // ---------------------------------- task end --------------------------
    }

    /**
     * Crawl one result page and extract the URL and details of each entry.
     *
     * @param url address of the result page
     * @return one ",__,"-separated record per entry; empty if the page could not be fetched
     */
    public static List<String> crawlingData(String url) {
        String rawHTML = null;
        try {
            rawHTML = getHTMLContent(url);
        } catch (IOException e) {
            e.printStackTrace();
            System.out.println(e.toString());
            // Nothing to parse if the request failed
            return new ArrayList<String>();
        }
        // System.out.println("fetched page: " + rawHTML);
        // Convert the page into a Jsoup Document
        Document doc = Jsoup.parse(rawHTML);
        // Select every entry block in the result list
        // Elements blogList = doc.select("p[class=content]");
        Elements blogList = doc.select("div[class=video_list video_short]");
        // System.out.println("parsed list: " + rawHTML);
        List<String> list = new ArrayList<String>();
        // Parse each entry and collect its fields
        for (Element element : blogList) {
            String href = element.select("a[class=small_img_con border-radius]").attr("href");
            String vidoeTime = element.select("div[class=time_con]").select("span[class=video_play_timer]").text();
            String name = element.select("div[class=video_small_intro]").select("a[class=video-title c-link]").text();
            String ly = element.select("div[class=c-color-gray2 video-no-font]").select("span[class=wetSource c-font-normal]").text();
            String uploadtime = element.select("div[class=c-color-gray2 video-no-font]").select("span[class=c-font-normal]").text();
            // Fields: href, name, upload time, duration, href, href, source
            list.add(href
                    + ",__," + name
                    + ",__," + uploadtime
                    + ",__," + vidoeTime
                    + ",__," + href
                    + ",__," + href
                    + ",__," + ly);
            // System.out.println(href + ",__," + name+ ",__," + datetime+ ",__," + uploadname+ ",__," + uploadnamemasterurl);
        }
        return list;
    }

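    // Fetch the HTML content of the page at the given URL. The code from the
    // previous section is wrapped in a method here so that it is easy to call.
    public static String getHTMLContent(String url) throws IOException {
        // Send the request with HTTP GET
        HttpGet httpGet = new HttpGet(url);
        // Set the User-Agent request header
        httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
        // try-with-resources closes the client and the response even if the request fails
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            // Get the entity from the response
            HttpEntity entity = response.getEntity();
            // Fall back to UTF-8 when the response does not declare a charset
            String content = EntityUtils.toString(entity, "UTF-8");
            // Make sure the entity stream is fully consumed and closed
            EntityUtils.consume(entity);
            return content;
        }
    }

}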

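Many values in this post have been replaced with xx; substitute the details of the site you want to crawl before using the code.

If you need anything, leave a comment any time and we can chat ^_^

OK, more updates to come.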