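The most important and most central part of RAC is its memory mechanism: data blocks cached in memory have to be shipped between instances over the network (Oracle calls this Cache Fusion). Shipping the blocks is not the problem in itself. The complexity comes from keeping them consistent. Take a six-node RAC: users may each connect to a different instance, and every instance ends up working on some data blocks, so the same block can have a copy in all six instances. RAC can balance the load across the instances, but to keep the data correct Oracle needs an elaborate mechanism that keeps every modification of a block across the six instances under control, together with a set of locks that guarantee consistency.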

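To keep blocks consistent, an instance must acquire a resource before it accesses a data block. This resource governs access to the block and is effectively a lock. The lock mode depends on the type of access: a query requests a shared lock, while modifying a block requires an exclusive lock.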
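The shared/exclusive rule above can be sketched as a compatibility check. This is a simplified model, assuming only the two lock modes described here plus N (no lock held); the real Global Cache Service keeps additional state that is ignored here, and the function name `lock_request` is hypothetical.

```shell
#!/bin/sh
# Simplified model of block lock compatibility (an assumption, not Oracle's implementation).
# Modes: N = no lock held, S = shared (queries), X = exclusive (modifications).
# Usage: lock_request <mode held by another instance> <mode requested>
# Prints "grant" if the request is compatible, "wait" otherwise.
lock_request() {
  held=$1
  requested=$2
  case "$held:$requested" in
    N:S | N:X | S:S) echo grant ;;  # nothing held, or readers sharing the block
    *)               echo wait  ;;  # any combination involving X conflicts
  esac
}

# Example: two instances may read the same block at once, but a writer must wait.
lock_request S S   # grant
lock_request S X   # wait
```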

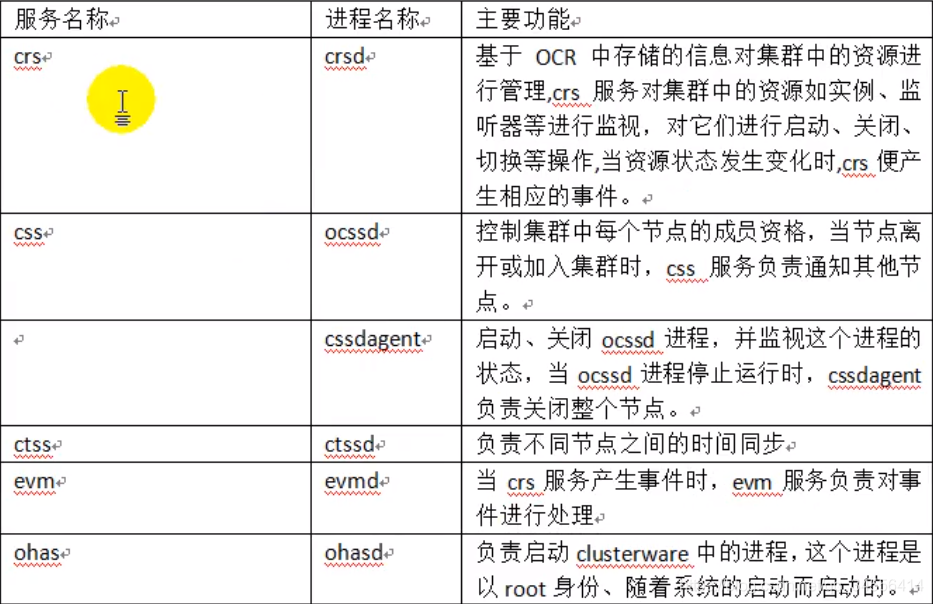

RAC daemons

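(The OCR stores the cluster configuration. For time synchronization, 11gR2 Clusterware includes its own Cluster Time Synchronization Service (CTSS): when NTP is not configured, CTSS actively keeps the node clocks in sync; when NTP is configured, CTSS only runs in observer mode.)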

The daemons are started by the scripts /etc/init.d/init.*:

[root@rac3 init.d]# ls init.*

init.crs init.crsd init.cssd init.evmd

[root@rac3 init.d]# ./init.crs

Usage: ./init.crs {stop|start|enable|disable}

[root@RAC1 init.d]# ps -ef | grep css

root 2319 1 0 21:09 ? 00:00:01 /u01/app/11.2.0/grid/bin/cssdmonitor

root 2335 1 0 21:09 ? 00:00:01 /u01/app/11.2.0/grid/bin/cssdagent

grid 2346 1 0 21:09 ? 00:00:07 /u01/app/11.2.0/grid/bin/ocssd.bin

root 4557 2499 0 21:22 pts/0 00:00:00 grep css

[root@RAC1 init.d]# ps -ef | grep crs

root 2915 1 1 21:11 ? 00:00:08 /u01/app/11.2.0/grid/bin/crsd.bin

root 4559 2499 0 21:22 pts/0 00:00:00 grep crs

[root@RAC1 init.d]# ps -ef | grep evmd

grid 2586 1 0 21:10 ? 00:00:03 /u01/app/11.2.0/grid/bin/evmd.bin

root 4632 2499 0 21:23 pts/0 00:00:00 grep evmd

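init.crs is the script that starts init.crsd, init.cssd and init.evmd, so starting CRS means starting these three core daemons. (This script layout comes from 10g/11gR1; in 11gR2, as in the ps output above, the stack is started through init.ohasd instead.)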

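CRS (Cluster Ready Services) is the process that manages all of the database resources. CSS (Cluster Synchronization Services) maintains the relationship between the nodes: it uses the voting disk to determine the state of each node and evicts a node from the cluster when its state is abnormal. EVMD (Event Manager) handles cluster events. Together, these three daemons build the CRS platform.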
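A quick way to confirm that the three core daemons are up is to look for their 11gR2 binaries (crsd.bin, ocssd.bin and evmd.bin, as in the ps output above) in the process list. This is a minimal sketch; the helper name `check_crs_daemons` is hypothetical, and it reads `ps -ef` output on standard input so it can be tried without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: reads `ps -ef` output on stdin and reports any missing
# core Clusterware daemon binary. Returns 0 if all three are present, 1 otherwise.
check_crs_daemons() {
  ps_out=$(cat)
  missing=0
  for bin in crsd.bin ocssd.bin evmd.bin; do
    # Look for the binary as a path component, ignoring grep's own processes.
    if ! printf '%s\n' "$ps_out" | grep -v grep | grep -q "/$bin"; then
      echo "missing: $bin"
      missing=1
    fi
  done
  return $missing
}

# Usage on a cluster node:
#   ps -ef | check_crs_daemons && echo "CRS, CSS and EVM daemons are running"
```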

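By default these three daemons start automatically when the operating system boots. That is why CRS is always found running after every OS restart: the daemons above are all started automatically.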

[grid@RAC1 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

Checking the status of each component (the commands are in /u01/app/11.2.0/grid/bin/)

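• Node layer (olsnodes)

• Network layer (oifcfg)

• Cluster layer: manages RAC's own underlying components, such as the OCR and the voting disks (crsctl for managing cluster components, plus ocrcheck, ocrdump, ocrconfig and crs_stat -t)

• Application layer: the resources registered in RAC, such as databases, listeners and virtual IPs (srvctl for managing cluster resources)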

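srvctl and crsctl are easily confused. Once you remember that they operate on different objects (crsctl on cluster components, srvctl on the resources registered in the cluster), the confusion goes away.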

Node layer: olsnodes

[grid@rac1 ~]$ olsnodes  # list the nodes in the cluster

rac1

rac2

[grid@rac1 ~]$ olsnodes -h  # use -h to see the other options of olsnodes

Usage: olsnodes [ [-n] [-i] [-s] [-t] [<node> | -l [-p]] | [-c] ] [-g] [-v]

where

-n print node number with the node name

-p print private interconnect address for the local node

-i print virtual IP address with the node name

<node> print information for the specified node

-l print information for the local node

-s print node status - active or inactive

-t print node type - pinned or unpinned

-g turn on logging

-v Run in debug mode; use at direction of Oracle Support only.

-c print clusterware name

[grid@rac1 ~]$ olsnodes -i -n -s  # both nodes are Active; a node that is down would be reported as Inactive

rac1 1 rac1-vip Active

rac2 2 rac2-vip Active
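Because a node that has dropped out of the cluster shows up as Inactive in this listing, the output is easy to check from a script. A minimal sketch, assuming the four-column `olsnodes -n -i -s` format shown above; `inactive_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `olsnodes -n -i -s` output (name, number, VIP, status)
# on stdin and prints the names of nodes whose status is not "Active".
inactive_nodes() {
  awk 'NF >= 2 && $NF != "Active" { print $1 }'
}

# Usage: olsnodes -n -i -s | inactive_nodes
```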

[root@rac1 ~]# olsnodes -p -l  # root can run this grid command because the grid bin directory is on root's PATH (see the PATH line in .bash_profile below)

rac1 10.10.10.1

[root@rac1 ~]# cat .bash_profile

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

PATH=$PATH:$HOME/bin:/u01/app/11.2.0/grid/bin

export PATH
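Appending to PATH as above adds the grid directory again each time the profile is sourced. A minimal sketch of an idempotent alternative, assuming the same grid home path; the function name `add_to_path` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: append a directory to PATH only if it is not already there.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present: leave PATH unchanged
    *) PATH=$PATH:$1 ;;
  esac
  export PATH
}

# In root's .bash_profile, instead of hard-coding the directory in PATH=...:
#   add_to_path /u01/app/11.2.0/grid/bin
```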

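Network layer: oifcfg (queries, defines and modifies the network interface attributes that Oracle Clusterware needs, including each interface's subnet, netmask and interface type)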

[grid@rac1 ~]$ oifcfg iflist  # list the network interfaces and their subnets

eth0 192.168.11.0

eth1 10.10.10.0

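eth1 169.254.0.0  # created by Oracle during installation (the HAIP link-local address on the private interconnect); it corresponds to eth1:1 in the ifconfig output below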

[grid@rac1 ~]$ ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:30:BB:9C

inet addr:192.168.11.100 Bcast:192.168.11.255 Mask:255.255.255.0

eth0:1 Link encap:Ethernet HWaddr 00:0C:29:30:BB:9C

inet addr:192.168.11.101 Bcast:192.168.11.255 Mask:255.255.255.0

eth1 Link encap:Ethernet HWaddr 00:0C:29:30:BB:A6

inet addr:10.10.10.1 Bcast:10.10.10.255 Mask:255.255.255.0

eth1:1 Link encap:Ethernet HWaddr 00:0C:29:30:BB:A6

inet addr:169.254.192.118 Bcast:169.254.255.255 Mask:255.255.0.0

[root@rac2 ~]# ping 169.254.192.118  # the address is reachable from node 2

PING 169.254.192.118 (169.254.192.118) 56(84) bytes of data.

64 bytes from 169.254.192.118: icmp_seq=1 ttl=64 time=0.212 ms

[grid@rac1 ~]$ oifcfg getif

eth0 192.168.11.0 global public  # public network: the public IP and the virtual IP are what external clients connect to

eth1 10.10.10.0 global cluster_interconnect  # private network the nodes use to communicate with each other
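When checking interconnect problems, the first question is usually which interface carries the interconnect. A minimal sketch that extracts it from `oifcfg getif` output, assuming the four-column format shown above; `interconnect_ifaces` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `oifcfg getif` output (interface, subnet, scope, type)
# on stdin and prints the interface and subnet used for the private interconnect.
interconnect_ifaces() {
  awk '$4 == "cluster_interconnect" { print $1, $2 }'
}

# Usage: oifcfg getif | interconnect_ifaces
```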

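Cluster layer (mainly crsctl, which operates on cluster components such as CRS and CSS, plus ocrcheck, ocrdump, ocrconfig and crs_stat -t; crs_stat is deprecated in 11gR2, and crsctl stat res -t is its replacement)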

[grid@rac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.ARCHIVE.dg ora....up.type ONLINE ONLINE rac1

ora.DATA.dg ora....up.type ONLINE ONLINE rac1

ora.FRA.dg ora....up.type ONLINE ONLINE rac1

ora....ER.lsnr ora....er.type ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type ONLINE ONLINE rac2

ora.asm ora.asm.type ONLINE ONLINE rac1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type OFFLINE OFFLINE

ora.ons ora.ons.type ONLINE ONLINE rac1

ora.oradb.db ora....se.type ONLINE ONLINE rac1

ora....SM1.asm application ONLINE ONLINE rac1

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application OFFLINE OFFLINE

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application OFFLINE OFFLINE

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type ONLINE ONLINE rac2

ora....ry.acfs ora....fs.type ONLINE ONLINE rac1

ora.scan1.vip ora....ip.type ONLINE ONLINE rac2
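Target is the state Clusterware is trying to reach and State is the current one, so a row where they differ points at a resource that failed to start or stop. A minimal sketch that lists such rows, assuming the five-column `crs_stat -t` layout above; `crs_stat_mismatch` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `crs_stat -t` output (Name Type Target State Host)
# on stdin and prints every resource whose State differs from its Target.
crs_stat_mismatch() {
  awk '($3 == "ONLINE" || $3 == "OFFLINE") && $4 != $3 { print $1, "target=" $3, "state=" $4 }'
}

# Usage: crs_stat -t | crs_stat_mismatch
```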

[grid@rac1 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCHIVE.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.FRA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.registry.acfs

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2

ora.oc4j

1 OFFLINE OFFLINE

ora.oradb.db

1 ONLINE ONLINE rac1 Open

2 OFFLINE OFFLINE Instance Shutdown

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac2
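The `crsctl stat res -t` layout spreads each resource over several lines (the name, then one line per node or per cardinality number), which makes it harder to grep than `crs_stat -t`. A minimal sketch that collapses it into a list of resources with at least one entry in the OFFLINE state, assuming the layout shown above; `offline_resources` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `crsctl stat res -t` output on stdin and prints the
# names of resources that have at least one entry in the OFFLINE state.
offline_resources() {
  awk '
    /^ora\./ { res = $1; next }        # a resource name line starts a new block
    /^[0-9]+ / { sub(/^[0-9]+ /, "") } # cluster resources prefix entries with a number
    ($1 == "ONLINE" || $1 == "OFFLINE") && $2 == "OFFLINE" { print res }
  ' | LC_ALL=C sort -u
}

# Usage: crsctl stat res -t | offline_resources
```

On this cluster it would flag ora.gsd and ora.oc4j (both intentionally offline) as well as ora.oradb.db, whose second instance is shut down.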

[grid@rac1 ~]$ crsctl check crs  # status of the CRS, CSS, EVM and OHAS components on the local node

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@rac1 ~]$ crsctl check ctss  # the time synchronization service can also be checked on its own

CRS-4700: The Cluster Time Synchronization Service is in Observer mode.

[grid@rac1 ~]$ crsctl check cluster -all  # status of the components on all nodes

**************************************************************

rac1:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************
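On a larger cluster the per-node blocks above are easier to scan with a filter. A minimal sketch that prints only the lines that are not "online", tagged with their node, assuming the `<node>:` header layout shown above; `cluster_not_online` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `crsctl check cluster -all` output on stdin and prints
# "<node>: <message>" for every CRS-nnnn line that does not end in "is online".
# Exit status is 1 if any such line was found, 0 otherwise.
cluster_not_online() {
  awk '
    /^[^ ]+:$/ { node = substr($0, 1, length($0) - 1); next }  # e.g. "rac1:"
    /^CRS-[0-9]+:/ && !/is online$/ { print node ": " $0; bad = 1 }
    END { exit bad ? 1 : 0 }
  '
}

# Usage: crsctl check cluster -all | cluster_not_online || echo "some component is down"
```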

The following cluster start and stop commands are all run as root.

[root@rac1 ~]# crsctl stop cluster  # stop the cluster services on the local node (OHASD keeps running)

CRS-2673: Attempting to stop 'ora.crsd' on 'rac1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac1'

[root@rac1 ~]# crsctl start cluster  # start the cluster services on the local node

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-4622: Oracle High Availability Services autostart is enabled.

[root@rac1 ~]# crsctl stop has  # stop the whole Clusterware stack on the local node, including OHASD

[root@rac1 ~]# crsctl stop crs  # likewise stops the whole local stack, including OHASD

[root@rac1 ~]# crsctl stop cluster -all  # stop the cluster services on all nodes

[root@rac1 ~]# crsctl enable crs  # enable automatic startup of the cluster services at boot

[root@rac1 ~]# crsctl enable -h

Usage:

crsctl enable crs

Enable OHAS autostart on this server

Application layer management (SRVCTL)

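This tool manages the following resources: Database, Instance, ASM, Service, Listener and Node Application, where the node applications comprise GSD, ONS and VIP. Besides being managed uniformly through srvctl, some of these resources also have their own tools: ONS can be managed with onsctl, and the listener with lsnrctl.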

[root@rac1 ~]# srvctl -h

Usage: srvctl [-V]

Usage: srvctl add database -d <db_unique_name> -o <oracle_home> [-c {RACONENODE | RAC | SINGLE} [-e <server_list>] [-i <inst_name>] [-w <timeout>]] [-m <domain_name>] [-p <spfile>] [-r {PRIMARY | PHYSICAL_STANDBY | LOGICAL_STANDBY | SNAPSHOT_STANDBY}] [-s <start_options>] [-t <stop_options>] [-n <db_name>] [-y {AUTOMATIC | MANUAL | NORESTART}] [-g "<serverpool_list>"] [-x <node_name>] [-a "<diskgroup_list>"] [-j "<acfs_path_list>"]

Usage: srvctl config database [-d <db_unique_name> [-a] ] [-v]

Usage: srvctl start database -d <db_unique_name> [-o <start_options>] [-n <node>]

Usage: srvctl stop database -d <db_unique_name> [-o <stop_options>] [-f]

Usage: srvctl status database -d <db_unique_name> [-f] [-v]

Usage: srvctl enable database -d <db_unique_name> [-n <node_name>]

Usage: srvctl disable database -d <db_unique_name> [-n <node_name>]

(1) View the databases, instances and other resources registered in CRS

srvctl config database  # list the configured databases

srvctl config database -d databasename  # detailed configuration of one database

srvctl config database -d racdb -a

srvctl config listener -n rac3

srvctl config asm -n rac3

[root@rac1 ~]# srvctl config asm -n rac1

Warning:-n option has been deprecated and will be ignored.

ASM home: /u01/app/11.2.0/grid

ASM listener: LISTENER

[root@rac1 ~]# srvctl config listener -n rac1

Warning:-n option has been deprecated and will be ignored.

Name: LISTENER

Network: 1, Owner: grid

Home: <CRS home>

End points: TCP:1521

(2) View resource status

srvctl status database -d database_name

srvctl status instance -d database_name -i instance_name [,instance_name-list]

[root@rac1 ~]# srvctl status database -d oradb  # status of the database on all nodes

Instance oradb1 is running on node rac1

Instance oradb2 is not running on node rac2

[root@rac1 ~]# srvctl status database -d oradb -v  # -v shows detailed status

Instance oradb1 is running on node rac1. Instance status: Open.

Instance oradb2 is not running on node rac2
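The "is not running" lines are the ones worth alerting on. A minimal sketch that extracts the stopped instances and their nodes, assuming the message format shown above; `stopped_instances` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `srvctl status database -d <db>` output on stdin and
# prints "<instance> <node>" for each instance that is not running.
stopped_instances() {
  awk '/ is not running on node / { print $2, $NF }'
}

# Usage: srvctl status database -d oradb | stopped_instances
```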

[root@rac1 ~]# srvctl status instance -d oradb -i oradb1  # check a single instance

Instance oradb1 is running on node rac1

[root@rac1 ~]# srvctl status instance -d oradb -i oradb2

Instance oradb2 is not running on node rac2

[root@rac1 ~]# srvctl status nodeapps  # check the node applications

VIP rac1-vip is enabled

VIP rac1-vip is running on node: rac1

VIP rac2-vip is enabled

VIP rac2-vip is running on node: rac1

Network is enabled

Network is running on node: rac1

Network is not running on node: rac2

GSD is disabled

GSD is not running on node: rac1

GSD is not running on node: rac2

ONS is enabled

ONS daemon is running on node: rac1

ONS daemon is not running on node: rac2
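In the output above, rac2-vip is running on rac1: when a node's stack goes down, Clusterware fails its VIP over to a surviving node so that clients get an immediate connection error instead of waiting for a TCP timeout. A minimal sketch that detects such relocated VIPs, assuming VIPs are named `<node>-vip` as in this cluster; `relocated_vips` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `srvctl status nodeapps` output on stdin and prints
# "<vip> <current node>" for each VIP running away from its home node.
# Assumption: VIPs are named "<node>-vip", as in this cluster.
relocated_vips() {
  awk '/^VIP .* is running on node: / {
    vip = $2; node = $NF
    home = vip; sub(/-vip$/, "", home)   # derive the home node from the VIP name
    if (home != node) print vip, node
  }'
}

# Usage: srvctl status nodeapps | relocated_vips
```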

[root@rac1 ~]# srvctl status asm

ASM is running on rac1

[root@rac1 ~]# srvctl status listener -n rac1  # listener status on node rac1

Listener LISTENER is enabled on node(s): rac1

Listener LISTENER is running on node(s): rac1

[root@rac1 ~]# srvctl status asm -n rac1  # ASM status on node rac1

ASM is running on rac1

(3) Stop or start resources (replace stop with start to start them)

[root@rac1 ~]# srvctl stop -h

The SRVCTL stop command stops, Oracle Clusterware enabled, starting or running objects.

Usage: srvctl stop database -d <db_unique_name> [-o <stop_options>] [-f]

Usage: srvctl stop instance -d <db_unique_name> {-n <node_name> | -i <inst_name_list>} [-o <stop_options>] [-f]

Usage: srvctl stop service -d <db_unique_name> [-s "<service_name_list>" [-n <node_name> | -i <inst_name>] ] [-f]

Usage: srvctl stop asm [-n <node_name>] [-o <stop_options>] [-f]

Usage: srvctl stop listener [-l <lsnr_name>] [-n <node_name>] [-f]

[root@rac1 ~]# srvctl stop listener -n rac1  # stop the listener on node rac1

[root@rac1 ~]# srvctl status listener -n rac1

Listener LISTENER is enabled on node(s): rac1

Listener LISTENER is not running on node(s): rac1

[root@rac1 ~]# srvctl stop instance -d oradb -n rac1  # stop a single database instance

[root@rac1 ~]# srvctl status instance -d oradb -n rac1

Instance oradb1 is not running on node rac1

[root@rac1 ~]# srvctl stop database -d oradb  # stop all instances of the database

PRCC-1016 : oradb was already stopped

(4) Enable or disable automatic startup of resources with CRS

srvctl disable(enable) database -d racdb

srvctl disable(enable) instance -d racdb -i rac3

srvctl disable(enable) service -d racdb -s myservice -i rac3

[root@rac1 ~]# srvctl enable database -d oradb

PRCC-1010 : oradb was already enabled

PRCR-1002 : Resource ora.oradb.db is already enabled

[root@rac1 ~]# srvctl enable database -d oradb -n rac1

(5) Add resources to CRS and remove them from it

srvctl add database -d newdb -o $ORACLE_HOME

srvctl add instance -d newdb -n rac1 -i newdb1

srvctl add instance -d newdb -n rac2 -i newdb2

srvctl add service -d newdb -s myservice -r rac3 -a rac4 -P BASIC

srvctl remove instance -d racdb -i rac3

srvctl remove database -d racdb

srvctl remove service -d racdb -s myservice
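Registering a new database follows the pattern above: one `srvctl add database`, then one `srvctl add instance` per node. A minimal sketch that only prints those commands for review before they are run, assuming instances are named `<db_name><n>` in node order as in the newdb example; `gen_register_db` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the srvctl commands that register a database
# and one instance per node. Assumes instance names <db><n> in node order.
# Usage: gen_register_db <db_name> <oracle_home> <node1> [node2 ...]
gen_register_db() {
  db=$1
  home=$2
  shift 2
  echo "srvctl add database -d $db -o $home"
  i=1
  for node in "$@"; do
    echo "srvctl add instance -d $db -n $node -i $db$i"
    i=$((i + 1))
  done
}

# Usage: gen_register_db newdb "$ORACLE_HOME" rac1 rac2
```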