赛题介绍

http://challenge.ai.iqiyi.com/detail?raceId=61600f6cef1b65639cd5eaa6

https://www.datafountain.cn/competitions/551

运行说明【非常重要】

data.zip是比赛原始数据集,wsdm_model_data.zip是比赛提取的特征。- 代码第一部分是EDA,第二部分是提取特征,第三部分是训练模型。建议分开运行。

- 代码需要20G以上内存,需要有GPU,代码训练模型部分使用paddlepaddle搭建。

赛题描述

爱奇艺是中国和世界领先的高品质视频娱乐流媒体平台,每个月有超过5亿的用户在爱奇艺上享受娱乐服务。爱奇艺秉承“悦享品质”的品牌口号,打造涵盖影剧、综艺、动漫在内的专业正版视频内容库,和“随刻”等海量的用户原创内容,为用户提供丰富的专业视频体验。

爱奇艺手机端APP,通过深度学习等最新的AI技术,提升用户个性化的产品体验,更好地让用户享受定制化的娱乐服务。我们用“N日留存分”这一关键指标来衡量用户的满意程度。例如,如果一个用户10月1日的“7日留存分”等于3,代表这个用户接下来的7天里(10月2日~8日),有3天会访问爱奇艺APP。预测用户的留存分是个充满挑战的难题:不同用户本身的偏好、活跃度差异很大,另外用户可支配的娱乐时间、热门内容的流行趋势等其他因素,也有很强的周期性特征。

本次大赛基于爱奇艺APP脱敏和采样后的数据信息,预测用户的7日留存分。参赛队伍需要设计相应的算法进行数据分析和预测。

数据描述

本次比赛提供了丰富的数据集,包含视频数据、用户画像数据、用户启动日志、用户观影和互动行为日志等。针对测试集用户,需要预测每一位用户某一日的“7日留存分”。7日留存分取值范围从0到7,预测结果保留小数点后2位。

评价指标

本次比赛是一个数值预测类问题。评价函数使用: 100 − ( 1 − 1 n ∑ i = 1 n ∣ F i − A i 7 ∣ ) 100-(1-\frac{1}{n}\sum_{i=1}^{n}{|\frac{F_i-A_i}{7}|}) 100−(1−n1∑i=1n∣7Fi−Ai∣)。

n n n是测试集用户数量, F F F是参赛者对用户的7日留存分预测值, A A A是真实的7日留存分真实值。

评审说明

选手的提交应为UTF-8编码的csv文件。文件的格式和顺序需要和测试集保持一致。参见竞赛数据集下载部分“sample-a”。所有预测数据保留小数点后2位有效数字。不符合提交格式的文件被视为无效,并浪费一次提交机会。

本次比赛分为A、B 2个阶段。2个阶段的训练集是一样的,但需要选手预测的测试集不同。

- A阶段截止2022.01.17。A阶段测试集包含15001个需要预测的用户,用于A阶段比赛和排行榜。每个用户提供用户id和end_date日期。选手需要预测这个用户,对应[end_date+1 ~ end_date+7],这未来7天里的7日留存分。

- B阶段从2022.01.17开始,截止2022.01.20。届时系统会重新提供B阶段测试集。B阶段测试集更大,包含35000个需要预测的用户。B阶段使用单独的排行榜,其余细节和A阶段一致。

最后比赛结果以B阶段成绩为准,同时选手需要提交辅助性材料,证明其成绩合法有效。

特别说明

- 爱奇艺AI竞赛平台作为大赛官网,是挑战赛主战场。若参与主赛场比赛,选手需登录大赛官网完成注册报名,并务必在大赛官网主赛场提交预测结果。

- 每支参赛队伍的队伍人数最多5人。

- DataFountain竞赛平台作为2022WSDM用户留存预测挑战赛的练习场,在A榜阶段为参赛选手提供每天额外2次的成绩测试提交机会,助力大家在大赛官网主赛场中取得优异成绩。

- A榜阶段,DataFountain竞赛平台和大赛官网主赛场均可提交预测结果;B榜阶段,请参赛选手前往大赛官网主赛场提交预测结果。该赛题最终排名榜单以大赛官网主赛场发布的结果为准。

数据集解释

1. User portrait data

| Field name | Description |

|---|---|

| user_id | |

| device_type | iOS, Android |

| device_rom | rom of the device |

| device_ram | ram of the device |

| sex | |

| age | |

| education | |

| occupation_status | |

| territory_code |

2. App launch logs

| Field name | Description |

|---|---|

| user_id | |

| date | Desensitization, started from 0 |

| launch_type | spontaneous or launched by other apps & deep-links |

3. Video related data

| Field name | Description |

|---|---|

| item_id | id of the video |

| father_id | album id, if the video is an episode of an album collection |

| cast | a list of actors/actresses |

| duration | video length |

| tag_list | a list of tags |

4. User playback data

| Field name | Description |

|---|---|

| user_id | |

| item_id | |

| playtime | video playback time |

| date | timestamp of the behavior |

5. User interaction data

| Field name | Description |

|---|---|

| user_id | |

| item_id | |

| interact_type | interaction types such as posting comments, etc. |

| date | timestamp of the behavior |

时间线

- 2021.10.15:赛事启动,赛题正式发布,开放赛题数据集,开放组队报名。

- 2021.11.15:开放公开排名榜,参赛者可以提交预测结果。2021.12.20: 报名截止

- 2022.01.17: A阶段停止提交结果,B阶段测试集、排行榜开放。

- 2022.01.20: B阶段停止提交结果

- 2022.01.21: B阶段TOP5团队解释文档停止提交(提交方式稍后公布)

- 2022.01.25: 公布最终成绩

- 2022.02.17: Top 3队伍报告会及奖项颁发

奖项设置

- 冠军队伍: 一支 ($2000)

- 亚军队伍: 一支 ($800)

- 季军队伍: 一支 ($500)

!unzip data.zip

Archive: data.zip

inflating: app_launch_logs.csv

inflating: sample-a.csv

inflating: test-a.csv

inflating: user_interaction_data.csv

inflating: user_playback_data.csv

inflating: user_portrait_data.csv

inflating: video_related_data.csv

import pandas as pd

import numpy as np

from itertools import groupby

%pylab inline

import seaborn as sns

PATH = './'

Populating the interactive namespace from numpy and matplotlib

# 读取数据集

user_interaction = pd.read_csv(PATH + 'user_interaction_data.csv')

user_portrait = pd.read_csv(PATH + 'user_portrait_data.csv')

user_playback = pd.read_csv(PATH + 'user_playback_data.csv')

app_launch = pd.read_csv(PATH + 'app_launch_logs.csv')

video_related = pd.read_csv(PATH + 'video_related_data.csv')

基础字段分析

user_portrait

| Field name | Description |

|---|---|

| user_id | |

| device_type | iOS, Android |

| device_rom | rom of the device |

| device_ram | ram of the device |

| sex | |

| age | |

| education | |

| occupation_status | |

| territory_code |

user_portrait.head(2)

| user_id | device_type | device_ram | device_rom | sex | age | education | occupation_status | territory_code | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 10209854 | 2.0 | 5731 | 109581 | 1.0 | 2.0 | 0.0 | 1.0 | 865101.0 |

| 1 | 10230057 | 2.0 | 1877 | 20888 | 1.0 | 4.0 | 0.0 | 1.0 | 864102.0 |

print(user_portrait.shape)

for col in user_portrait.columns:

print(f'{col} \t {user_portrait.dtypes[col]} {user_portrait[col].nunique()}')

(596906, 9)

user_id int64 596905

device_type float64 4

device_ram object 2049

device_rom object 6217

sex float64 2

age float64 5

education float64 3

occupation_status float64 2

territory_code float64 373

user_portrait['user_id'].value_counts()

10268855 2

10280241 1

10444097 1

10442048 1

10267967 1

..

10037872 1

10080879 1

10082926 1

10076781 1

10485760 1

Name: user_id, Length: 596905, dtype: int64

user_portrait[user_portrait['user_id'] == 10268855]

| user_id | device_type | device_ram | device_rom | sex | age | education | occupation_status | territory_code | |

|---|---|---|---|---|---|---|---|---|---|

| 596800 | 10268855 | 2.0 | NaN | NaN | 1.0 | 3.0 | NaN | NaN | NaN |

| 596801 | 10268855 | 2.0 | NaN | NaN | 1.0 | 3.0 | NaN | NaN | NaN |

有一个用户记录存在重复,考虑剔除。

user_portrait = user_portrait.drop_duplicates()

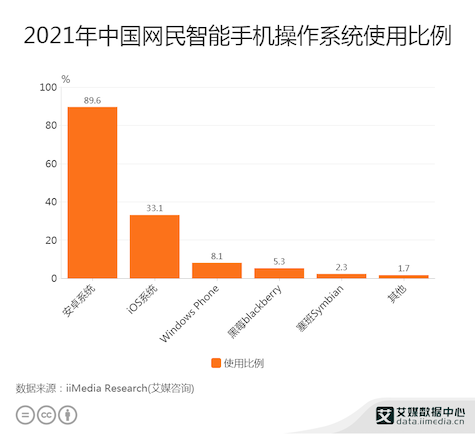

device_type

device_type 为类别类型,根据手机系统占比,猜测2为安卓,1为ios,3为wp,4为未知或其他

user_portrait['device_type'].value_counts()

2.0 480055

1.0 85322

3.0 28909

4.0 2280

Name: device_type, dtype: int64

ram 和 rom

在手机上,ROM用来存放数据,如系统程序,应用程序,音频,视频和文档的,由于视频等存储空间大,所以ROM比RAM大很多,现在主流手机都是8G的空间

RAM又叫运行内存,存放临时程序的,速度要远大于ROM,现在主流手机都是1G的RAM,RAM越大,手机运行越快,玩大型游戏,也就越流畅

# 提取手机信息

user_portrait['device_ram'] = user_portrait['device_ram'].apply(lambda x: str(x).split(';')[0])

user_portrait['device_rom'] = user_portrait['device_rom'].apply(lambda x: str(x).split(';')[0])

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:1: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

"""Entry point for launching an IPython kernel.

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

sns.distplot(user_portrait['device_ram'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f97602fc650>

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-wzt0EGWL-1646533563012)(output_16_1.png)]

sns.distplot(user_portrait['device_rom'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f97602597d0>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-ImigXbza-1646533563012)(output_17_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/C8Z0105882.png)

sex

user_portrait['sex'].value_counts()

1.0 308846

2.0 281612

Name: sex, dtype: int64

age

sns.distplot(user_portrait['age'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f9760069950>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-vMViCO5F-1646533563012)(output_22_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/58Wa6578dP.png)

education

sns.distplot(user_portrait['education'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f0cc1cd5610>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-PPH6tcRx-1646533563013)(output_24_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/405eID9P90.png)

occupation_status

sns.distplot(user_portrait['occupation_status'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f0c793e0d90>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-9q9Ix85I-1646533563013)(output_26_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/0f03V099SL.png)

territory_code

用户常驻地域编号

sns.distplot(user_portrait['territory_code'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f0c791543d0>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-uOlfRaHJ-1646533563013)(output_28_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/0Ob0671cce.png)

app_launch

| Field name | Description |

|---|---|

| user_id | |

| date | Desensitization, started from 0 |

| launch_type | spontaneous or launched by other apps & deep-links |

app_launch.head(2)

| user_id | launch_type | date | |

|---|---|---|---|

| 0 | 10157996 | 0 | 129 |

| 1 | 10139583 | 0 | 129 |

print(app_launch.shape)

for col in app_launch.columns:

print(f'{col} \t {app_launch.dtypes[col]} {app_launch[col].nunique()}')

(8493878, 3)

user_id int64 600000

launch_type int64 2

date int64 123

app_launch['launch_type'].value_counts()

0 8110781

1 383097

Name: launch_type, dtype: int64

app_launch.groupby('user_id')['launch_type'].mean()

user_id

10000000 0.000000

10000001 0.000000

10000002 0.000000

10000003 0.500000

10000004 0.000000

...

10599995 0.166667

10599996 0.000000

10599997 0.000000

10599998 0.000000

10599999 0.000000

Name: launch_type, Length: 600000, dtype: float64

app_launch = app_launch.sort_values(by=['user_id', 'date'])

app_launch.head()

| user_id | launch_type | date | |

|---|---|---|---|

| 4722964 | 10000000 | 0 | 131 |

| 4675122 | 10000000 | 0 | 132 |

| 1150520 | 10000000 | 0 | 141 |

| 4796452 | 10000000 | 0 | 164 |

| 6023992 | 10000000 | 0 | 179 |

video_related

| Field name | Description |

|---|---|

| item_id | id of the video |

| father_id | album id, if the video is an episode of an album collection |

| cast | a list of actors/actresses |

| duration | video length |

| tag_list | a list of tags |

video_related.head(2)

| item_id | duration | father_id | tag_list | cast | |

|---|---|---|---|---|---|

| 0 | 24403453.0 | 6.0 | NaN | 50365080;50338575;50313222;50165986 | NaN |

| 1 | 22838795.0 | 7.0 | NaN | 50001708;50323515;50125414 | NaN |

sns.distplot(video_related['duration'])

<matplotlib.axes._subplots.AxesSubplot at 0x7f0c4f09ab50>

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-SYuibkoc-1646533563014)(output_38_1.png)]](https://file.cfanz.cn/uploads/png/2022/03/11/12/Kda4Z70MY7.png)

user_playback

user_playback.head()

| user_id | item_id | playtime | date | |

|---|---|---|---|---|

| 0 | 10057286 | 20628283.0 | 2208.612 | 145 |

| 1 | 10522615 | 23930557.0 | 31.054 | 145 |

| 2 | 10494028 | 20173699.0 | 115.952 | 145 |

| 3 | 10181987 | 21350426.0 | 1.585 | 145 |

| 4 | 10439175 | 22946929.0 | 51.726 | 145 |

user_interaction

| Field name | Description |

|---|---|

| user_id | |

| item_id | |

| interact_type | interaction types such as posting comments, etc. |

| date | timestamp of the behavior |

user_interaction.head(2)

| user_id | item_id | interact_type | date | |

|---|---|---|---|---|

| 0 | 10243056 | 22635954 | 1 | 213 |

| 1 | 10203565 | 24723827 | 3 | 213 |

探索性数据分析

- app_launch

- 历史一天、三天、一周、一个月、三个月的行为

def count_launch_by_day(day1, day2):

u1 = set(app_launch[app_launch['date'].isin(day1)]['user_id'].unique())

u2 = set(app_launch[app_launch['date'].isin(day2)]['user_id'].unique())

print(len(u1&u2)/len(u1))

count_launch_by_day([131], [132])

0.49971594390170543

app_launch['date'].min(), app_launch['date'].max()

(100, 222)

app_launch[app_launch['user_id'] == 10052988]

| user_id | launch_type | date | |

|---|---|---|---|

| 6547213 | 10052988 | 0 | 147 |

| 2524483 | 10052988 | 0 | 149 |

test_a = pd.read_csv('test-a.csv')

test_a

| user_id | end_date | |

|---|---|---|

| 0 | 10007813 | 205 |

| 1 | 10052988 | 210 |

| 2 | 10279068 | 200 |

| 3 | 10546696 | 216 |

| 4 | 10406659 | 183 |

| ... | ... | ... |

| 14996 | 10355586 | 205 |

| 14997 | 10589773 | 210 |

| 14998 | 10181954 | 218 |

| 14999 | 10544736 | 164 |

| 15000 | 10354569 | 187 |

15001 rows × 2 columns

特征工程

# del user_interaction, user_portrait, user_playback, app_launch, video_related

!mkdir wsdm_model_data

!python3 baseline_feature_engineering.py

mkdir: cannot create directory ‘wsdm_model_data’: File exists

构建模型 + 训练

!unzip data.zip

Archive: data.zip

replace app_launch_logs.csv? [y]es, [n]o, [A]ll, [N]one, [r]ename: ^C

import pandas as pd

import numpy as np

import json

import math

data_dir = "./wsdm_model_data/"

# 处理训练集数据

data = pd.read_csv(data_dir + "train_data.txt", sep="\t")

data["launch_seq"] = data.launch_seq.apply(lambda x: json.loads(x))

data["playtime_seq"] = data.playtime_seq.apply(lambda x: json.loads(x))

data["duration_prefer"] = data.duration_prefer.apply(lambda x: json.loads(x))

data["interact_prefer"] = data.interact_prefer.apply(lambda x: json.loads(x))

# shuffle data

data = data.sample(frac=1).reset_index(drop=True)

data.columns

Index(['user_id', 'end_date', 'label', 'launch_seq', 'playtime_seq',

'duration_prefer', 'father_id_score', 'cast_id_score', 'tag_score',

'device_type', 'device_ram', 'device_rom', 'sex', 'age', 'education',

'occupation_status', 'territory_score', 'interact_prefer'],

dtype='object')

data

| user_id | end_date | label | launch_seq | playtime_seq | duration_prefer | father_id_score | cast_id_score | tag_score | device_type | device_ram | device_rom | sex | age | education | occupation_status | territory_score | interact_prefer | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 10309777 | 165 | 6 | [0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 0, ... | [0, 0, 0, 0, 0, 0, 0.9414, 0, 0, 0.9998, 0.943... | [0.0, 0.0, 0.0, 0.0, 0.08, 0.0, 0.04, 0.0, 0.0... | 1.209317 | 1.353447 | 0.178947 | 0.194954 | -0.740852 | 1.043355 | -0.955892 | -0.319111 | -0.544818 | 0.746096 | 0.167180 | [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, ... |

| 1 | 10117035 | 123 | 0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | 0.194954 | -1.195884 | -1.173106 | -0.955892 | -0.319111 | -0.544818 | -1.340308 | 0.000000 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 2 | 10413843 | 149 | 0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | -2.041925 | -0.637283 | -0.701308 | -0.955892 | -0.319111 | 0.755516 | 0.746096 | -1.106625 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 3 | 10209341 | 165 | 0 | [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0.0475, 0, 0, 0, 0, 0, 0... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | 0.194954 | 0.150032 | -0.117076 | -0.955892 | -0.319111 | -0.544818 | 0.746096 | 0.940850 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 4 | 10430657 | 162 | 0 | [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.0492... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | 0.194954 | 1.012626 | -0.145958 | 1.046141 | 0.000000 | -0.544818 | 0.000000 | -0.743187 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 599996 | 10070331 | 122 | 1 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | 0.194954 | 0.191747 | 1.228884 | -0.955892 | -0.319111 | -0.544818 | 0.746096 | -0.480041 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 599997 | 10056030 | 115 | 2 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0.0, 0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0, ... | -0.299726 | 0.000000 | 0.388082 | 0.194954 | -1.195884 | -0.834187 | 1.046141 | 0.828011 | -0.544818 | -1.340308 | -1.524485 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 599998 | 10235314 | 137 | 0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 1.0, 0.5, 0.0, ... | -0.866054 | 0.000000 | -0.084836 | 0.194954 | 1.020778 | 1.262729 | -0.955892 | -0.319111 | -0.544818 | 0.746096 | 0.838748 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 599999 | 10014483 | 195 | 1 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, ... | 0.288450 | 0.760564 | 0.511767 | 0.194954 | -0.796952 | -0.111235 | -0.955892 | 1.975134 | -0.544818 | 0.746096 | -1.638692 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

| 600000 | 10446094 | 157 | 0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 0.000000 | 0.000000 | 0.000000 | 0.194954 | 0.000000 | -0.857147 | -0.955892 | -0.319111 | -0.544818 | -1.340308 | -0.891480 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |

600001 rows × 18 columns

import paddle

from paddle.io import DataLoader, Dataset

# 定义模型数据集

class CoggleDataset(Dataset):

def __init__(self, df):

super(CoggleDataset, self).__init__()

self.df = df

self.feat_col = list(set(self.df.columns) - set(['user_id', 'end_date', 'label', 'launch_seq', 'playtime_seq',

'duration_prefer', 'interact_prefer']))

self.df_feat = self.df[self.feat_col]

# 定义需要参与训练的字段

def __getitem__(self, index):

launch_seq = self.df['launch_seq'].iloc[index]

playtime_seq = self.df['playtime_seq'].iloc[index]

duration_prefer = self.df['duration_prefer'].iloc[index]

interact_prefer = self.df['interact_prefer'].iloc[index]

feat = self.df_feat.iloc[index].values.astype(np.float32)

launch_seq = paddle.to_tensor(launch_seq).astype(paddle.float32)

playtime_seq = paddle.to_tensor(playtime_seq).astype(paddle.float32)

duration_prefer = paddle.to_tensor(duration_prefer).astype(paddle.float32)

interact_prefer = paddle.to_tensor(interact_prefer).astype(paddle.float32)

feat = paddle.to_tensor(feat).astype(paddle.float32)

label = paddle.to_tensor(self.df['label'].iloc[index]).astype(paddle.float32)

return launch_seq, playtime_seq, duration_prefer, interact_prefer, feat, label

def __len__(self):

return len(self.df)

import paddle

# 定义模型,这里是LSTM + FC

class CoggleModel(paddle.nn.Layer):

def __init__(self):

super(CoggleModel, self).__init__()

# 序列建模

self.launch_seq_gru = paddle.nn.GRU(1, 32)

self.playtime_seq_gru = paddle.nn.GRU(1, 32)

# 全连接层

self.fc1 = paddle.nn.Linear(102, 64)

self.fc2 = paddle.nn.Linear(64, 1)

def forward(self, launch_seq, playtime_seq, duration_prefer, interact_prefer, feat):

launch_seq = launch_seq.reshape((-1, 32, 1))

playtime_seq = playtime_seq.reshape((-1, 32, 1))

launch_seq_feat = self.launch_seq_gru(launch_seq)[0][:, :, 0]

playtime_seq_feat = self.playtime_seq_gru(playtime_seq)[0][:, :, 0]

all_feat = paddle.concat([launch_seq_feat, playtime_seq_feat, duration_prefer, interact_prefer, feat], 1)

all_feat_fc1 = self.fc1(all_feat)

all_feat_fc2 = self.fc2(all_feat_fc1)

return all_feat_fc2

模型训练

from tqdm import tqdm

import warnings

warnings.filterwarnings("ignore")

# 模型训练函数

def train(model, train_loader, optimizer, criterion):

model.train()

train_loss = []

for launch_seq, playtime_seq, duration_prefer, interact_prefer, feat, label in tqdm(train_loader):

pred = model(launch_seq, playtime_seq, duration_prefer, interact_prefer, feat)

loss = criterion(pred, label)

loss.backward()

optimizer.step()

optimizer.clear_grad()

train_loss.append(loss.item())

return np.mean(train_loss)

# 模型验证函数

def validate(model, val_loader, optimizer, criterion):

model.eval()

val_loss = []

for launch_seq, playtime_seq, duration_prefer, interact_prefer, feat, label in tqdm(val_loader):

pred = model(launch_seq, playtime_seq, duration_prefer, interact_prefer, feat)

loss = criterion(pred, label)

loss.backward()

optimizer.step()

optimizer.clear_grad()

val_loss.append(loss.item())

return np.mean(val_loss)

# 模型预测函数

def predict(model, test_loader):

model.eval()

test_pred = []

for launch_seq, playtime_seq, duration_prefer, interact_prefer, feat, label in tqdm(test_loader):

pred = model(launch_seq, playtime_seq, duration_prefer, interact_prefer, feat)

test_pred.append(pred.numpy())

return test_pred

from sklearn.model_selection import StratifiedKFold

# 模型多折训练

skf = StratifiedKFold(n_splits=7)

fold = 0

for tr_idx, val_idx in skf.split(data, data['label']):

train_dataset = CoggleDataset(data.iloc[tr_idx])

val_dataset = CoggleDataset(data.iloc[val_idx])

# 定义模型、损失函数和优化器

model = CoggleModel()

optimizer = paddle.optimizer.Adam(parameters=model.parameters(), learning_rate=0.001)

criterion = paddle.nn.MSELoss()

train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True, num_workers=4)

val_loader = DataLoader(val_dataset, batch_size=32, shuffle=False, num_workers=4)

# 每个epoch训练

for epoch in range(3):

train_loss = train(model, train_loader, optimizer, criterion)

val_loss = validate(model, val_loader, optimizer, criterion)

print(fold, epoch, train_loss, val_loss)

paddle.save(model.state_dict(), f"model_{fold}.pdparams")

fold += 1

W1128 20:18:14.128268 128 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1

W1128 20:18:14.132313 128 device_context.cc:465] device: 0, cuDNN Version: 7.6.

1%| | 131/16072 [00:05<09:05, 29.24it/s]

模型预测

test_data = pd.read_csv(data_dir + "test_data.txt", sep="\t")

test_data["launch_seq"] = test_data.launch_seq.apply(lambda x: json.loads(x))

test_data["playtime_seq"] = test_data.playtime_seq.apply(lambda x: json.loads(x))

test_data["duration_prefer"] = test_data.duration_prefer.apply(lambda x: json.loads(x))

test_data["interact_prefer"] = test_data.interact_prefer.apply(lambda x: json.loads(x))

test_data['label'] = 0

test_dataset = CoggleDataset(test_data)

test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False, num_workers=4)

test_pred_fold = np.zeros(test_data.shape[0])

# 模型多折预测

for idx in range(7):

model = CoggleModel()

layer_state_dict = paddle.load(f"model_{idx}.pdparams")

model.set_state_dict(layer_state_dict)

model.eval()

test_pred = predict(model, test_loader)

test_pred = np.vstack(test_pred)

test_pred_fold += test_pred[:, 0]

test_pred_fold /= 7

100%|██████████| 235/235 [00:02<00:00, 98.58it/s]

100%|██████████| 235/235 [00:02<00:00, 79.41it/s]

100%|██████████| 235/235 [00:02<00:00, 78.44it/s]

100%|██████████| 235/235 [00:02<00:00, 78.63it/s]

100%|██████████| 235/235 [00:03<00:00, 77.96it/s]

100%|██████████| 235/235 [00:02<00:00, 78.47it/s]

100%|██████████| 235/235 [00:03<00:00, 77.44it/s]

test_data["prediction"] = test_pred[:, 0]

test_data = test_data[["user_id", "prediction"]]

# can clip outputs to [0, 7] or use other tricks

test_data.to_csv("./baseline_submission.csv", index=False, header=False, float_format="%.2f")

总结

- 本项目基于已有的比赛的数据,构建时序模型,对用户的留存进行预测。

- 与原有的keras代码相比,本项目将特征工程与构建模型进行拆分,更加适合迭代。

- 本项目在模型加入了序列建模,可以使用LSTM或GRU等。

改进思路

- 用户行为特征可以加入注意力机制。

- 视频行为 与 用户行为可以进行交叉,参考DeepFM。