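Preface: I very much agree with the three rules of technology proposed by the British science-fiction writer Douglas Adams:

1) Any technology that already existed when I was born is ordinary, simply part of the natural order of the world.

2) Any technology invented while I am between 15 and 35 is a revolutionary product that will change the world.

3) Any technology invented after I turn 35 is against the laws of nature and deserves divine punishment.

Over the years, partly because my technical skills were limited and partly because I had to make a living, I went from enterprise IT systems to dabbling in technologies old and new, only to find that all of it was far from what I actually wanted to do. I worked in Japan for five years and saw some lab-grade virtual reality projects there, which left me with mixed feelings: that circle is far out of my reach, yet I still long for it. So I decided to chew on a peripheral technology and improve my skills across the board along the way, and that peripheral technology is cloud native. "Any technology invented while I am between 15 and 35 is a revolutionary product that will change the world": I could not agree more. The future is not far off, and I believe new technology will fundamentally change productivity in the coming years. Whatever the change, the technology behind it boils down to two layers: unlimited computing power plus revolutionary algorithms. Computing power supports the algorithms, the algorithms discover new rules, and the impact of those new rules runs deep... In short, while I am still young I want to explore new things and broaden my horizons, so as to embrace the future.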

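Introduction to Kubernetes

Kubernetes is an open-source container orchestration system written in Go, distilled from more than a decade of Google's internal experience orchestrating containers. It was also the first project hosted by the CNCF; incidentally, the second was Prometheus, the cloud-native monitoring system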

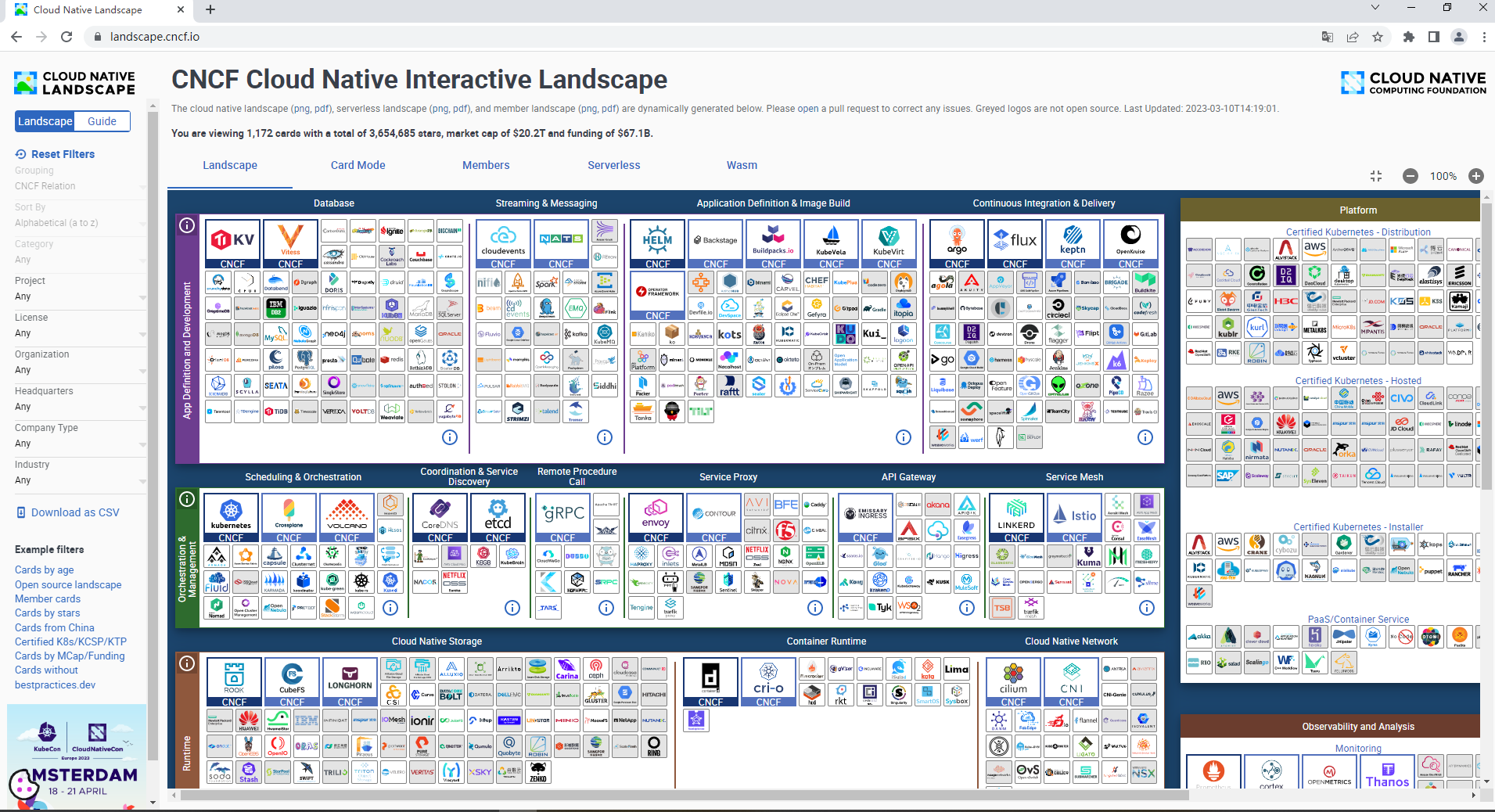

#CNCF landscape (official site)

landscape.cncf.io

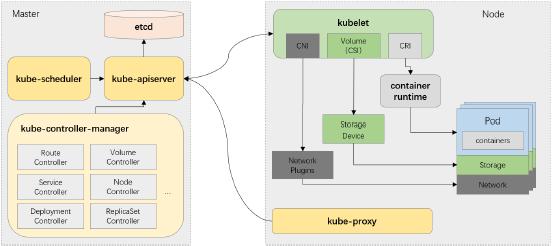

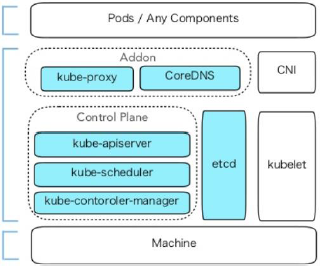

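Kubernetes Cluster Architecture

Kubernetes has a typical two-tier server-client architecture

- The Master consists mainly of three components, API Server, Controller-Manager and Scheduler, plus the etcd storage service that holds cluster state; together they form the control plane of the whole cluster

- Each Node mainly runs three components, Kubelet, Kube Proxy and a container runtime (Docker being the most commonly used implementation); the nodes host and run the application containers

Compute and storage interfaces are exposed through the API Server on the Master. A client submits a request to run an application through the API, and the Master then uses its scheduling algorithm to assign the workload automatically to a specific worker node, where it runs as a Pod object. The Master also handles automatically the effect on Pods of worker nodes being added, failing or being removed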

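Master: control-plane node

- API Server

  - The API gateway of the whole cluster; the program is kube-apiserver

  - Served over http/https in REST style; almost every function is abstracted as a "resource" and its related "objects"

  - Declarative API: the user only declares the "desired end state" of an object, and the Controller for that resource carries out the actual logic

  - Stateless; all data is stored in etcd

- Cluster Store

  - The storage system for cluster state data, which in practice means etcd

  - Interacts only with the API Server

- Controller-Manager

  - Realizes the end states that clients declare through the API; the program is kube-controller-manager

  - Its code drives the "actual state" of each API object, step by step, toward or equal to the "desired state"

  - Works as a control loop

- Scheduler

  - The scheduler picks the most suitable node (as evaluated at that moment) for each Pod to run on

  - The program is kube-scheduler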
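To make "picks the most suitable node as evaluated at that moment" a little more concrete, here is a toy filter-then-score pass in shell. It is only a sketch under loud assumptions: the node names and CPU figures are made up, free CPU is the only resource considered, and the real kube-scheduler uses a much richer set of filter and score plugins.

```shell
#!/usr/bin/env bash
# Toy filter-then-score scheduling pass (illustrative only, not kube-scheduler code).
# Each node is passed as "name:free_millicpu"; all names and numbers are hypothetical.

pick_node() {
  local request=$1 best="" best_free=-1 entry name free
  shift
  for entry in "$@"; do
    name=${entry%%:*}
    free=${entry##*:}
    # Filter: skip nodes that cannot fit the requested CPU.
    [ "$free" -ge "$request" ] || continue
    # Score: prefer the node with the most free CPU.
    if [ "$free" -gt "$best_free" ]; then
      best=$name
      best_free=$free
    fi
  done
  # Print the winner; print nothing (and return non-zero) if no node fits.
  [ -n "$best" ] && printf '%s\n' "$best"
}

pick_node 500 node01:300 node02:1200 node03:800
```

The call at the end asks for 500 millicores: node01 is filtered out, and node02 wins on score. A Pod for which no node passes the filter stays unscheduled, which corresponds to the Pending state in a real cluster.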

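Worker Node: worker node

- Kubelet

  - The agent of the Kubernetes cluster on each worker node; the program is kubelet

  - Receives and executes instructions from the Master, and manages the containers of the Pod objects that the Scheduler has bound to this node

  - Receives Pod definitions through the API Server, or loads static Pod manifests from a local directory on the node

  - Manages and monitors the Pods' containers through a CRI-compatible container runtime

- Kube Proxy

  - Runs on every worker node and is dedicated to turning Service definitions into a node-local implementation

    - iptables mode: translates Service definitions into iptables rules as seen from the current node

    - ipvs mode: translates Service definitions into ipvs rules, plus a small number of iptables rules, as seen from the current node

    With few Services, iptables mode is simpler to set up than ipvs. The rules it generates are redundant, though, and as the number of Services grows the iptables rule set becomes very large, with a performance cost that cannot be ignored. ipvs mode drastically reduces the number of such rules and performs better at that scale

  - It is the key piece that connects the Pod network with the Service network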

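Kubernetes Add-ons (as distinct from plugins, an add-on can run on its own and does not depend on the application)

- Applications that extend the functionality of a Kubernetes cluster, usually hosted on the cluster itself as Pods

- Required add-ons

  - Network Plugin: provides a dedicated communication network for Pods through the CNI interface; there are many implementations

    Because there are so many network plugins, choosing one is an important responsibility of a cloud-native engineer

    Flannel, Project Calico, Cilium, Weave Net, ...

  - Cluster DNS: the cluster DNS server, responsible for service registration, discovery and name resolution; the current implementation is CoreDNS

    SkyDNS, KubeDNS, CoreDNS, ...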

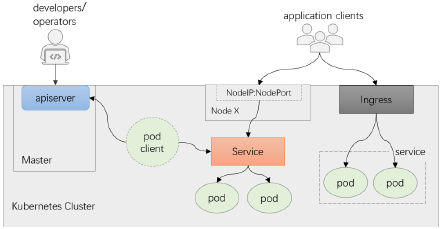

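- Important add-ons

  - Ingress Controller: implements Ingress resources, providing layer-7 routing and traffic scheduling for http/https; there are many implementations

    Ingress Nginx, Traefik, Contour, Kong, Gloo, APISIX Ingress, ...

  - Dashboard: a web-based UI

    Kubernetes Dashboard, Kuboard, ...

  - Metrics and monitoring: Prometheus

  - Logging: ELK, PLG and so on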

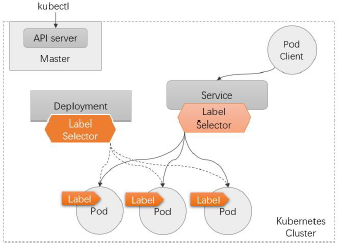

Basic Working Logic of Application Orchestration in Kubernetes

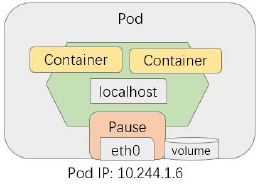

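Kubernetes is essentially "application-centric" modern application infrastructure, and the Pod is its smallest logical unit for running and scheduling applications

- In essence, a Pod is a set of containers that share the Network, IPC and UTS namespaces as well as storage resources

- Think of it as a physical or virtual machine, with each container being a process on that host

- The containers share the network stack, network devices, routes, IP address and ports, while the Mount, PID and USER namespaces remain isolated

  The file system, process and user namespaces are isolated by default; sharing the PID namespace has to be declared explicitly

- A "Volume" can be attached to each Pod as external storage for this "host"; it is independent of the Pod's lifecycle and can be shared by the containers in the Pod

- Pods model "immutable infrastructure": once deleted, a Pod can be rebuilt from its resource manifest

  - In production, Pods are updated by deleting and recreating them, which leaves a usable history of changes and makes it easier to trace back state

  - Pods are dynamic and tolerate anomalies such as accidental deletion or host failure

  - Volumes ensure that data can outlive the Pod

- By design, only applications with a "super-intimate" relationship should run as separate containers inside the same Pod

- All containers in a Pod share the namespaces held by the pause container. As its name suggests, this container stays paused and consumes almost no resources; its sole purpose is to provide the namespaces that the containers joining the Pod need in order to work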

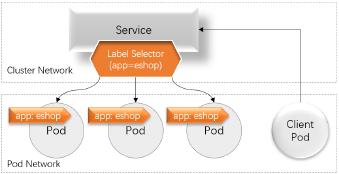

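Why the Service Resource Exists

- Pods are dynamic, and a Pod gets a new IP address when it is rebuilt from its manifest, so a service discovery mechanism is needed

- Kubernetes uses the Service resource together with the DNS service (CoreDNS) for service discovery

- A Service load-balances across a group of Pods that provide the same service; its IP address (the Service IP, also called the Cluster IP) is the entry point for client traffic

- A Service object exists on every node of the cluster, so it is not lost when an individual node fails, and it gives the Pods a fixed front end

- A Service uses a Label Selector to filter and match the Labels on Pod objects, and discovers Pods that way

- Only Pods carrying labels that satisfy the selector are picked up as backends of the Service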
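The selection rule in the last two points can be sketched in a few lines of shell. This is a toy model of equality-based selectors only; the Pod names and labels are hypothetical, and real selectors are evaluated by Kubernetes itself, not by a script like this.

```shell
#!/usr/bin/env bash
# Toy model of equality-based label selection (not Kubernetes code).
# Selector and labels are comma-separated key=value lists; all values are hypothetical.

matches_selector() {
  local selector=$1 labels=$2 want
  local IFS=,
  for want in $selector; do
    # Every key=value pair of the selector must be present in the Pod's labels.
    case ",$labels," in
      *",$want,"*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}

for pod in "web-1 app=demo,tier=frontend" "web-2 app=demo,tier=backend" "db-1 app=db"; do
  name=${pod%% *}
  labels=${pod#* }
  if matches_selector "app=demo,tier=frontend" "$labels"; then
    echo "$name selected"
  fi
done
```

Only web-1 carries both labels the selector asks for, so it is the only Pod reported. Extra labels on a Pod do not prevent a match; a missing or different value does.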

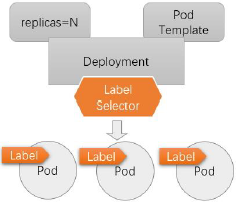

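Pods and Workload Controllers

- The Pod is the atomic unit for running applications. Its lifecycle management and health monitoring are handled by the kubelet, while orchestration functions such as updates, scaling and rebuilding are implemented by dedicated controllers, known as workload controllers

  #A workload controller is used by creating a resource of the same name

  ReplicaSet and Deployment - orchestrate stateless applications

  DaemonSet - orchestrates system-level applications

  StatefulSet - orchestrates stateful applications

  Job and CronJob - orchestrate one-off and periodic task applications

- Workload controllers also use label selectors to match Pod labels and so associate themselves with Pods

- What workload controllers focus on

  - Making sure the number of selected Pods exactly matches the desired count

    - When there are too few, create more from the Pod template; when there are too many, destroy the surplus

  - Scaling out and in according to the configuration

  - Updating the application according to the configured strategy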
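The "create when short, destroy when over" behaviour above is a reconciliation loop. The sketch below imitates a single controller converging on a replica count; it is purely illustrative (plain counters, no API calls) and is not how kube-controller-manager is implemented.

```shell
#!/usr/bin/env bash
# Toy reconciliation loop for a replica count (illustrative only).

reconcile() {
  local desired=$1 actual=$2
  while [ "$actual" -ne "$desired" ]; do
    if [ "$actual" -lt "$desired" ]; then
      # Too few Pods: create one from the Pod template.
      actual=$(( actual + 1 ))
      echo "create pod from template (now $actual/$desired)"
    else
      # Too many Pods: remove a surplus one.
      actual=$(( actual - 1 ))
      echo "delete surplus pod (now $actual/$desired)"
    fi
  done
  echo "in sync at $actual replicas"
}

reconcile 3 1
```

Running `reconcile 3 1` creates two Pods and then reports that it is in sync; `reconcile 2 4` would delete two instead. A real controller re-runs this comparison whenever the observed state changes, which is why an accidentally deleted Pod comes back.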

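Deploying an application

- Pick the workload controller type that fits the orchestration requirements

- Create the workload controller object, which makes sure the right number of Pod objects is running

- Create a Service object to give that group of Pods a fixed access entry point

Accessing the service behind a Service object

- Traffic inside the cluster is called east-west traffic; the client is itself a Pod object in the cluster

- Traffic between a Service and clients outside the cluster is called north-south traffic; the client is a process outside the cluster

- Pods in the cluster may also talk to service processes outside the cluster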

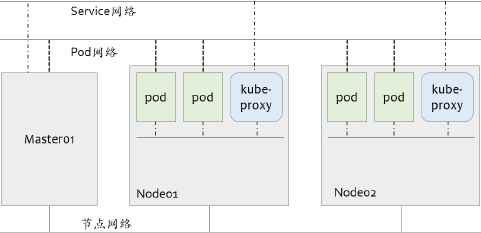

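Kubernetes Networking Basics

The Kubernetes network model

- A Kubernetes cluster has three networks, used for nodes, Pods and Services respectively

  - They meet on the worker nodes

    The kernel on each worker exposes routes for all three networks, which is what lets them communicate with one another

    The node network has to be defined by us; the Pod network is created by a CNI plugin and the user only specifies its CIDR; the Service network is managed by Kubernetes itself and again the user only specifies its CIDR

  - The routing module in the node kernel, together with iptables/netfilter and ipvs, forwards traffic between the networks

    #How traffic from inside the cluster reaches a Pod

    a host on the cluster's node network -> the host forwards to the Service network -> the Service network distributes the traffic -> Pod network

    #How traffic from outside the cluster reaches a Pod

    External traffic first reaches a host on the node network; that host forwards it to the Service network, which distributes it and finally delivers it to the Pod network:

    outside the cluster -> a host on the cluster's node network -> the host forwards to the Service network -> the Service network distributes the traffic -> Pod network

- Node network

  - The network the cluster nodes use to talk to each other; it also provides connectivity to endpoints outside the cluster

    Master and Node hosts are connected through their network interfaces. If no router sits between them they share a single layer-2 network; otherwise they communicate over layer 3 across separate layer-2 segments. How many nodes and how many interfaces these networks contain has to be planned in advance, before the Kubernetes cluster is deployed

  - The network and each node's address must be configured before Kubernetes is deployed. They are not managed by Kubernetes, so the administrator sets them up manually or through the host virtualization manager

- Pod network

  - The network provided for the Pod objects in the cluster

    It exists between Pods; the virtual network interface of each Pod has its own address. The default address range differs depending on the network plugin

    For example:

    With Flannel, the default Pod network is 10.244.0.0/16

    With Project Calico, the default Pod network is 192.168.0.0/16

  - A virtual network implemented by a CNI network plugin, for example Flannel, Calico or Cilium

- Service network

  - Specified when the Kubernetes cluster is deployed; the addresses used by Service objects are allocated from it

    The Service network and the node network are different networks.

    The Service network is not configured on any node interface. Some deployment tools, kubeadm among them, default it to 10.96.0.0/12

  - The IP address of a Service object exists only in the iptables or ipvs rules generated for it

  - Managed by the Kubernetes cluster itself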

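Communication traffic in a Kubernetes cluster

- There are four main kinds of traffic in a Kubernetes network

  - Between containers in the same Pod - over the loopback interface

  - Between Pods

  - Between Pods and Services

  - Between traffic from outside the cluster and Services

  #Pods can talk to each other directly, so why route traffic through a Service?

  Pods can be deleted and rebuilt at any time, so their addresses are dynamic. To find Pods there has to be a registry for them and a way to discover them, and the Service takes care of both; when several Pods back a service, the Service also load-balances across them automatically

- The Pod network is provided by a third-party network plugin that complies with the CNI specification. These plugins must meet the following requirements

  - All Pods can communicate with each other directly, without NAT

  - All nodes can communicate with all Pods directly, without NAT

  - All Pod objects sit in the same flat network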

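Deploying a Kubernetes Cluster

How the Kubernetes cluster components run

- Standalone-component mode

  - Apart from the add-ons, each key component is deployed on the nodes as a binary and runs as a daemon

  - The add-ons run as Pods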

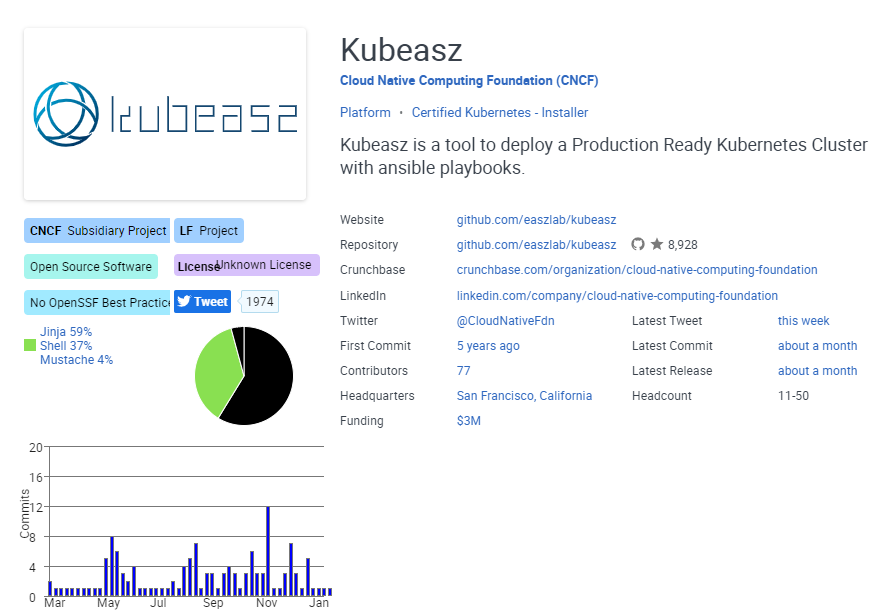

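As the figure shows, every component has to be deployed on its own and each node needs its own set of components installed; communication between the components requires a large number of certificates, which makes this mode quite complex

#Kubeasz, an Ansible-based installer developed in China, deploys clusters in standalone-component mode; it is listed in the CNCF landscape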

https://github.com/easzlab/kubeasz

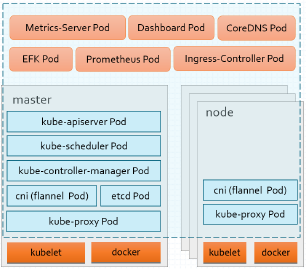

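- Static Pod mode

  - The control-plane components run as static Pod objects on the Master hosts

  - kubelet and docker are deployed as binaries and run as daemons

  - kube-proxy and the like run as Pods

  - Image registry for the Kubernetes components: registry.k8s.io

  kubeadm, the deployment tool maintained by the Kubernetes project, uses exactly this static Pod mode

kubeadm

- The cluster bootstrapping tool provided by the Kubernetes community

  - Performs the basic steps needed to build a minimum viable cluster and bring it up

  - Manages the full lifecycle of a Kubernetes cluster: deployment, upgrade/downgrade and teardown

- kubeadm only cares about initializing and bringing up a cluster; its responsibility is limited to the shaded part of the figure below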

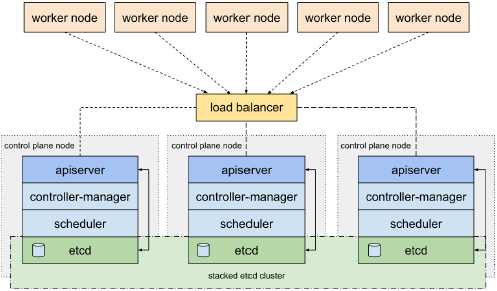

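- Control-plane high-availability topologies supported by kubeadm

  - kubeadm HA topology - stacked etcd - suitable for small and medium clusters

    In stacked mode, the apiserver on each control-plane node talks only to the etcd member on the same node, and an etcd member runs on every control-plane node

    Across the control-plane nodes, the controller-manager and scheduler instances each form their own group and run in Active/Passive mode; the instance that is doing the work holds a mutual-exclusion lock

    The etcd cluster uses the Raft protocol, which plays a role similar to ZooKeeper's ZAB protocol; it is often described as a simpler alternative to Paxos, the classic distributed consensus protocol

    ZAB (ZooKeeper Atomic Broadcast) is an atomic broadcast protocol with crash recovery, designed specifically for the distributed coordination service ZooKeeper. ZooKeeper relies on ZAB for distributed data consistency, and on top of it implements a primary-backup architecture that keeps the replicas in the cluster consistent.

    A leader election is triggered when the leader node goes down

    Note: the experiments in the following posts all use the stacked etcd topology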
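Since etcd relies on Raft majorities, the number of control-plane nodes determines how many failures a stacked etcd cluster survives. The small calculation below shows the standard majority-quorum arithmetic; the member counts in the loop are just sample inputs.

```shell
#!/usr/bin/env bash
# Majority quorum arithmetic for an etcd (Raft) cluster of n members.

quorum() {
  # Smallest number of members that forms a majority.
  echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
  # Members that may fail while a majority is still available.
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 4 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Three members tolerate one failure and five tolerate two, while a fourth member adds no extra tolerance over three. That is the usual reason for running an odd number of control-plane nodes, such as the three masters planned in this series.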

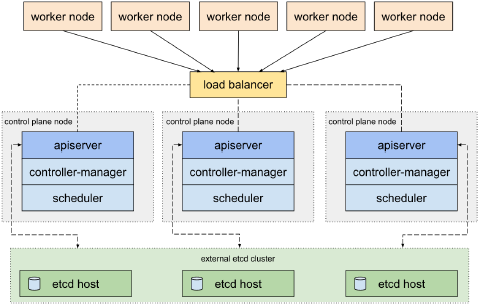

- kubeadm HA topology - external etcd - suitable for large clusters

In external etcd mode, etcd runs on its own dedicated hosts

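Deployment prerequisites

- Prerequisites for deploying a Kubernetes cluster with kubeadm

  - Linux hosts that can run Kubernetes, for example Debian, RedHat and their derivatives

  - At least 2 GB of memory and at least 2 CPUs per host

  - Unobstructed network connectivity between all hosts

  - A unique hostname, MAC address and product_uuid per host, with hostnames that resolve correctly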

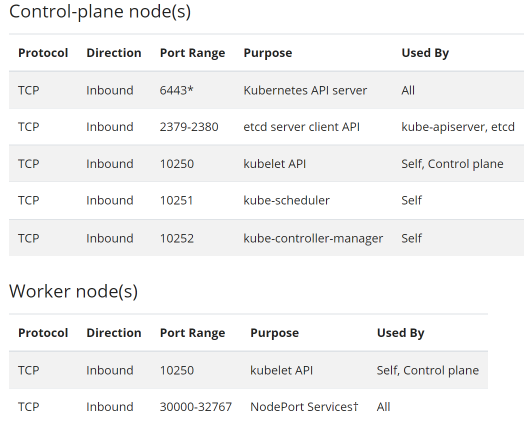

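  - Open the ports used by Kubernetes, or simply disable the iptables firewall

  - Disable swap devices on every host

  - Prepare a proxy service for reaching registry.k8s.io, or obtain the images by the method suggested during deployment

Ports that must be open for the cluster deployment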
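Some of the prerequisites above (memory, CPU count, swap) are easy to check with a script before running kubeadm. The following is a minimal sketch, not a replacement for kubeadm's own pre-flight checks. The function names are made up, and the default memory threshold is an assumption: a nominal 2 GB machine reports slightly less than 2 GiB in MemTotal, so the default leaves some headroom and can be overridden through the hypothetical MIN_MEM_KB variable.

```shell
#!/usr/bin/env bash
# Minimal pre-flight sketch for memory, CPU count and swap (illustrative only).

check_host() {
  # Arguments: total memory in kB, number of CPUs, number of active swap devices.
  local mem_kb=$1 cpus=$2 swap_devices=$3
  local min_mem_kb=${MIN_MEM_KB:-1700000}   # assumed threshold, see the note above
  local failed=0
  if [ "$mem_kb" -lt "$min_mem_kb" ]; then
    echo "FAIL: memory ${mem_kb} kB is below ${min_mem_kb} kB"; failed=1
  fi
  if [ "$cpus" -lt 2 ]; then
    echo "FAIL: ${cpus} CPU(s), need at least 2"; failed=1
  fi
  if [ "$swap_devices" -gt 0 ]; then
    echo "FAIL: ${swap_devices} active swap device(s)"; failed=1
  fi
  [ "$failed" -eq 0 ] && echo "OK: host meets the basic prerequisites"
  return "$failed"
}

check_this_host() {
  # Collect the real values on a Linux host; /proc/swaps has one header line.
  check_host "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" \
             "$(nproc)" \
             "$(( $(wc -l < /proc/swaps) - 1 ))"
}

# Example with fixed sample values; call check_this_host on a real node instead.
check_host 4000000 2 0
```

Hostname, MAC address and product_uuid uniqueness still have to be compared across hosts by hand, since a single host cannot verify them on its own.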

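Deploying Kubernetes v1.26

- Planned host environment

OS: Ubuntu 22.04 LTS

Docker: the latest version is fine

Kubernetes: v1.26 - upstream publishes roughly three minor releases a year

Network environment:

Node network: 192.168.11.0/24

Pod network: 10.244.0.0/16

Service network: 10.96.0.0/12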
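The three networks planned above must not overlap, otherwise routing between nodes, Pods and Services becomes ambiguous. The sketch below checks a set of IPv4 CIDRs for overlap using plain shell arithmetic; the function names are made up for this example and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Check that the planned node, Pod and Service networks do not overlap (IPv4 only).

ip_to_int() {
  local IFS=.
  set -- $1                         # split the dotted quad into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

cidr_range() {
  # Prints "first last": the lowest and highest address of the CIDR as integers.
  local base size first
  base=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  first=$(( base / size * size ))
  echo "$first $(( first + size - 1 ))"
}

cidrs_overlap() {
  # After set: $1 $2 are the first range, $3 $4 the second range.
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

for pair in "192.168.11.0/24 10.244.0.0/16" \
            "192.168.11.0/24 10.96.0.0/12" \
            "10.244.0.0/16 10.96.0.0/12"; do
  a=${pair% *}
  b=${pair#* }
  if cidrs_overlap "$a" "$b"; then
    echo "overlap: $a and $b"
  else
    echo "ok: $a and $b"
  fi
done
```

With the values planned here, all three pairs are reported as "ok". Note that 10.96.0.0/12 spans 10.96.0.0 through 10.111.255.255, so a Pod network such as 10.100.0.0/16 would collide with it.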

-----------------------------------------------------------------------------------------

IP address        Hostname                                    Role
192.168.11.253    Kubeapi.mooreyxia.com
192.168.11.211    K8s-master01.mooreyxia.com, K8s-master01    Master
192.168.11.212    K8s-master02.mooreyxia.com, K8s-master02    Master
192.168.11.213    K8s-master03.mooreyxia.com, K8s-master03    Master
192.168.11.214    K8s-node01.mooreyxia.com, K8s-node01        Worker
192.168.11.215    K8s-node02.mooreyxia.com, K8s-node02        Worker
192.168.11.216    K8s-node03.mooreyxia.com, K8s-node03        Worker

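- Cluster deployment steps

  - Verify that all prerequisites are met

  - Install a container runtime on every node

  - Install kubeadm, kubelet and kubectl on every node

  - Create the cluster

    - On the first control-plane node, bring up the control plane with kubeadm init

      - This generates a token used to authenticate nodes that join later

    - Join the other control-plane nodes to the control plane with kubeadm join (optional in a lab environment)

      - Hint: the CA and the API Server certificates have to be obtained from the first control-plane node beforehand

    - Join each worker node to the cluster with kubeadm join

  - Confirm that every node reaches the "Ready" state

- Deployment example

  - Build a k8s template host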

[root@k8s-template ~]#apt update

#Time synchronization

[root@k8s-template ~]#apt install chrony -y

[root@k8s-template ~]#systemctl enable --now chrony.service

#Disable swap devices

[root@k8s-template ~]#swapoff -a

[root@k8s-template ~]#vim /etc/fstab

[root@k8s-template ~]#cat /etc/fstab |grep swap

#/swap.img none swap sw 0 0

[root@k8s-template ~]#reboot

#Check whether any swap unit is still active; if so, disable it with "systemctl mask <unit>"

[root@k8s-template ~]#systemctl --type swap

UNIT LOAD ACTIVE SUB DESCRIPTION

0 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use 'systemctl list-unit-files'.
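The fstab edit above was done by hand in vim. On several hosts it may be handier to script it; the following sketch comments out swap entries with GNU sed (as shipped on Ubuntu). The function name is made up, and the demo runs against a temporary file so that nothing on the system is changed.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file (GNU sed assumed).

disable_swap_entries() {
  local fstab=${1:-/etc/fstab}
  # Prefix every not-yet-commented line that has a "swap" field with '#'.
  sed -ri 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Demo on a throwaway copy; on a real host run: swapoff -a && disable_swap_entries
demo=$(mktemp)
printf '%s\n' '/dev/sda1 / ext4 defaults 0 1' '/swap.img none swap sw 0 0' > "$demo"
disable_swap_entries "$demo"
cat "$demo"
rm -f "$demo"
```

The demo leaves the root file system line untouched and turns the swap line into `#/swap.img none swap sw 0 0`, the same result as the manual edit shown earlier. Running the function twice is harmless because commented lines are skipped.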

#Disable the firewall service

(omitted)

#Install and start Docker - following the official Alibaba Cloud mirror documentation

#Ubuntu

# Step 1: install the required system tools

[root@k8s-template ~]#sudo apt-get update

[root@k8s-template ~]#sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Step 2: install the GPG key

[root@k8s-template ~]#curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the package repository

[root@k8s-template ~]#sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker-CE

[root@k8s-template ~]#sudo apt-get -y update

[root@k8s-template ~]#sudo apt-get -y install docker-ce

[root@k8s-template ~]#systemctl enable --now docker

#Configure Docker registry mirrors to speed up image pulls

[root@k8s-template ~]#echo '{"registry-mirrors": ["https://registry.docker-cn.com", "http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"]}' > /etc/docker/daemon.json

[root@k8s-template ~]#systemctl restart docker

[root@k8s-template ~]#docker info

#Configure a proxy for Docker (optional)

Edit /lib/systemd/system/docker.service and add lines like the following to the [Service] section; replace PROXY_SERVER_IP and PROXY_PORT with the actual values.

Environment="HTTP_PROXY=http://$PROXY_SERVER_IP:$PROXY_PORT"

Environment="HTTPS_PROXY=https://$PROXY_SERVER_IP:$PROXY_PORT"

Environment="NO_PROXY=127.0.0.0/8,172.17.0.0/16,172.29.0.0/16,10.244.0.0/16,192.168.0.0/16,mooreyxia.com,cluster.local"

After the change, reload systemd and restart the docker service (systemctl daemon-reload, then systemctl restart docker).

#Install cri-dockerd

[root@k8s-template ~]#curl -LO https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.0/cri-dockerd_0.3.0.3-0.ubuntu-focal_amd64.deb

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 18.7M 100 18.7M 0 0 5296k 0 0:00:03 0:00:03 --:--:-- 7134k

[root@k8s-template ~]#ls

cri-dockerd_0.3.0.3-0.ubuntu-focal_amd64.deb

[root@k8s-template ~]#dpkg -i cri-dockerd_0.3.0.3-0.ubuntu-focal_amd64.deb

[root@k8s-template ~]#systemctl status cri-docker.service

● cri-docker.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/cri-docker.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2023-03-11 15:41:12 UTC; 35s ago

...

#Install kubelet, kubeadm and kubectl

#First, set up the package repository for kubelet, kubeadm and related packages on every host

[root@k8s-template ~]# apt install -y apt-transport-https curl

[root@k8s-template ~]#curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

[root@k8s-template ~]#cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

> deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

> EOF

[root@k8s-template ~]#apt update

#Next, install the kubelet, kubeadm and kubectl packages on every host and enable kubelet to start at boot

[root@k8s-template ~]#apt install -y kubelet kubeadm kubectl

[root@k8s-template ~]# systemctl enable --now kubelet

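#After installation, check the version of kubeadm and the other programs; this is the version number that has to be specified explicitly when initializing the Kubernetes cluster later.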

[root@k8s-template ~]#kubelet --version

[root@k8s-template ~]#kubeadm version

[root@k8s-template ~]#kubectl version

#Integrate kubelet with cri-dockerd

#kubelet only speaks CRI, so it needs cri-dockerd, which implements that specification, to work with docker-ce.

#Configure cri-dockerd

[root@k8s-template ~]#vim /usr/lib/systemd/system/cri-docker.service

[root@k8s-template ~]#cat /usr/lib/systemd/system/cri-docker.service|grep ExecStart

#ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

[root@k8s-template ~]#systemctl daemon-reload && systemctl restart cri-docker.service

#Configure kubelet with the path of the Unix socket that cri-dockerd opens locally; the default path is usually "/run/cri-dockerd.sock"

#Hint: if the /etc/sysconfig directory does not exist, create it first.

[root@k8s-template ~]#mkdir -p /etc/sysconfig/

[root@k8s-template ~]#touch /etc/sysconfig/kubelet

[root@k8s-template ~]#cat <<EOF >/etc/sysconfig/kubelet

> KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

> EOF

[root@k8s-template ~]#cat /etc/sysconfig/kubelet

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

The template is now complete; the cluster nodes can be created from it

- Prepare the planned host environment

IP address        Hostname                                                           Role
192.168.11.211    K8s-master01.mooreyxia.com, K8s-master01, kubeapi.mooreyxia.com    Kubeapi
192.168.11.212    K8s-master02.mooreyxia.com, K8s-master02                           Master
192.168.11.213    K8s-master03.mooreyxia.com, K8s-master03                           Master
192.168.11.214    K8s-node01.mooreyxia.com, K8s-node01                               Worker
192.168.11.215    K8s-node02.mooreyxia.com, K8s-node02                               Worker
192.168.11.216    K8s-node03.mooreyxia.com, K8s-node03                               Worker

------------------------------------------------------------------------------

#Synchronize time on every node and confirm that swap is off

[root@k8s-template ~]#systemctl restart chrony && date

[root@k8s-template ~]#swapoff -a && systemctl --type swap

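- Hostname resolution

  - To keep the setup simple, this test environment resolves node names through a small DNS zone served from master01 (a hosts file on every node would work as well). The kubeapi hostname is used as the dedicated access name for the API Server in a highly available environment, which also leaves room for configuring control-plane high availability later.

[root@K8s-master01 ~]#hostname

K8s-master01.mooreyxia.com

#Configure a kubeapi hostname as the dedicated access name for the API Server in a highly available environment

-----------------------

#Note: 192.168.11.253 is a second address configured on the first master node, k8s-master01. In a highly available master setup it can serve as a VIP managed by keepalived. To simplify the initial deployment in this example, it is configured directly on k8s-master01 as a secondary IP address.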

-----------------------

[root@K8s-master01 ~]#cat /etc/netplan/01-netcfg.yaml

# This file describes the network interfaces available on your system
# For more information, see netplan(5).
network:
  version: 2
  renderer: networkd
  ethernets:
    eth0:
      addresses:
      - 192.168.11.211/24
      - 192.168.11.253/24
      gateway4: 192.168.11.1
      nameservers:
        search: [mooreyxia.org, mooreyxia.com]
        addresses: [127.0.0.1]

#Install the named service on Master01; the other nodes point their DNS resolution at Master01

#Configure the DNS zone

[root@K8s-master01 ~]#cat /etc/bind/named.conf.default-zones

...

zone "mooreyxia.com" IN {

type master;

file "/etc/bind/mooreyxia.com.zone";

};

[root@K8s-master01 ~]#cat /etc/bind/mooreyxia.com.zone

$TTL 1D

@ IN SOA master admin (

1 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS master

master A 192.168.11.211

kubeapi A 192.168.11.253

K8s-master01 A 192.168.11.211

K8s-master02 A 192.168.11.212

K8s-master03 A 192.168.11.213

K8s-node01 A 192.168.11.214

K8s-node02 A 192.168.11.215

K8s-node03 A 192.168.11.216

[root@K8s-master01 ~]#named-checkconf

[root@K8s-master01 ~]#rndc reload

server reload successful

#Test resolution from one of the other nodes

[root@K8s-master02 ~]#apt install dnsutils -y && dig kubeapi.mooreyxia.com

...

No VM guests are running outdated hypervisor (qemu) binaries on this host.

; <<>> DiG 9.18.1-1ubuntu1.3-Ubuntu <<>> kubeapi.mooreyxia.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 11541

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 65494

;; QUESTION SECTION:

;kubeapi.mooreyxia.com. IN A

;; ANSWER SECTION:

kubeapi.mooreyxia.com. 86400 IN A 192.168.11.253

#Enable the service at boot

[root@K8s-master01 ~]#systemctl enable --now named

Synchronizing state of named.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable named

[root@K8s-master01 ~]#systemctl status named

● named.service - BIND Domain Name Server

Loaded: loaded (/lib/systemd/system/named.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-03-12 04:06:38 UTC; 11min ago

Docs: man:named(8)

Main PID: 3150 (named)

Tasks: 6 (limit: 2234)

Memory: 5.7M

CPU: 143ms

CGroup: /system.slice/named.service

└─3150 /usr/sbin/named -u bind

...

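- Initialize the first node

  - Build the master node of the Kubernetes cluster first; once it is configured, the worker nodes simply join the cluster. Note that on a cluster deployed by kubeadm, the core components kube-apiserver, kube-controller-manager, kube-scheduler and etcd all run as static Pods, and by default their images come from the registry.k8s.io registry. That registry cannot be reached directly from here, and there are two common workarounds; this example uses the second one, which is easier to set up.

    - Use a proxy service that can reach the registry;

    - Use a mirror registry hosted in China, for example registry.aliyuncs.com/google_containers.

#List the images that this kubeadm version needs - they can be downloaded in advance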

[root@K8s-master01 ~]#kubeadm config images list

registry.k8s.io/kube-apiserver:v1.26.2

registry.k8s.io/kube-controller-manager:v1.26.2

registry.k8s.io/kube-scheduler:v1.26.2

registry.k8s.io/kube-proxy:v1.26.2

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

registry.k8s.io/coredns/coredns:v1.9.3

#Pull the images into the Docker engine

[root@K8s-master01 ~]#kubeadm config images pull --cri-socket unix:///run/cri-dockerd.sock --image-repository=registry.aliyuncs.com/google_containers

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.9.3

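#With kubeadm 1.26 in this setup, the pause:3.6 image is still needed but is not among the images pulled from the mirror above, so it has to be downloaded separately from the upstream registry and loaded by hand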

[root@K8s-master01 ~]#ls

pause-3.6.tar

[root@K8s-master01 ~]#docker load -i pause-3.6.tar

1021ef88c797: Loading layer [==================================================>] 684.5kB/684.5kB

Loaded image: registry.k8s.io/pause:3.6

[root@K8s-master01 ~]#kubeadm reset --cri-socket unix:///run/cri-dockerd.sock && iptables -F

W0312 09:17:33.268634 2664 preflight.go:56] [reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0312 09:17:35.085681 2664 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] Deleted contents of the etcd data directory: /var/lib/etcd

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

W0312 09:17:35.102402 2664 cleanupnode.go:134] [reset] Failed to evaluate the "/var/lib/kubelet" directory. Skipping its unmount and cleanup: lstat /var/lib/kubelet: no such file or directory

[reset] Deleting contents of directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

#The images are now ready - the same images have to be prepared on every node

[root@K8s-master01 ~]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.26.2 63d3239c3c15 2 weeks ago 134MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.26.2 240e201d5b0d 2 weeks ago 123MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.26.2 db8f409d9a5d 2 weeks ago 56.3MB

registry.aliyuncs.com/google_containers/kube-proxy v1.26.2 6f64e7135a6e 2 weeks ago 65.6MB

registry.aliyuncs.com/google_containers/etcd 3.5.6-0 fce326961ae2 3 months ago 299MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f181688397 4 months ago 744kB

registry.aliyuncs.com/google_containers/coredns v1.9.3 5185b96f0bec 9 months ago 48.8MB

registry.k8s.io/pause 3.6 6270bb605e12 18 months ago 683kB

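#Start the initialization. The flags are annotated below for explanation only; the runnable command follows further down

-----------------------------------------------------------------------

kubeadm init

--control-plane-endpoint="kubeapi.mooreyxia.com"   #endpoint through which the cluster is accessed

--kubernetes-version=v1.26.2   #Kubernetes version

--pod-network-cidr=10.244.0.0/16   #Pod network; matches the Flannel default of 10.244.0.0/16

--service-cidr=10.96.0.0/12   #Service network; 10.96.0.0/12 is the default

--token-ttl=0   #lifetime of the shared bootstrap token; the default is 24 hours, 0 means it never expires

--cri-socket unix:///run/cri-dockerd.sock   #the CRI socket opened by cri-dockerd

--upload-certs   #upload the control-plane certificates so that other control-plane nodes can fetch them when joining

--image-repository=registry.aliyuncs.com/google_containers   #pull the images from the mirror registry in China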

-----------------------------------------------------------------------
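Inline comments after a trailing backslash, as in the annotated listing above, would break the command if pasted into a shell. One way to keep the explanations next to the flags and still have something runnable is a bash array, sketched below with the same flags and values as in this post. The final line only prints the command for review.

```shell
#!/usr/bin/env bash
# Build the kubeadm init command from an array so each flag can carry a comment.

init_args=(
  # Stable DNS name through which the API server is reached
  --control-plane-endpoint=kubeapi.mooreyxia.com
  # Kubernetes version to deploy
  --kubernetes-version=v1.26.2
  # Pod network, matching the Flannel default
  --pod-network-cidr=10.244.0.0/16
  # Service network
  --service-cidr=10.96.0.0/12
  # Bootstrap token lifetime; 0 means it never expires
  --token-ttl=0
  # CRI socket opened by cri-dockerd
  --cri-socket unix:///run/cri-dockerd.sock
  # Upload control-plane certificates for additional control-plane nodes
  --upload-certs
  # Pull images from the mirror registry
  --image-repository=registry.aliyuncs.com/google_containers
)

# Print the command for review; remove the leading "echo" to run it as root.
echo kubeadm init "${init_args[@]}"
```

A never-expiring token is convenient in a lab but is a judgment call elsewhere; leaving out `--token-ttl=0` falls back to the 24-hour default mentioned above.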

#Before initializing, confirm that the kubernetes directory is empty

[root@K8s-master01 ~]#tree /etc/kubernetes/

/etc/kubernetes/

└── manifests

1 directory, 0 files

#After a successful initialization the output contains several hints for manual follow-up steps; save it

[root@K8s-master01 ~]#kubeadm init --control-plane-endpoint="kubeapi.mooreyxia.com" --kubernetes-version=v1.26.2 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--token-ttl=0 \

--cri-socket unix:///run/cri-dockerd.sock \

--upload-certs \

--image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.26.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01.mooreyxia.com kubeapi.mooreyxia.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.11.211]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01.mooreyxia.com localhost] and IPs [192.168.11.211 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01.mooreyxia.com localhost] and IPs [192.168.11.211 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 12.508153 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ffba177268f3f65ddd6e2876e8dfb8c69893584be8000b8d30753eaa77359b1a

[mark-control-plane] Marking the node k8s-master01.mooreyxia.com as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01.mooreyxia.com as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: cblyqd.y86m1g7yjxwe3rr1

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 \

--discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115 \

--control-plane --certificate-key ffba177268f3f65ddd6e2876e8dfb8c69893584be8000b8d30753eaa77359b1a

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 \

--discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115

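#After a successful initialization, confirm that the configuration files and certificates were generated in the kubernetes directory

#The certificates under the pki directory have to be generated by hand in a binary installation; kubeadm creates them automatically here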

[root@K8s-master01 ~]#tree /etc/kubernetes/

/etc/kubernetes/

├── admin.conf

├── controller-manager.conf

├── kubelet.conf

├── manifests

│ ├── etcd.yaml

│ ├── kube-apiserver.yaml

│ ├── kube-controller-manager.yaml

│ └── kube-scheduler.yaml

├── pki

│ ├── apiserver-etcd-client.crt

│ ├── apiserver-etcd-client.key

│ ├── apiserver-kubelet-client.crt

│ ├── apiserver-kubelet-client.key

│ ├── apiserver.crt

│ ├── apiserver.key

│ ├── ca.crt

│ ├── ca.key

│ ├── etcd

│ │ ├── ca.crt

│ │ ├── ca.key

│ │ ├── healthcheck-client.crt

│ │ ├── healthcheck-client.key

│ │ ├── peer.crt

│ │ ├── peer.key

│ │ ├── server.crt

│ │ └── server.key

│ ├── front-proxy-ca.crt

│ ├── front-proxy-ca.key

│ ├── front-proxy-client.crt

│ ├── front-proxy-client.key

│ ├── sa.key

│ └── sa.pub

└── scheduler.conf

3 directories, 30 files

- After a successful initialization, complete the follow-up steps from the hints in the output above


#Step 1 from the hints: set up the kubeconfig file that the cluster administrator uses to authenticate to the Kubernetes cluster

[root@K8s-master01 ~]#mkdir -p $HOME/.kube

[root@K8s-master01 ~]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@K8s-master01 ~]#chown $(id -u):$(id -g) $HOME/.kube/config

[root@K8s-master01 ~]#ll .kube/

total 16

drwxr-xr-x 2 root root 4096 Mar 12 09:30 ./

drwx------ 6 root root 4096 Mar 12 09:29 ../

-rw------- 1 root root 5649 Mar 12 09:30 config

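#[Alternative to the step above; choose one of the two] As root, the KUBECONFIG environment variable can tell kubectl and other tools which kubeconfig to use by default;

export KUBECONFIG=/etc/kubernetes/admin.conf

----------------------------------------------------------------

#Note: if kubectl reports that the API server cannot be reached, as in the error below,

the most likely cause is name resolution: check that kubeapi.mooreyxia.com resolves to the correct address on this host.

If name resolution is fine, log out of the current user and log back in after creating the kubeconfig under the home directory, so that the new session picks up the current configuration and environment, then try again.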

[root@K8s-master01 ~]#kubectl get nodes

E0312 11:13:05.979926 70465 memcache.go:238] couldn't get current server API group list: Get "https://kubeapi.mooreyxia.com:6443/api?timeout=32s": EOF

-------------------------------------------------------------------
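When debugging errors like the one above, it helps to confirm which kubeconfig kubectl will actually read. The helper below mirrors the two options from step 1: the KUBECONFIG environment variable if it is set, otherwise the file under the home directory. The function name is made up, and an explicit `--kubeconfig` flag on the kubectl command line would take precedence over both.

```shell
#!/usr/bin/env bash
# Show which kubeconfig kubectl will pick up in the current session.

effective_kubeconfig() {
  if [ -n "${KUBECONFIG:-}" ]; then
    # KUBECONFIG may also hold a colon-separated list of files.
    printf '%s\n' "$KUBECONFIG"
  else
    printf '%s\n' "${HOME}/.kube/config"
  fi
}

echo "kubectl will read: $(effective_kubeconfig)"
```

If the printed path does not exist or is not the admin.conf copy created earlier, the kubeconfig setup is the thing to fix; if it is correct, the problem is more likely name resolution or the API server itself.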

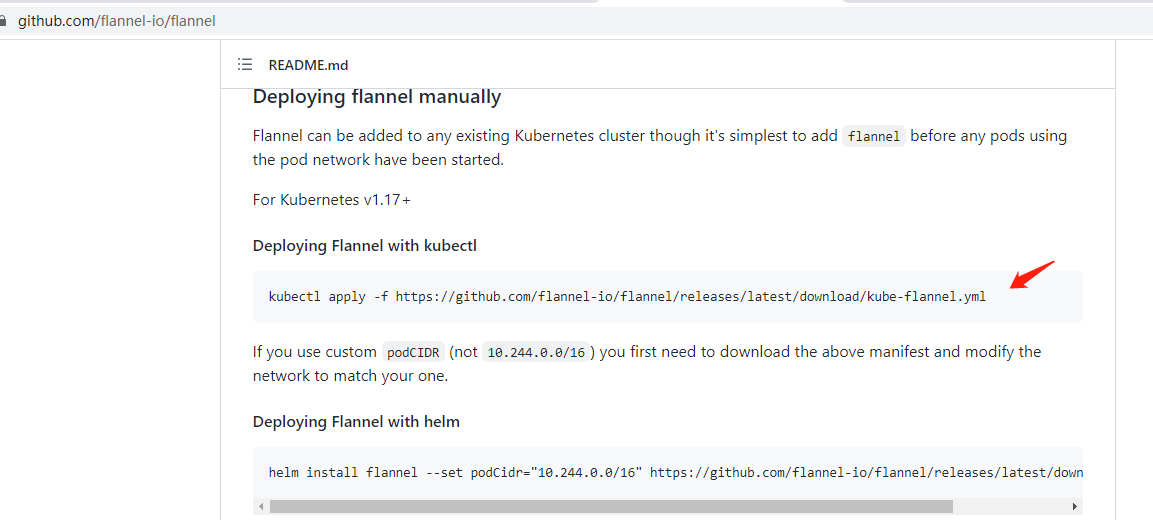

# Step 2 from the hints: deploy a network plugin for the Kubernetes cluster; which plugin to use is up to the administrator. Flannel is chosen here

[root@K8s-master01 ~]#kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

#A kube-flannel namespace has now been created, with the flannel Pod running in it

[root@K8s-master01 ~]#kubectl get ns

NAME STATUS AGE

default Active 10m

kube-flannel Active 20s

kube-node-lease Active 10m

kube-public Active 10m

kube-system Active 10m

[root@K8s-master01 ~]#kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-2z2fd 0/1 Init:1/2 0 39s

#Watch kube-flannel being downloaded and started

[root@K8s-master01 ~]#kubectl get pods -n kube-flannel -w

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-2z2fd 0/1 Init:1/2 0 51s

kube-flannel-ds-2z2fd 0/1 PodInitializing 0 68s

kube-flannel-ds-2z2fd 1/1 Running 0 69s
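Watching with `-w` works well interactively, but a script needs a bounded wait. The following is a generic retry helper, written as a sketch; `wait_until` is a made-up name, not a kubectl feature:

```shell
# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-run COMMAND until it succeeds; give up once TIMEOUT_SECONDS have passed.
wait_until() {
  timeout_s=$1
  interval_s=$2
  shift 2
  elapsed=0
  while ! "$@"; do
    if [ "$elapsed" -ge "$timeout_s" ]; then
      return 1
    fi
    sleep "$interval_s"
    elapsed=$((elapsed + interval_s))
  done
  return 0
}
```

One possible use is `wait_until 180 5 sh -c 'kubectl get pods -n kube-flannel --no-headers | grep -q "1/1 *Running"'`. kubectl also has a built-in alternative for this particular case: `kubectl wait --for=condition=Ready pod --all -n kube-flannel --timeout=180s`.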

# Confirm that the node is registered and Ready

[root@K8s-master01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.mooreyxia.com Ready control-plane 14m v1.26.2

# Confirm the cluster namespaces

[root@K8s-master01 ~]#kubectl get namespaces

NAME STATUS AGE

default Active 120m

kube-flannel Active 89s

kube-node-lease Active 120m

kube-public Active 120m

kube-system Active 120m

# Step 3 from the kubeadm init output: add further control-plane nodes to the cluster

--------------------------------------------------------------------------------

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 \

--discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115 \

--control-plane --certificate-key ffba177268f3f65ddd6e2876e8dfb8c69893584be8000b8d30753eaa77359b1a

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

--------------------------------------------------------------------------------

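# Copy the command and run it on each control-plane node to be added, appending the cri-dockerd socket. [--cri-socket unix:///run/cri-dockerd.sock is required in this setup, which uses Docker Engine through cri-dockerd.] The token is the bootstrap credential for joining the cluster. kubeadm tokens expire after 24 hours by default, and the uploaded certificates are deleted after two hours (see the kubeadm output above), so nodes joined later need a fresh token and certificate key.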

[root@K8s-master02 ~]#kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 --discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115 --control-plane --certificate-key ffba177268f3f65ddd6e2876e8dfb8c69893584be8000b8d30753eaa77359b1a --cri-socket unix:///run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master02.mooreyxia.com kubeapi.mooreyxia.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.11.212]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master02.mooreyxia.com localhost] and IPs [192.168.11.212 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master02.mooreyxia.com localhost] and IPs [192.168.11.212 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node k8s-master02.mooreyxia.com as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master02.mooreyxia.com as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

# After joining, set up the kubeconfig on the new node as well

[root@K8s-master02 ~]#mkdir -p $HOME/.kube

[root@K8s-master02 ~]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@K8s-master02 ~]#chown $(id -u):$(id -g) $HOME/.kube/config

# Once the new nodes have joined the control plane, the cluster can be queried from any control-plane node

[root@K8s-master02 ~]#kubectl get ns

NAME STATUS AGE

default Active 32m

kube-flannel Active 22m

kube-node-lease Active 32m

kube-public Active 32m

kube-system Active 32m

[root@K8s-master02 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.mooreyxia.com Ready control-plane 32m v1.26.2

k8s-master02.mooreyxia.com Ready control-plane 9m35s v1.26.2

k8s-master03.mooreyxia.com Ready control-plane 8m35s v1.26.2
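Each control-plane node joined above also runs a stacked etcd member (the join output mentions "local/stacked etcd cluster"), so the three-node control plane is also a three-member etcd cluster. etcd needs a majority of its members to be available. The arithmetic below, with illustrative helper names, shows why three nodes tolerate one failure while two tolerate none:

```shell
# Majority (quorum) size for an etcd cluster with N members: floor(N/2) + 1.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the cluster still has a quorum.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}
```

For this cluster, `etcd_quorum 3` gives 2 and `etcd_fault_tolerance 3` gives 1. Going from three to four members would not raise the fault tolerance, which is why odd member counts are the usual choice.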

# Step 4 from the kubeadm init output: add worker nodes to the cluster

---------------------------------------------

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 \

--discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115

---------------------------------------------

# Copy the command and run it on each worker node to be added, appending the cri-dockerd socket. [--cri-socket unix:///run/cri-dockerd.sock is required in this setup.]

[root@K8s-node01 ~]#kubeadm join kubeapi.mooreyxia.com:6443 --token cblyqd.y86m1g7yjxwe3rr1 \

--discovery-token-ca-cert-hash sha256:85051ab0e88b7348321c7072f423f781b0dfb92e17d6382d96bc7be6a72e8115 --cri-socket unix:///run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
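The control-plane and worker join commands used above share the same shape and differ only in the `--control-plane --certificate-key` pair. As a rough sketch, they could be assembled like this. Both function names are hypothetical, and the token pattern (six characters, a dot, sixteen characters, all lowercase letters or digits) is the format kubeadm documents for bootstrap tokens:

```shell
# Check the bootstrap token format: 6 chars, a dot, 16 chars,
# all lowercase letters or digits.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Assemble a join command. Arguments: endpoint, token, CA cert hash,
# CRI socket, and optionally a certificate key (control-plane join only).
build_join_command() {
  is_bootstrap_token "$2" || { echo "malformed token: $2" >&2; return 1; }
  cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3 --cri-socket $4"
  if [ -n "${5:-}" ]; then
    cmd="$cmd --control-plane --certificate-key $5"
  fi
  printf '%s\n' "$cmd"
}
```

On a live cluster, `kubeadm token create --print-join-command` prints a ready-made worker join command with a fresh token, and `kubeadm init phase upload-certs --upload-certs` (quoted in the kubeadm output earlier) re-uploads the certificates and prints a new certificate key.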

- Verify the cluster

# List all cluster nodes from the first control-plane node

[root@K8s-master01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.mooreyxia.com Ready control-plane 41m v1.26.2

k8s-master02.mooreyxia.com Ready control-plane 18m v1.26.2

k8s-master03.mooreyxia.com Ready control-plane 17m v1.26.2

k8s-node01.mooreyxia.com Ready <none> 4m42s v1.26.2

k8s-node02.mooreyxia.com Ready <none> 4m31s v1.26.2

k8s-node03.mooreyxia.com Ready <none> 4m14s v1.26.2

# Check the CNI network plugin: the flannel DaemonSet runs one Pod per node, six in total

[root@K8s-master01 ~]#kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-2z2fd 1/1 Running 0 33m

kube-flannel-ds-5795d 1/1 Running 0 19m

kube-flannel-ds-6k4vm 1/1 Running 0 20m

kube-flannel-ds-8rs8j 1/1 Running 0 6m28s

kube-flannel-ds-999kg 1/1 Running 0 6m1s

kube-flannel-ds-ngrwc 1/1 Running 0 6m18s

# Inspect the core components in the kube-system namespace

--------------------------------------------

Node types:

Master: Control Plane # created automatically by kubeadm

API Server: http/https, RESTful API, declarative API

Scheduler

Controller Manager

Etcd

Worker: Data Plane

Kubelet

CRI --> Container Runtime # deployed manually earlier

kubelet --> cri-dockerd --> Docker Engine --> Containerd (CNCF) --> containerd-shim --> RunC

CNI --> Network Plugin # deployed manually earlier

CoreOS --> Flannel

CSI --> Volume Plugin # nothing to deploy here: only in-tree plugins are used

In-Tree: plugins built into the kubelet

Kube Proxy # created automatically by kubeadm, deployed on every node

kubeadm deploys this component as a DaemonSet

--------------------------------------------

[root@K8s-master01 ~]#kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5bbd96d687-p64zh 0/1 CrashLoopBackOff 11 (2m38s ago) 44m

coredns-5bbd96d687-xcbwz 0/1 CrashLoopBackOff 11 (2m21s ago) 44m

etcd-k8s-master01.mooreyxia.com 1/1 Running 0 45m

etcd-k8s-master02.mooreyxia.com 1/1 Running 0 22m

etcd-k8s-master03.mooreyxia.com 1/1 Running 0 21m

kube-apiserver-k8s-master01.mooreyxia.com 1/1 Running 0 45m

kube-apiserver-k8s-master02.mooreyxia.com 1/1 Running 0 21m

kube-apiserver-k8s-master03.mooreyxia.com 1/1 Running 0 20m

kube-controller-manager-k8s-master01.mooreyxia.com 1/1 Running 0 45m

kube-controller-manager-k8s-master02.mooreyxia.com 1/1 Running 0 22m

kube-controller-manager-k8s-master03.mooreyxia.com 1/1 Running 0 21m

kube-proxy-4kf27 1/1 Running 0 22m

kube-proxy-b2hvp 1/1 Running 0 21m

kube-proxy-dlrx9 1/1 Running 0 8m34s

kube-proxy-jwwf5 1/1 Running 0 44m

kube-proxy-r5s8j 1/1 Running 0 8m24s

kube-proxy-w9dg4 1/1 Running 0 8m7s

kube-scheduler-k8s-master01.mooreyxia.com 1/1 Running 0 45m

kube-scheduler-k8s-master02.mooreyxia.com 1/1 Running 0 22m

kube-scheduler-k8s-master03.mooreyxia.com 1/1 Running 0 21m
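In the listing above, every Pod is Running except the two coredns Pods, which are in CrashLoopBackOff. With longer listings such Pods are easy to miss, so here is a small filter, a sketch with a made-up function name. It assumes the default `kubectl get pods` column layout with a header line:

```shell
# Read `kubectl get pods` output on stdin and print the Pods whose READY
# column (ready/total) is not full, followed by a count.
# Assumes the first line is the header; blank lines are skipped.
list_not_ready() {
  awk 'NR > 1 && NF >= 3 {
         split($2, r, "/")
         if (r[1] != r[2]) { print $1, $3; n++ }
       }
       END { print "not ready:", n + 0 }'
}
```

Typical use would be `kubectl get pods -n kube-system | list_not_ready`. For any Pod it reports, `kubectl describe pod` and `kubectl logs` in the same namespace are the usual next steps.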

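Testing application orchestration and Service access

Test case: deploying Nginx

- Create a Deployment to run Nginx:

# Pull an image from the Docker registry and create a Pod from it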

[root@K8s-master01 ~]#kubectl create deployment nginx --image=nginx:1.22-alpine --replicas=1

deployment.apps/nginx created

[root@K8s-master01 ~]#kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-86f4df7f8b-lhkcb 0/1 ContainerCreating 0 18s

# The Pod was scheduled to worker node 03 and runs there

[root@K8s-master01 ~]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-86f4df7f8b-lhkcb 1/1 Running 0 31s 10.244.5.2 k8s-node03.mooreyxia.com <none> <none>

[root@K8s-node03 ~]#docker images | grep nginx

nginx 1.22-alpine 652309d09131 4 weeks ago 23.5MB

# Access nginx in the Pod directly through its Pod IP

[root@K8s-node03 ~]#curl 10.244.5.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

- Create a Service to expose Nginx:

# Service types that kubectl can create

[root@K8s-master01 ~]#kubectl create service --help

Create a service using a specified subcommand.

Aliases:

service, svc

Available Commands:

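clusterip Create a ClusterIP service # reachable inside the cluster only

externalname Create an ExternalName service # maps the Service name to an external DNS name

loadbalancer Create a LoadBalancer service # exposed outside the cluster through an external load balancer

nodeport Create a NodePort service # exposed outside the cluster on a port of every node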

Usage:

kubectl create service [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

# Create a NodePort Service, which opens a port on every node in addition to the Service's in-cluster ClusterIP

------------------------------

# Syntax

kubectl create service nodeport NAME [--tcp=port:targetPort] [--dry-run=server|client|none] [options]

------------------------------

[root@K8s-master01 ~]# kubectl create service nodeport nginx --tcp=80:80

service/nginx created

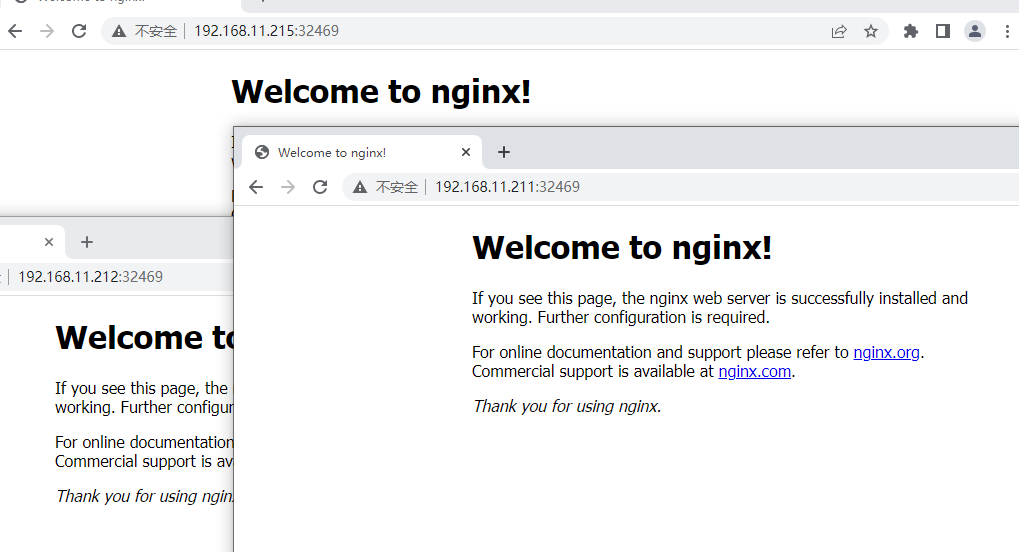

[root@K8s-master01 ~]#kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 116m

nginx NodePort 10.106.57.126 <none> 80:32469/TCP 18s

# Inside the cluster, access the Service through its automatically assigned ClusterIP

[root@K8s-master01 ~]#curl 10.106.57.126

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

From outside the cluster, access port 32469 on any cluster node.
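The node port comes from the PORT(S) column of `kubectl get services`, where `80:32469/TCP` means Service port 80 is published on node port 32469. The helpers below are an illustrative sketch with made-up names. The 30000-32767 range is the Kubernetes default and can be changed with the API server's `--service-node-port-range` flag:

```shell
# Extract the node port from a PORT(S) value such as "80:32469/TCP".
node_port_of() {
  p=${1#*:}
  printf '%s\n' "${p%%/*}"
}

# True if the port lies in the default NodePort range 30000-32767.
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Build the URL to try from outside the cluster. The first argument is any
# name or IP of a cluster node that is routable from the client.
nodeport_url() {
  printf 'http://%s:%s/\n' "$1" "$(node_port_of "$2")"
}
```

For example, `curl "$(nodeport_url k8s-node01.mooreyxia.com 80:32469/TCP)"` should return the same nginx welcome page, provided the client can resolve and reach that node.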

Test case: deploying Demoapp

- Create a Deployment to run Demoapp:

# Create a Deployment that runs two Pods

[root@K8s-master02 ~]# kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=2

deployment.apps/demoapp created

[root@K8s-master02 ~]#kubectl get pods

NAME READY STATUS RESTARTS AGE

demoapp-75f59c894-46c8n 0/1 ContainerCreating 0 9s

demoapp-75f59c894-5cx48 0/1 ContainerCreating 0 9s

nginx-86f4df7f8b-lhkcb 1/1 Running 0 18m

# Each Pod is automatically assigned its own IP address

[root@K8s-master02 ~]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-75f59c894-46c8n 1/1 Running 0 52s 10.244.4.2 k8s-node02.mooreyxia.com <none> <none>

demoapp-75f59c894-5cx48 1/1 Running 0 52s 10.244.3.2 k8s-node01.mooreyxia.com <none> <none>

nginx-86f4df7f8b-lhkcb 1/1 Running 0 19m 10.244.5.2 k8s-node03.mooreyxia.com <none> <none>

# Access each Pod in turn

[root@K8s-master02 ~]#curl 10.244.3.2

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

[root@K8s-master02 ~]#curl 10.244.4.2

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

- Create a Service to expose Demoapp:

# Create a NodePort Service that forwards Service port 80 to port 80 on the demoapp Pods

[root@K8s-master02 ~]# kubectl create service nodeport demoapp --tcp=80:80

service/demoapp created

# A ClusterIP is assigned automatically

[root@K8s-master02 ~]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.101.35.99 <none> 80:30646/TCP 40s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 133m

nginx NodePort 10.106.57.126 <none> 80:32469/TCP 17m

# Requests to the ClusterIP are load-balanced across the Pods automatically

[root@K8s-master02 ~]#while true; do curl 10.101.35.99;sleep .5;done

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

From outside the cluster, access port 30646 on any cluster node.
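The responses in the loop above are not split evenly between the two Pods. That is expected when kube-proxy runs in iptables mode, where a backend is picked at random for each connection rather than in strict rotation. To put numbers on the distribution, the output can be tallied. This is a sketch: both function names are made up, and it assumes the demoapp response format shown above:

```shell
# Read demoapp responses on stdin and print "<pod name> <hits>" per backend.
tally_backends() {
  sed -n 's/.*ServerName: \([^,]*\),.*/\1/p' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Send a fixed number of requests instead of looping forever.
# Arguments: URL or IP, request count (defaults to 20).
sample_service() {
  i=0
  while [ "$i" -lt "${2:-20}" ]; do
    curl -s "$1"
    i=$((i + 1))
  done
}
```

A run such as `sample_service 10.101.35.99 50 | tally_backends` prints one line per Pod with its hit count. With more requests the counts should even out.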

- Scale the Demoapp Deployment out and in:

# Confirm that two demoapp Pods are currently running

[root@K8s-master02 ~]#kubectl get pods

NAME READY STATUS RESTARTS AGE

demoapp-75f59c894-46c8n 1/1 Running 0 18m

demoapp-75f59c894-5cx48 1/1 Running 0 18m

nginx-86f4df7f8b-lhkcb 1/1 Running 0 37m

# Scale out to 5 replicas

[root@K8s-master02 ~]# kubectl scale deployment demoapp --replicas=5

deployment.apps/demoapp scaled

[root@K8s-master02 ~]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-75f59c894-46c8n 1/1 Running 0 20m 10.244.4.2 k8s-node02.mooreyxia.com <none> <none>

demoapp-75f59c894-5cx48 1/1 Running 0 20m 10.244.3.2 k8s-node01.mooreyxia.com <none> <none>

demoapp-75f59c894-c8km7 1/1 Running 0 54s 10.244.5.3 k8s-node03.mooreyxia.com <none> <none>

demoapp-75f59c894-cj5wr 1/1 Running 0 53s 10.244.4.3 k8s-node02.mooreyxia.com <none> <none>

demoapp-75f59c894-v25sf 1/1 Running 0 53s 10.244.3.3 k8s-node01.mooreyxia.com <none> <none>

nginx-86f4df7f8b-lhkcb 1/1 Running 0 38m 10.244.5.2 k8s-node03.mooreyxia.com <none> <none>

# The new Pods are added to the Service's load-balancing backends automatically

[root@K8s-master02 ~]#while true; do curl 10.101.35.99;sleep .5;done

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-cj5wr, ServerIP: 10.244.4.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-v25sf, ServerIP: 10.244.3.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-cj5wr, ServerIP: 10.244.4.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-v25sf, ServerIP: 10.244.3.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-v25sf, ServerIP: 10.244.3.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-cj5wr, ServerIP: 10.244.4.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-v25sf, ServerIP: 10.244.3.3!

# Scale in to 3 replicas

[root@K8s-master02 ~]#kubectl scale deployment demoapp --replicas=3

deployment.apps/demoapp scaled

# Confirm that 3 demoapp Pods remain

[root@K8s-master02 ~]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-75f59c894-46c8n 1/1 Running 0 24m 10.244.4.2 k8s-node02.mooreyxia.com <none> <none>

demoapp-75f59c894-5cx48 1/1 Running 0 24m 10.244.3.2 k8s-node01.mooreyxia.com <none> <none>

demoapp-75f59c894-c8km7 1/1 Running 0 5m2s 10.244.5.3 k8s-node03.mooreyxia.com <none> <none>

nginx-86f4df7f8b-lhkcb 1/1 Running 0 42m 10.244.5.2 k8s-node03.mooreyxia.com <none> <none>

# The removed Pods are dropped from the Service's load-balancing backends automatically

[root@K8s-master02 ~]#while true; do curl 10.101.35.99;sleep .5;done

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-5cx48, ServerIP: 10.244.3.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-c8km7, ServerIP: 10.244.5.3!

iKubernetes demoapp v1.0 !! ClientIP: 10.244.1.0, ServerName: demoapp-75f59c894-46c8n, ServerIP: 10.244.4.2!

Test case: deploying Wordpress

# Deploy MySQL:

git clone https://github.com/iKubernetes/learning-k8s.git

[root@K8s-master03 ~]#git clone https://gitee.com/mooreyxia/learning-k8s.git

Cloning into 'learning-k8s'...

remote: Enumerating objects: 363, done.

remote: Counting objects: 100% (363/363), done.

remote: Compressing objects: 100% (228/228), done.

remote: Total 363 (delta 129), reused 363 (delta 129), pack-reused 0

Receiving objects: 100% (363/363), 108.81 KiB | 599.00 KiB/s, done.

Resolving deltas: 100% (129/129), done.

[root@K8s-master03 ~]#cd learning-k8s/wordpress/

[root@K8s-master03 wordpress]#ls

README.md mysql mysql-ephemeral nginx wordpress wordpress-apache-ephemeral

# Apply all manifests in the directory to create the application

[root@K8s-master03 wordpress]# kubectl apply -f mysql-ephemeral/

secret/mysql-user-pass created

service/mysql created

deployment.apps/mysql created

# A single MySQL instance is now running

[root@K8s-master03 wordpress]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-75f59c894-46c8n 1/1 Running 0 87m 10.244.4.2 k8s-node02.mooreyxia.com <none> <none>

demoapp-75f59c894-5cx48 1/1 Running 0 87m 10.244.3.2 k8s-node01.mooreyxia.com <none> <none>

demoapp-75f59c894-c8km7 1/1 Running 0 67m 10.244.5.3 k8s-node03.mooreyxia.com <none> <none>

mysql-58bfc8ff6-47vqk 1/1 Running 0 99s 10.244.3.4 k8s-node01.mooreyxia.com <none> <none>

nginx-86f4df7f8b-lhkcb 1/1 Running 0 105m 10.244.5.2 k8s-node03.mooreyxia.com <none> <none>

# Inspect the account information defined in the Secret

[root@K8s-master03 wordpress]#cat mysql-ephemeral/01-secret-mysql.yaml

apiVersion: v1

kind: Secret

metadata:

creationTimestamp: null

name: mysql-user-pass

data:

database.name: d3BkYg==

root.password: TUBnZUVkdQ==

user.name: d3B1c2Vy

user.password: bWFnZURVLmMwbQ==

[root@K8s-master03 wordpress]#echo d3BkYg== | base64 -d

wpdb

[root@K8s-master03 wordpress]#echo TUBnZUVkdQ== | base64 -d

M@geEdu
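The values under `data:` in the Secret are only base64-encoded, not encrypted, so anyone who can read the manifest can recover them as shown above. Two small wrappers (hypothetical names) make the round trip less error-prone. `printf` avoids the trailing newline that `echo` would otherwise encode into the value:

```shell
# Encode a value the way it must appear under `data:` in a Secret.
# printf '%s' keeps a trailing newline out of the encoded value.
secret_encode() {
  printf '%s' "$1" | base64
}

# Decode a Secret value and end the output with a newline, so the next
# shell prompt starts on its own line.
secret_decode() {
  printf '%s' "$1" | base64 -d
  echo
}
```

Here `secret_decode d3B1c2Vy` prints the `user.name` value, and `secret_encode wpdb` reproduces the `database.name` entry. On a live cluster a single key can be read with `kubectl get secret mysql-user-pass -o jsonpath='{.data.user\.name}' | base64 -d`.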

# Try logging in to MySQL

[root@K8s-master03 wordpress]#kubectl exec -it mysql-58bfc8ff6-47vqk -- /bin/sh

sh-4.4# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 10

Server version: 8.0.32 MySQL Community Server - GPL

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> SHOW DATABASES;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| wpdb |

+--------------------+

5 rows in set (0.01 sec)

# Deploy Wordpress:

[root@K8s-master03 wordpress]#kubectl apply -f wordpress-apache-ephemeral/

service/wordpress created

deployment.apps/wordpress created

[root@K8s-master03 wordpress]#kubectl get pods -o wide |grep wordpress

wordpress-767b7ffbd-cjdlb 1/1 Running 0 2m27s 10.244.4.4 k8s-node02.mooreyxia.com <none> <none>

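# The mysql Service created earlier (type ClusterIP) is what the wordpress Pod connects to; the wordpress Service itself is of type NodePort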

[root@K8s-master03 wordpress]#kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.101.35.99 <none> 80:30646/TCP 91m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h45m

mysql ClusterIP 10.101.129.135 <none> 3306/TCP 14m # ClusterIP: reachable inside the cluster only

nginx NodePort 10.106.57.126 <none> 80:32469/TCP 108m

wordpress NodePort 10.110.166.246 <none> 80:30607/TCP 4m35s # exposes port 30607 outside the cluster

From outside the cluster, access port 30607 on any cluster node.
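Inside the cluster, Services are normally addressed by DNS name rather than by ClusterIP. The name follows the same pattern as the `kubernetes.default.svc.cluster.local` entry in the API server certificate shown earlier. The helper below is an illustrative sketch: the function name is made up, and it assumes the `default` namespace and the `cluster.local` cluster domain unless told otherwise:

```shell
# Build the in-cluster DNS name of a Service.
# Arguments: service name, namespace (default: default),
# cluster domain (default: cluster.local).
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}
```

`service_fqdn mysql` gives the name under which the mysql Service would be reachable from other Pods. Whether the wordpress manifest uses that name or a shorter form is not shown here, and in-cluster name resolution depends on the coredns Pods being healthy.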

I'm moore. Let's all keep at it together!