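If every node of the Host cluster (H cluster for short) can reach the kube-apiserver address of the Member cluster (M cluster for short), you can use a direct connection.

This method works when the M cluster's kube-apiserver address can be exposed to the public network, or when the H cluster and the M cluster are in the same private network or subnet.

To use the multi-cluster feature through a direct connection, you need at least two clusters, one acting as the H cluster and one as the M cluster. You can designate a cluster as the H cluster or the M cluster either before or after installing KubeSphere.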

Preparing the Host cluster

The Host cluster provides the central control plane, and you can designate only one Host cluster.

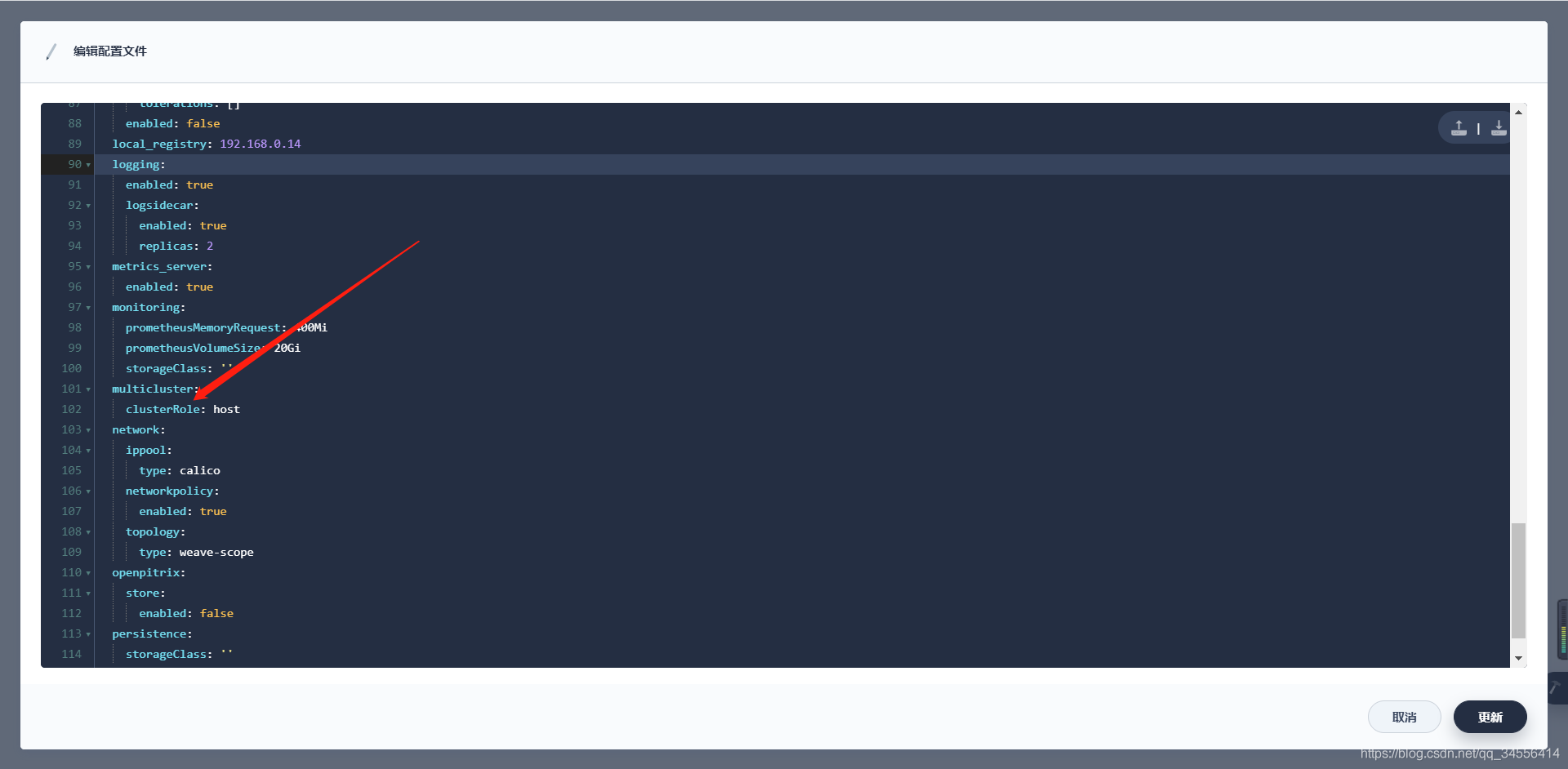

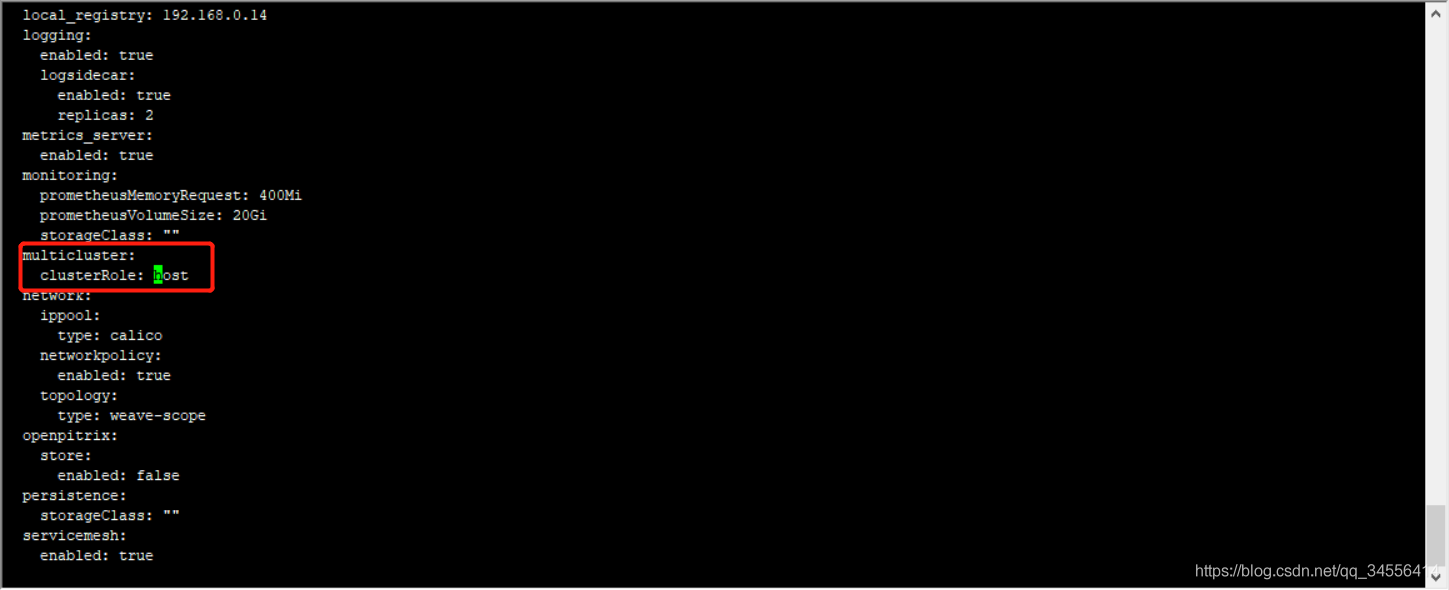

If a standalone KubeSphere cluster is already installed, edit its cluster configuration and set `clusterRole` to `host`.

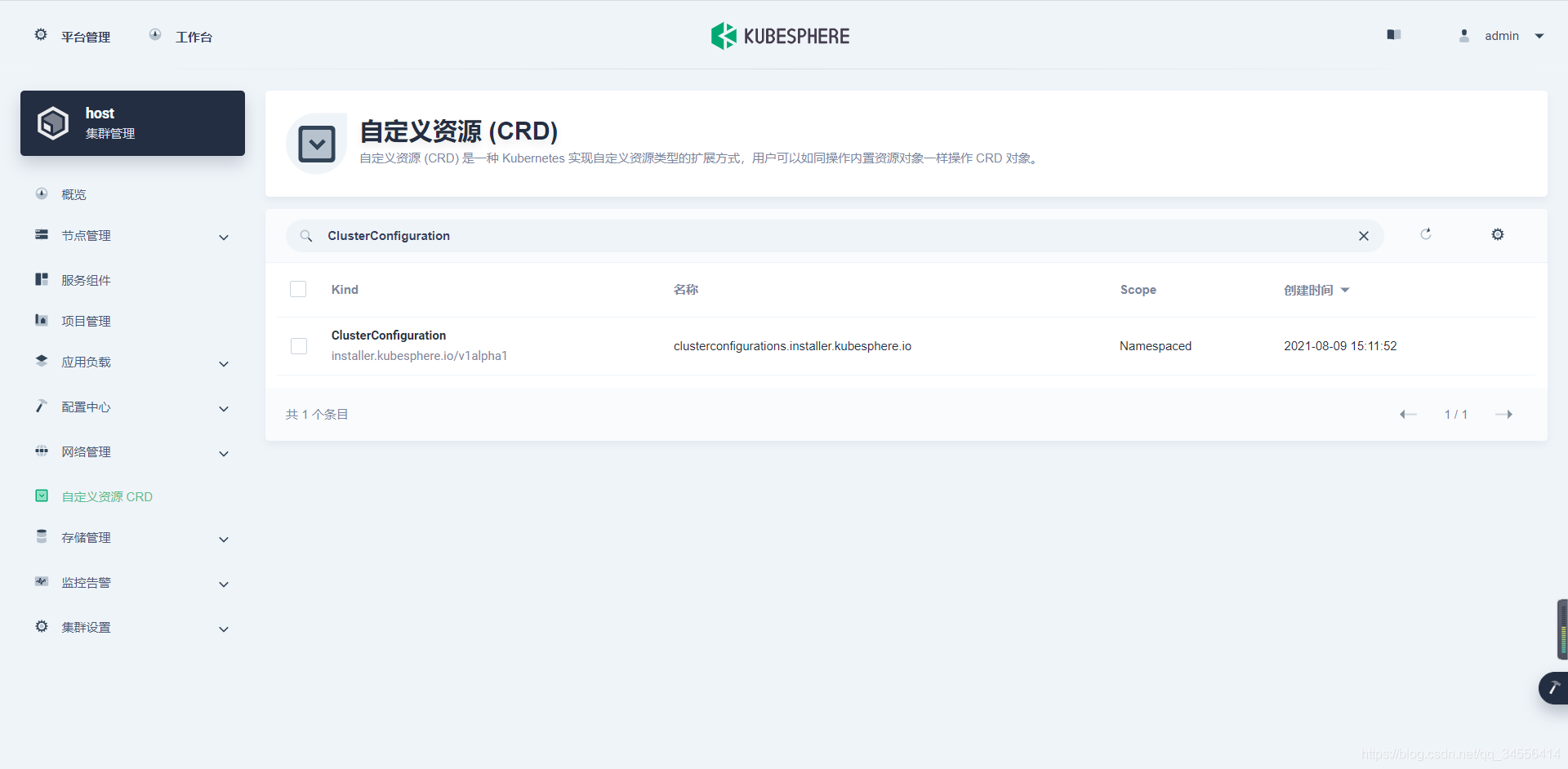

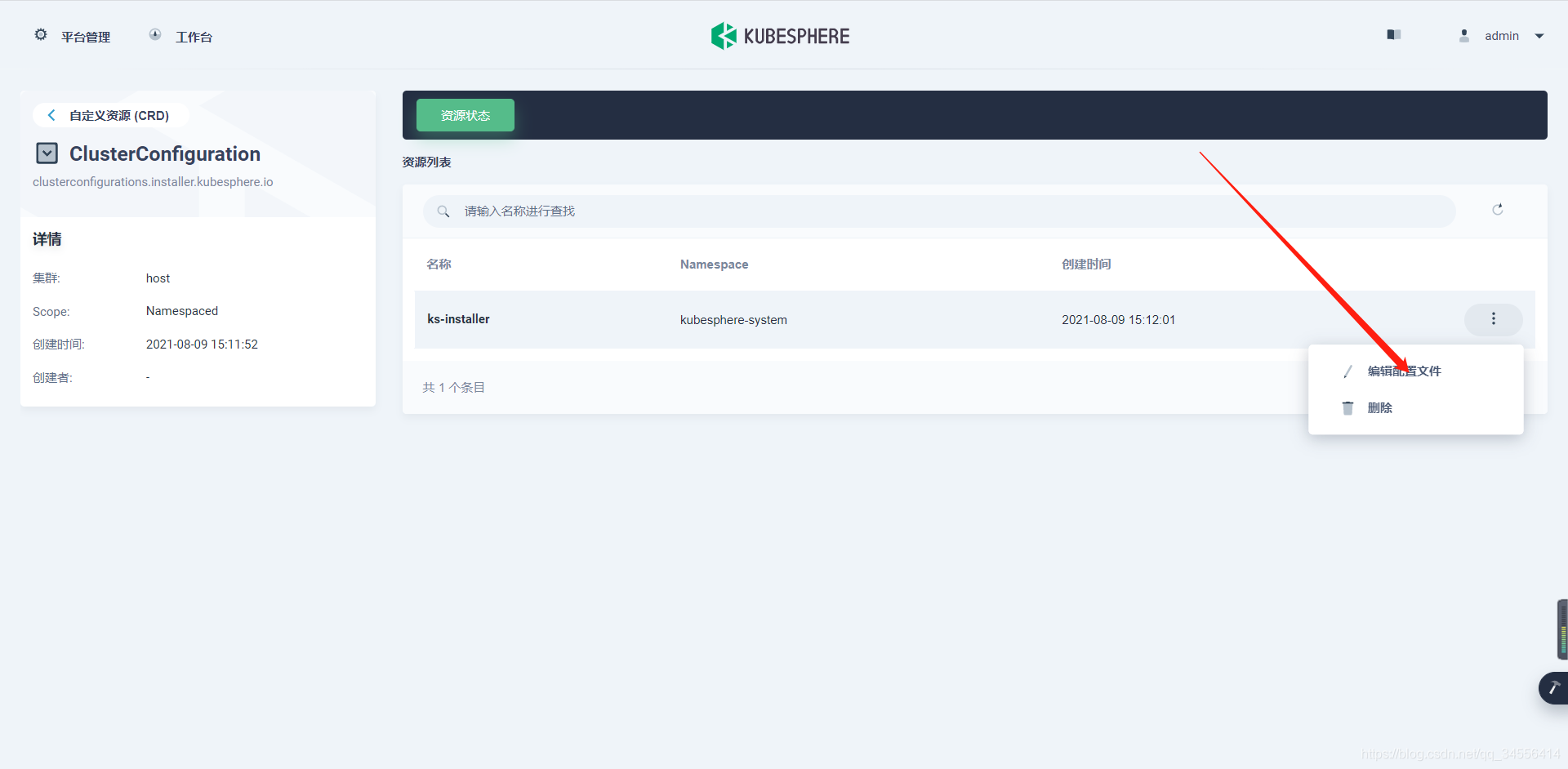

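- Option A - Using the web console:

Log in to the console with the admin account, go to CRDs on the Cluster Management page, enter the keyword ClusterConfiguration, and open its details page. Edit the YAML of ks-installer and set `clusterRole` (under `multicluster`) to `host`.

- Option B - Using kubectl:

```shell
[root@master01 ~]# kubectl edit cc ks-installer -n kubesphere-system
```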
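As a non-interactive alternative to editing the YAML, the same change could be applied with `kubectl patch`. This is a minimal sketch, assuming the KubeSphere 3.x `ClusterConfiguration` layout in which the role is stored at `spec.multicluster.clusterRole`; the helper name `set_cluster_role` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive alternative to `kubectl edit cc ks-installer`.
# Assumes the KubeSphere 3.x layout spec.multicluster.clusterRole.
# set_cluster_role is a hypothetical helper, not part of KubeSphere.
set_cluster_role() {
  local role="$1"
  # Accept only the roles KubeSphere uses: host, member, none.
  case "$role" in
    host|member|none) ;;
    *) echo "invalid clusterRole: $role" >&2; return 1 ;;
  esac
  # A JSON merge patch changes only this field and keeps the rest of spec.
  kubectl -n kubesphere-system patch cc ks-installer --type merge \
    -p "{\"spec\":{\"multicluster\":{\"clusterRole\":\"${role}\"}}}"
}

# On the Host cluster:
# set_cluster_role host
```

After patching, ks-installer still has to re-run the installation, as in the next step.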

Then delete the ks-installer pod so that it is recreated and runs the installer again, and follow its logs:

```shell
[root@master01 ~]# kubectl get pod -n kubesphere-system
NAME                           READY   STATUS    RESTARTS   AGE
ks-installer-b4cb495c9-9xjrl   1/1     Running   0          3d20h
[root@master01 ~]# kubectl delete pod ks-installer-b4cb495c9-9xjrl -n kubesphere-system
pod "ks-installer-b4cb495c9-9xjrl" deleted
[root@master01 ~]# kubectl get pod -n kubesphere-system
NAME                           READY   STATUS    RESTARTS   AGE
ks-installer-b4cb495c9-txs87   1/1     Running   0          14s
[root@master01 ~]# kubectl logs ks-installer-b4cb495c9-txs87 -n kubesphere-system -f
```
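The session above copies generated pod names by hand. A minimal sketch that does the same by label, assuming the `app=ks-install` label used by the log command in this post; `restart_ks_installer`, the 2-second polling interval and the 300-second timeout are illustrative choices, not part of KubeSphere.

```shell
#!/usr/bin/env bash
# Sketch: restart ks-installer and follow its logs without copying pod names.
# Assumes ks-installer pods carry the app=ks-install label.
NS=kubesphere-system
SELECTOR=app=ks-install

restart_ks_installer() {
  # The Deployment recreates the pod; `kubectl delete` waits for the old one to go.
  kubectl -n "$NS" delete pod -l "$SELECTOR"
  # Poll until the replacement pod exists, so `kubectl wait` has something to match.
  until kubectl -n "$NS" get pod -l "$SELECTOR" -o name | grep -q .; do
    sleep 2
  done
  kubectl -n "$NS" wait --for=condition=Ready pod -l "$SELECTOR" --timeout=300s || return 1
  # Follow the installer logs; stop with Ctrl-C once the result is printed.
  kubectl -n "$NS" logs -l "$SELECTOR" -f
}
```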

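You can use kubectl to follow the installation logs and verify the status. Run the following command and wait a moment; once the Host cluster is ready, the log shows a success message.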

```shell
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

Preparing the Member cluster

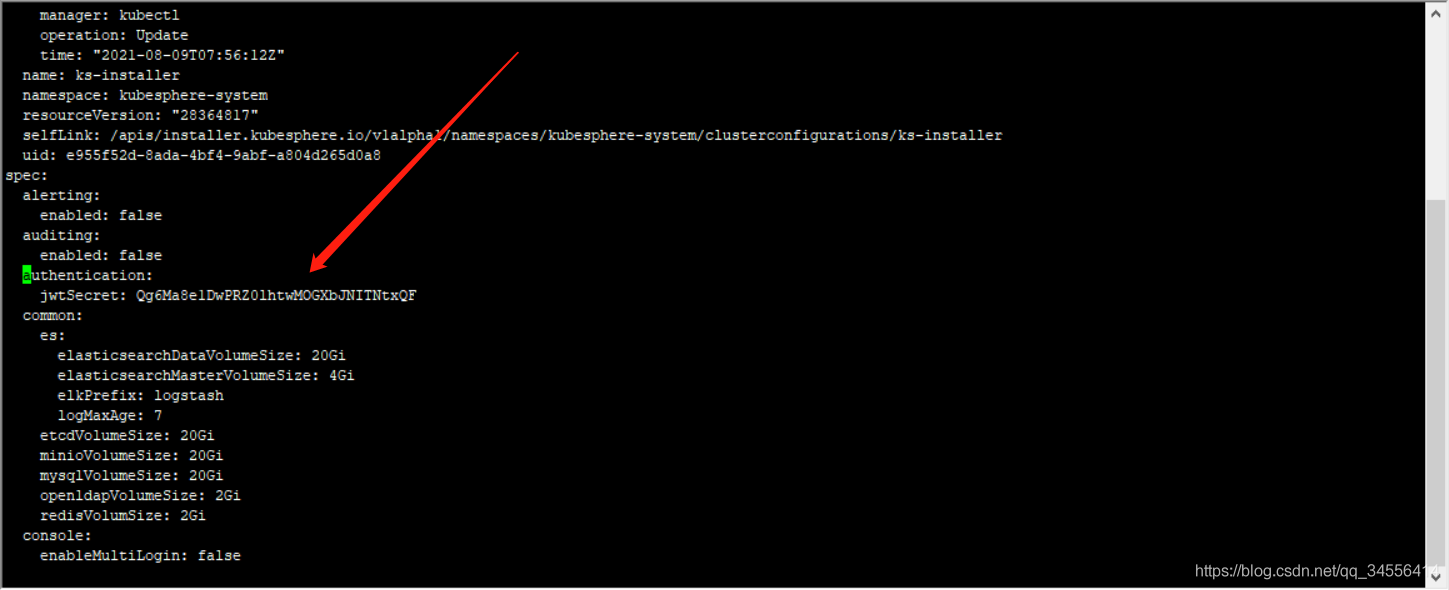

To manage a Member cluster from the Host cluster, both clusters must use the same jwtSecret. So first run the following command on the Host cluster to get it. The output may look like this:

```shell
[root@master01 ~]# kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret
jwtSecret: "Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF"
```

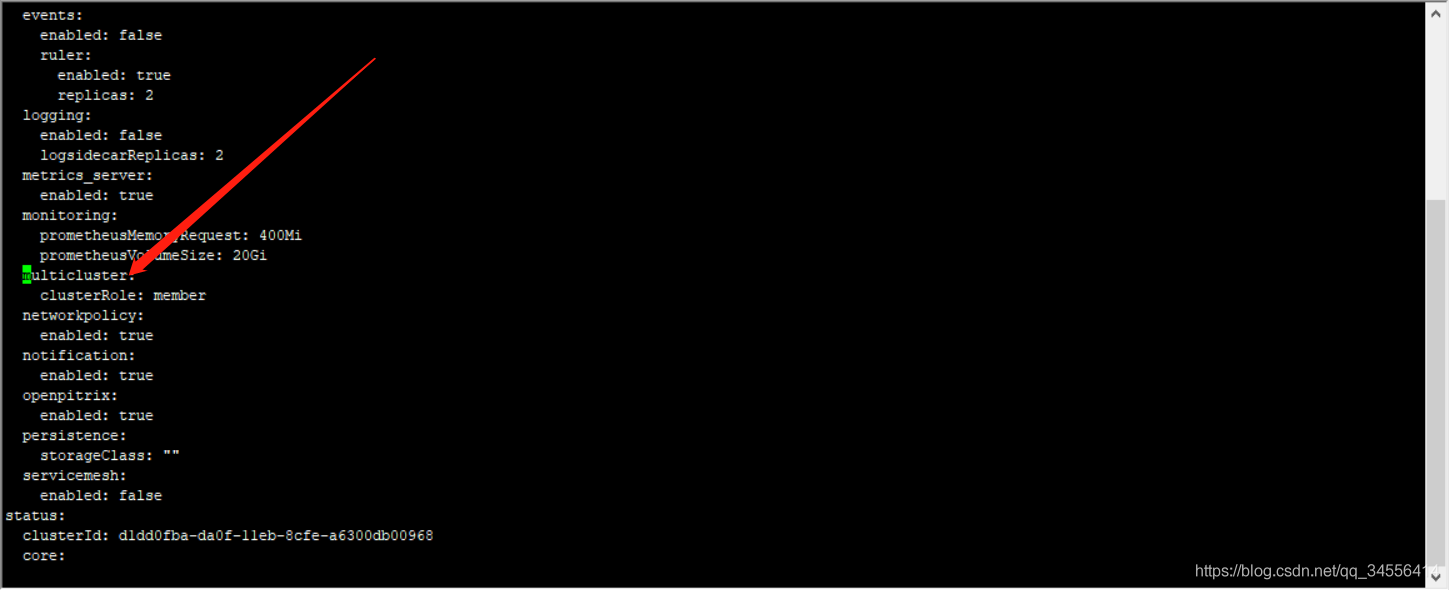

If a standalone KubeSphere cluster is already installed, edit its cluster configuration and set `clusterRole` to `member`.

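- Option A - Using the web console:

Log in to the console with the admin account, go to CRDs on the Cluster Management page, enter the keyword ClusterConfiguration, and open its details page. Edit the YAML of ks-installer.

- Option B - Using kubectl (on the Member cluster):

```shell
[root@master ~]# kubectl edit cc ks-installer -n kubesphere-system
```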

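In the ks-installer YAML, enter the jwtSecret obtained from the Host cluster above (field `jwtSecret` under `authentication`).

Set `clusterRole` (under `multicluster`) to `member`, then click Update (if you use the web console) to apply the change.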
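The two Member-side edits can also be applied in one merge patch. A sketch, assuming the KubeSphere 3.x fields `spec.authentication.jwtSecret` and `spec.multicluster.clusterRole`; `configure_member` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: set the Host's jwtSecret and clusterRole=member in one merge patch.
# Field paths assume the KubeSphere 3.x ClusterConfiguration layout.
# configure_member is a hypothetical helper, not part of KubeSphere.
configure_member() {
  local secret="$1"   # jwtSecret copied from the Host cluster
  if [ -z "$secret" ]; then
    echo "usage: configure_member <host-jwtSecret>" >&2
    return 1
  fi
  kubectl -n kubesphere-system patch cc ks-installer --type merge \
    -p "{\"spec\":{\"authentication\":{\"jwtSecret\":\"${secret}\"},\"multicluster\":{\"clusterRole\":\"member\"}}}"
}

# On the Member cluster, with the value read from the Host cluster:
# configure_member Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF
```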

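As with the Host cluster, you can follow the installation logs with the same `kubectl logs` command; wait a moment, and once the Member cluster is ready the log shows a success message. Also make sure the following kubefed pods on the Host cluster (master01) are running normally: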

```shell
[root@master01 ~]# kubectl get pod -n kube-federation-system
NAME                                          READY   STATUS    RESTARTS   AGE
kubefed-admission-webhook-644cfd765-58xgh     1/1     Running   0          3d20h
kubefed-controller-manager-6bd57d69bd-5vf5f   1/1     Running   0          3d20h
kubefed-controller-manager-6bd57d69bd-jkhsb   1/1     Running   0          3d20h
```
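Checking the pod list by eye works for three pods; a small filter makes the check scriptable. This sketch assumes the default `kubectl get pod` columns (NAME READY STATUS RESTARTS AGE) and treats a pod as healthy when it is Running with all containers ready, or Completed; `not_ready_pods` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print the names of pods that are not fully ready.
# Reads default `kubectl get pod` output (header line first) on stdin.
not_ready_pods() {
  awk 'NR > 1 {
    if ($3 == "Completed") next          # finished Job pods are fine
    split($2, r, "/")                    # READY column, e.g. 0/1
    if (r[1] != r[2] || $3 != "Running") print $1
  }'
}

# Usage: empty output means every kubefed pod is running and ready.
# kubectl get pod -n kube-federation-system | not_ready_pods
```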

Importing the Member cluster

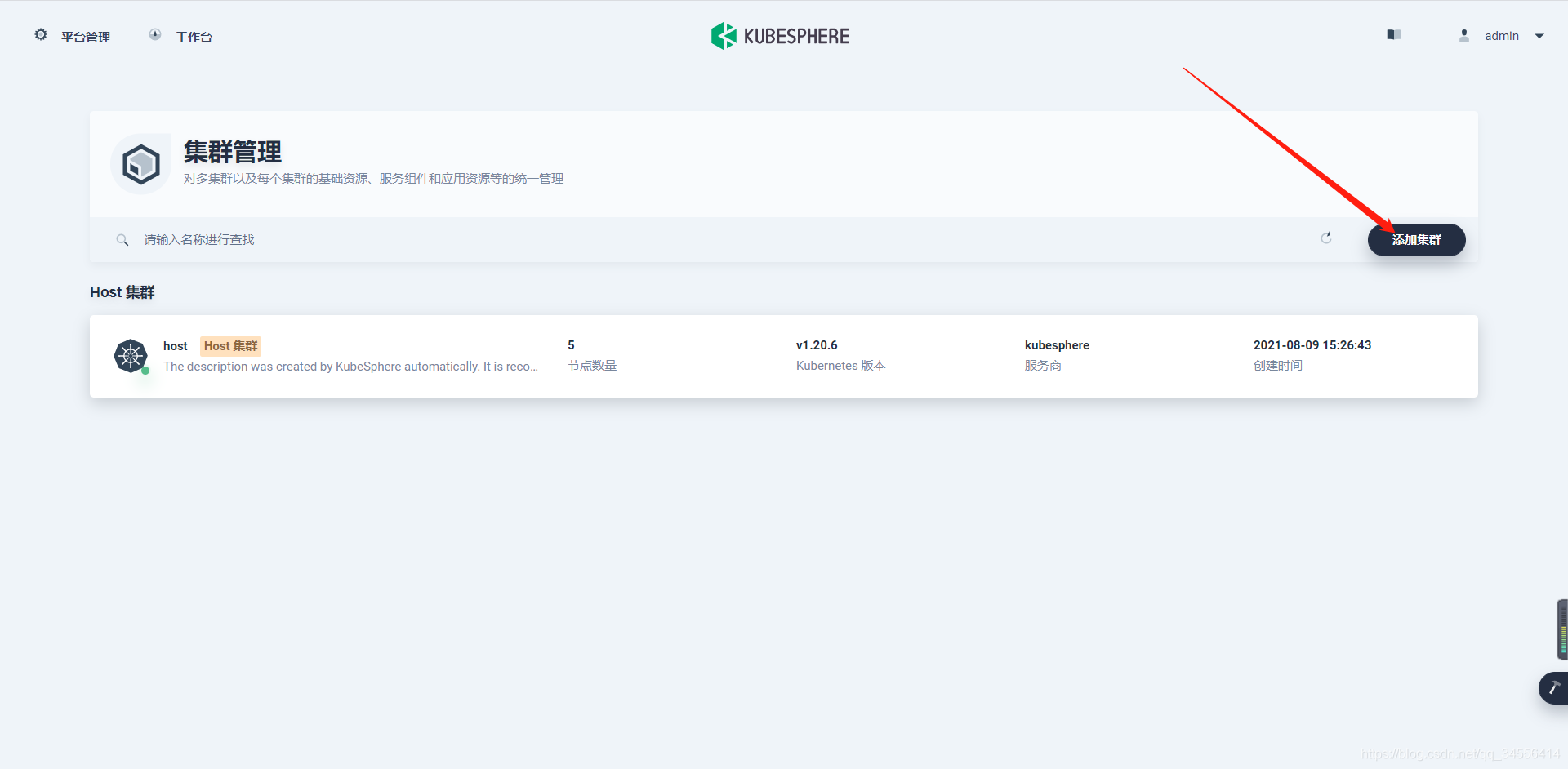

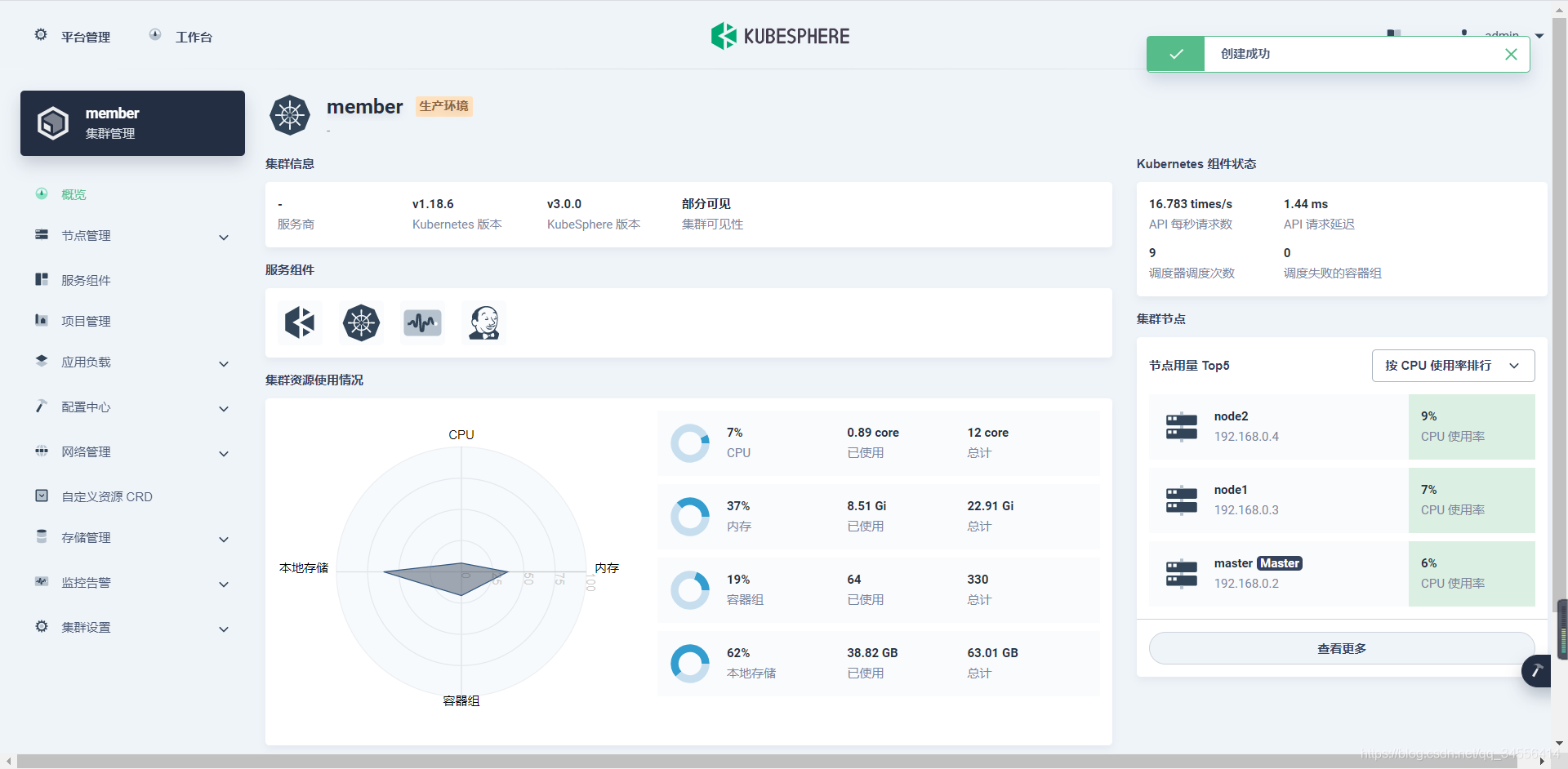

1. Log in to the KubeSphere console as admin, go to the Cluster Management page, and click Add Cluster.

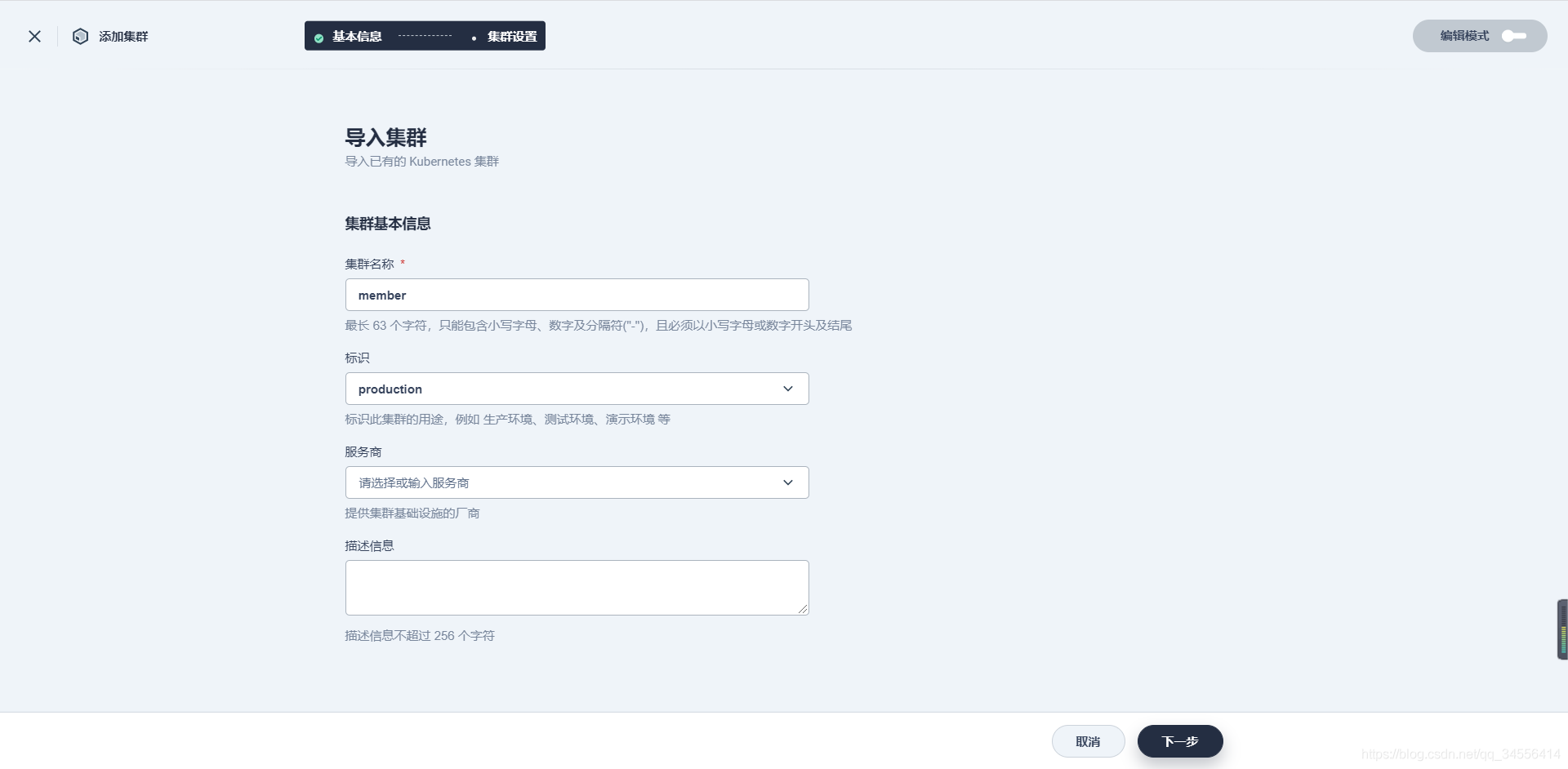

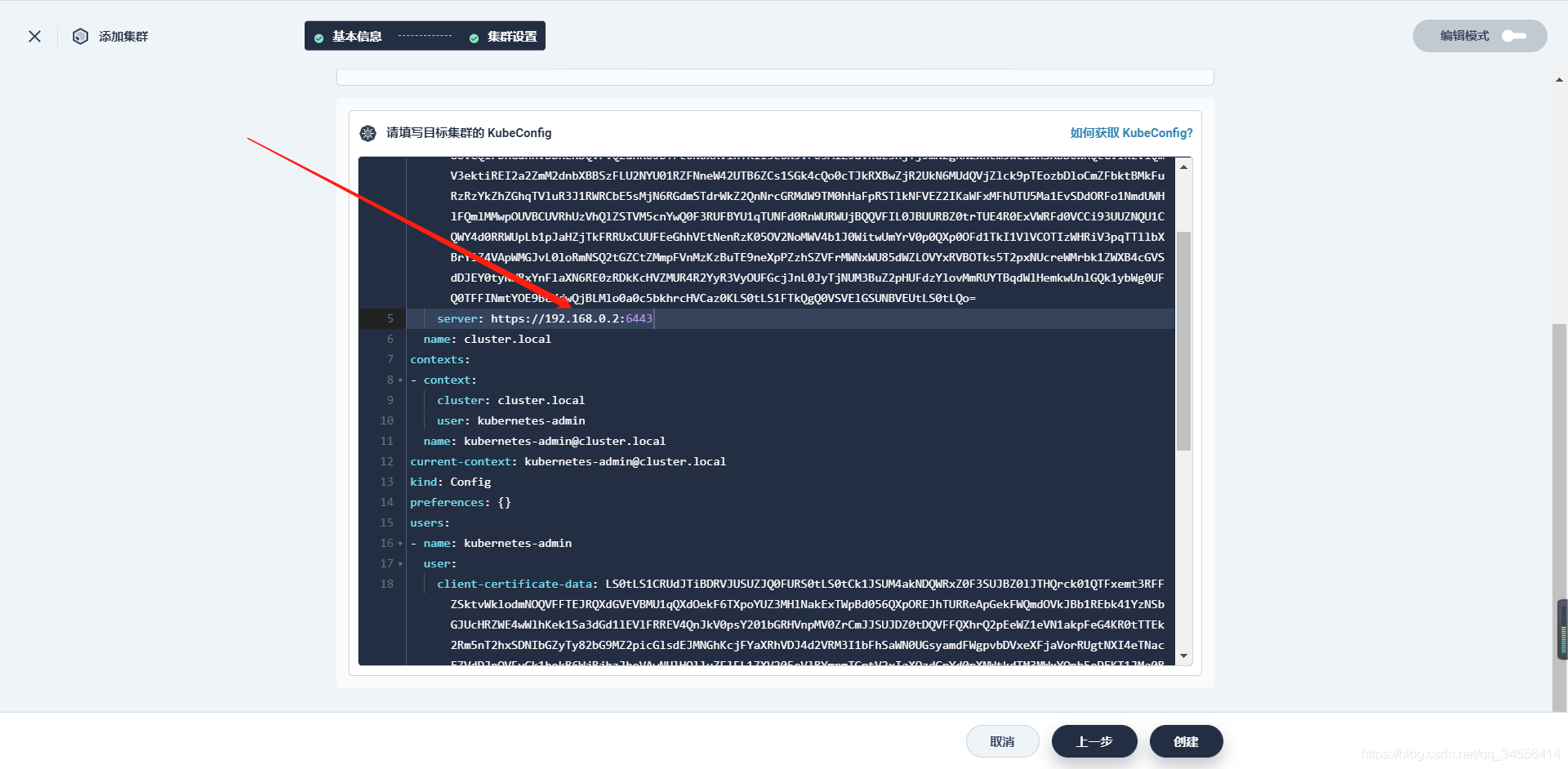

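2. On the Import Cluster page, enter the basic information of the cluster to import. You can also click Edit Mode in the upper-right corner to view and edit the basic information in YAML format. When you are done, click Next. (In my case the cluster had to be named `member`; other names made the import fail.)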

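3. Under Connection Method, select Direct connection to Kubernetes cluster, copy the KubeConfig of the Member cluster, and paste it into the text box. You can also click Edit Mode in the upper-right corner to edit the Member cluster's KubeConfig in YAML format.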

```shell
[root@master ~]# cd .kube/
[root@master .kube]# ls
cache config http-cache
[root@master .kube]# cat config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <base64-encoded CA certificate, omitted>
    server: https://lb.kubesphere.local:6443
  name: cluster.local
contexts:
- context:
    cluster: cluster.local
    user: kubernetes-admin
  name: kubernetes-admin@cluster.local
current-context: kubernetes-admin@cluster.local
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: <base64-encoded client certificate, omitted>
    client-key-data: <base64-encoded private key, omitted>
[root@master .kube]# kubectl get ep
NAME             ENDPOINTS          AGE
fuseim.pri-ifs   <none>             84d
kubernetes       192.168.0.2:6443   84d
```

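Make sure every node of the Host cluster can reach the `server` address in the KubeConfig.

I have only one master here (the `kubernetes` endpoint above, 192.168.0.2:6443); with a multi-master setup, provide the VIP and port instead.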
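To verify this from a Host cluster node, a quick reachability probe against the Member apiserver can help. A sketch, assuming `curl` is installed on the node; `check_apiserver` is hypothetical, `-k` skips certificate checks so only the network path is tested, and `/version` is used because Kubernetes normally serves it to anonymous clients (a 401 or 403 would still prove the address is reachable).

```shell
#!/usr/bin/env bash
# Sketch: run on a Host cluster node to check that an apiserver address answers.
# -k skips TLS verification, so this tests reachability only, not trust.
# curl reports HTTP code 000 when no connection could be made.
check_apiserver() {
  local server="${1%/}"   # e.g. https://192.168.0.2:6443
  local code
  code="$(curl -k -s -o /dev/null -w '%{http_code}' --connect-timeout 5 "${server}/version")"
  if [ -z "$code" ] || [ "$code" = "000" ]; then
    echo "unreachable: ${server}" >&2
    return 1
  fi
  echo "reachable: ${server} (HTTP ${code})"
}

# Usage (address taken from `kubectl get ep` on the Member cluster):
# check_apiserver https://192.168.0.2:6443
```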

4. Click Create and wait for the cluster initialization to complete.

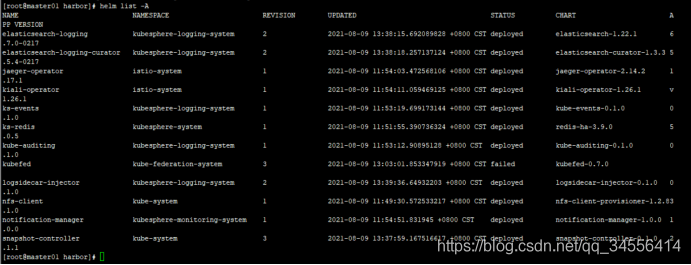

Pitfalls encountered during the import

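Error while preparing the Host cluster: a kubefed pod could not pull its image because the tag did not exist in the image registry, so I manually retagged a local image and pushed it to the registry.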

```shell
[root@master01 ~]# kubectl get pod -n kube-federation-system
NAME                                          READY   STATUS             RESTARTS   AGE
kubefed-43ozaylubp-7qr6w                      0/1     ImagePullBackOff   0          37m
kubefed-admission-webhook-644cfd765-sfctd     1/1     Running            0          131m
kubefed-controller-manager-6d7b985fc5-4tg84   1/1     Running            0          131m
kubefed-controller-manager-6d7b985fc5-8zmz7   1/1     Running            0          131m
[root@master01 ~]# kubectl describe pod kubefed-43ozaylubp-7qr6w -n kube-federation-system
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal Scheduled 38m default-scheduler Successfully assigned kube-federation-system/kubefed-43ozaylubp-7qr6w to node01
  Normal SandboxChanged 38m (x5 over 38m) kubelet Pod sandbox changed, it will be killed and re-created.
  Warning Failed 37m (x2 over 38m) kubelet Error: ErrImagePull
  Normal Pulling 37m (x3 over 38m) kubelet Pulling image "192.168.0.14/kubesphere/kubectl:v1.19.0"
  Warning Failed 37m (x3 over 38m) kubelet Failed to pull image "192.168.0.14/kubesphere/kubectl:v1.19.0": rpc error: code = Unknown desc = Error response from daemon: unknown: artifact kubesphere/kubectl:v1.19.0 not found
  Normal BackOff 13m (x114 over 38m) kubelet Back-off pulling image "192.168.0.14/kubesphere/kubectl:v1.19.0"
  Warning Failed 2m58s (x158 over 38m) kubelet Error: ImagePullBackOff
```
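When a pod is stuck in ImagePullBackOff, the missing image reference is buried in the events. A sketch that extracts it from `kubectl describe pod` output, assuming the kubelet event wording `Failed to pull image "..."` shown above; `failed_images` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print each image named in a "Failed to pull image" event once.
# Reads `kubectl describe pod` output on stdin.
failed_images() {
  grep -o 'Failed to pull image "[^"]*"' |
    sed 's/^Failed to pull image "\(.*\)"$/\1/' |
    sort -u
}

# Usage:
# kubectl describe pod kubefed-43ozaylubp-7qr6w -n kube-federation-system | failed_images
```

The printed reference (here `192.168.0.14/kubesphere/kubectl:v1.19.0`) is the tag to look for in the registry.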

The kubefed Helm release is in the failed state:

```shell
[root@master01 harbor]# helm list -A
NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
elasticsearch-logging kubesphere-logging-system 2 2021-08-09 13:38:15.692089828 +0800 CST deployed elasticsearch-1.22.1 6.7.0-0217
elasticsearch-logging-curator kubesphere-logging-system 2 2021-08-09 13:38:18.257137124 +0800 CST deployed elasticsearch-curator-1.3.3 5.5.4-0217
jaeger-operator istio-system 1 2021-08-09 11:54:03.472568106 +0800 CST deployed jaeger-operator-2.14.2 1.17.1
kiali-operator istio-system 1 2021-08-09 11:54:11.059469125 +0800 CST deployed kiali-operator-1.26.1 v1.26.1
ks-events kubesphere-logging-system 1 2021-08-09 11:53:19.699173144 +0800 CST deployed kube-events-0.1.0 0.1.0
ks-redis kubesphere-system 1 2021-08-09 11:51:55.390736324 +0800 CST deployed redis-ha-3.9.0 5.0.5
kube-auditing kubesphere-logging-system 1 2021-08-09 11:53:12.90895128 +0800 CST deployed kube-auditing-0.1.0 0.1.0
kubefed kube-federation-system 3 2021-08-09 13:03:01.853347919 +0800 CST failed kubefed-0.7.0
logsidecar-injector kubesphere-logging-system 2 2021-08-09 13:39:36.64932203 +0800 CST deployed logsidecar-injector-0.1.0 0.1.0
nfs-client kube-system 1 2021-08-09 11:49:30.572533217 +0800 CST deployed nfs-client-provisioner-1.2.8 3.1.0
notification-manager kubesphere-monitoring-system 1 2021-08-09 11:54:51.831945 +0800 CST deployed notification-manager-1.0.0 1.0.0
snapshot-controller kube-system 3 2021-08-09 13:37:59.167516617 +0800 CST deployed snapshot-controller-0.1.0 2.1.1
```

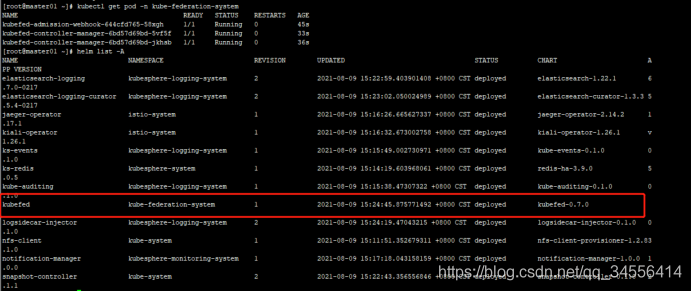

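Manually retag the image and push it to the registry, then uninstall the failed kubefed release and delete the ks-installer pod so the installer runs again: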

```shell
[root@master01 harbor]# docker tag 192.168.0.14/kubesphere/kubectl:v1.19.1 192.168.0.14/kubesphere/kubectl:v1.19.0
[root@master01 harbor]# docker push 192.168.0.14/kubesphere/kubectl:v1.19.0
The push refers to repository [192.168.0.14/kubesphere/kubectl]
82c95447c0ab: Layer already exists
a37b661028f1: Layer already exists
9fb3aa2f8b80: Layer already exists
v1.19.0: digest: sha256:ad61643208ccdc01fcd6662557bfdc7e52fd64b6e178e42122bb71e0dcd86c74 size: 947
[root@master01 harbor]# helm delete kubefed -n kube-federation-system
release "kubefed" uninstalled
[root@master01 harbor]# kubectl delete pod ks-installer-b4cb495c9-462bg -n kubesphere-system
pod "ks-installer-b4cb495c9-462bg" deleted
```
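The recovery steps above can be collected into one function. A sketch using the image names from this environment; tagging v1.19.1 as v1.19.0 serves a different kubectl build under the expected tag, which worked here but remains a workaround. `helm uninstall` is the Helm 3 name for `helm delete`, and selecting ks-installer by the `app=ks-install` label avoids copying the pod name. `recover_kubefed`, `SRC_IMAGE` and `DST_IMAGE` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch of the manual recovery above; image names are from this environment.
SRC_IMAGE=192.168.0.14/kubesphere/kubectl:v1.19.1
DST_IMAGE=192.168.0.14/kubesphere/kubectl:v1.19.0

recover_kubefed() {
  # Publish the existing image under the tag the kubefed chart expects.
  docker tag "$SRC_IMAGE" "$DST_IMAGE" || return 1
  docker push "$DST_IMAGE" || return 1
  # Remove the failed release so ks-installer installs it again.
  helm uninstall kubefed -n kube-federation-system || return 1
  # Recreate ks-installer, selected by label rather than by pod name.
  kubectl -n kubesphere-system delete pod -l app=ks-install
}
```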

Afterwards the kubefed pods are running and the kubefed release is deployed:

```shell
[root@master01 ~]# kubectl get pod -n kube-federation-system
NAME                                          READY   STATUS    RESTARTS   AGE
kubefed-admission-webhook-644cfd765-58xgh     1/1     Running   0          45s
kubefed-controller-manager-6bd57d69bd-5vf5f   1/1     Running   0          33s
kubefed-controller-manager-6bd57d69bd-jkhsb   1/1     Running   0          36s
[root@master01 ~]# helm list -A
NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
elasticsearch-logging kubesphere-logging-system 2 2021-08-09 15:22:59.403901408 +0800 CST deployed elasticsearch-1.22.1 6.7.0-0217
elasticsearch-logging-curator kubesphere-logging-system 2 2021-08-09 15:23:02.050024989 +0800 CST deployed elasticsearch-curator-1.3.3 5.5.4-0217
jaeger-operator istio-system 1 2021-08-09 15:16:26.665627337 +0800 CST deployed jaeger-operator-2.14.2 1.17.1
kiali-operator istio-system 1 2021-08-09 15:16:32.673002758 +0800 CST deployed kiali-operator-1.26.1 v1.26.1
ks-events kubesphere-logging-system 1 2021-08-09 15:15:49.002730971 +0800 CST deployed kube-events-0.1.0 0.1.0
ks-redis kubesphere-system 1 2021-08-09 15:14:19.603968061 +0800 CST deployed redis-ha-3.9.0 5.0.5
kube-auditing kubesphere-logging-system 1 2021-08-09 15:15:38.47307322 +0800 CST deployed kube-auditing-0.1.0 0.1.0
kubefed kube-federation-system 1 2021-08-09 15:24:45.875771492 +0800 CST deployed kubefed-0.7.0
logsidecar-injector kubesphere-logging-system 2 2021-08-09 15:24:19.47043215 +0800 CST deployed logsidecar-injector-0.1.0 0.1.0
nfs-client kube-system 1 2021-08-09 15:11:51.352679311 +0800 CST deployed nfs-client-provisioner-1.2.8 3.1.0
notification-manager kubesphere-monitoring-system 1 2021-08-09 15:17:18.043158159 +0800 CST deployed notification-manager-1.0.0 1.0.0
snapshot-controller kube-system 2 2021-08-09 15:22:43.356556846 +0800 CST deployed snapshot-controller-0.1.0 2.1.1
```

Error when joining the Member cluster to the Host cluster

For jwt-related errors, check whether the jwtSecret in the kubesphere-config ConfigMap is identical on both clusters:

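```shell
[root@master01 ~]# kubectl describe configmap kubesphere-config -n kubesphere-system | grep jwt
jwtSecret: "Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF"
[root@master .kube]# kubectl describe configmap kubesphere-config -n kubesphere-system | grep jwt
jwtSecret: "wV3mj25yM7O4A4C30rb2lcbpQiikfAst"
```

Here the Host cluster (master01) and the Member cluster (master) return different values, so the Member cluster's jwtSecret has to be set to the Host cluster's value, as described in Preparing the Member cluster.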
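To compare the two values from one machine instead of logging in to each master, a sketch like the following could be used. It assumes one kubeconfig file per cluster (the paths are placeholders) and a `jwtSecret:` line inside `kubesphere.yaml`, as in the output above; `jwt_of` and `compare_jwt` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: compare jwtSecret between the Host and Member clusters.
# $1/$2 are kubeconfig paths; the secret is read from the kubesphere.yaml key
# of the kubesphere-config ConfigMap (the dot in the key is escaped in JSONPath).
jwt_of() {
  kubectl --kubeconfig "$1" -n kubesphere-system get cm kubesphere-config \
      -o jsonpath='{.data.kubesphere\.yaml}' |
    sed -n 's/^[[:space:]]*jwtSecret:[[:space:]]*"\{0,1\}\([^"]*\)"\{0,1\}[[:space:]]*$/\1/p' |
    head -n 1
}

compare_jwt() {
  local host member
  host="$(jwt_of "$1")"
  member="$(jwt_of "$2")"
  if [ -n "$host" ] && [ "$host" = "$member" ]; then
    echo "jwtSecret matches"
  else
    echo "jwtSecret mismatch: host=${host} member=${member}" >&2
    return 1
  fi
}

# Usage (hypothetical paths):
# compare_jwt ~/host.kubeconfig ~/member.kubeconfig
```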

For reference, the full kubesphere-config ConfigMap on the Host cluster:

```shell
[root@master01 ~]# kubectl get configmap -n kubesphere-system
NAME                                    DATA   AGE
istio-ca-root-cert                      1      7d20h
ks-console-config                       1      7d20h
ks-controller-manager-leader-election   0      7d19h
ks-router-config                        2      7d19h
kube-root-ca.crt                        1      7d20h
kubesphere-config                       1      7d19h
redis-ha-configmap                      5      7d20h
sample-bookinfo                         1      7d20h
[root@master01 ~]# kubectl describe configmap kubesphere-config -n kubesphere-system
Name:         kubesphere-config
Namespace:    kubesphere-system
Labels:       <none>
Annotations:  <none>

Data
====
kubesphere.yaml:
----
authentication:
  authenticateRateLimiterMaxTries: 10
  authenticateRateLimiterDuration: 10m0s
  loginHistoryRetentionPeriod: 168h
  maximumClockSkew: 10s
  multipleLogin: True
  kubectlImage: 192.168.0.14/kubesphere/kubectl:v1.20.0
  jwtSecret: "Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF"
redis:
  host: redis.kubesphere-system.svc
  port: 6379
  password: ""
  db: 0
network:
  enableNetworkPolicy: true
  ippoolType: calico
  weaveScopeHost: weave-scope-app.weave
servicemesh:
  istioPilotHost: http://istiod.istio-system.svc:8080/version
  jaegerQueryHost: http://jaeger-query.istio-system.svc:16686
  servicemeshPrometheusHost: http://prometheus-k8s.kubesphere-monitoring-system.svc:9090
  kialiQueryHost: http://kiali.istio-system:20001
multicluster:
  enable: true
  agentImage: 192.168.0.14/kubesphere/tower:v0.2.0
  proxyPublishService: tower.kubesphere-system.svc
monitoring:
  endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
logging:
  host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
  indexPrefix: ks-logstash-log
events:
  host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
  indexPrefix: ks-logstash-events
auditing:
  enable: true
  host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
  indexPrefix: ks-logstash-auditing
alerting:
  prometheusEndpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
  thanosRulerEndpoint: http://thanos-ruler-operated.kubesphere-monitoring-system.svc:10902
  thanosRuleResourceLabels: thanosruler=thanos-ruler,role=thanos-alerting-rules

Events:  <none>
[root@master01 ~]# kubectl get configmap kubesphere-config -n kubesphere-system -o yaml
apiVersion: v1
data:
  kubesphere.yaml: |
    authentication:
      authenticateRateLimiterMaxTries: 10
      authenticateRateLimiterDuration: 10m0s
      loginHistoryRetentionPeriod: 168h
      maximumClockSkew: 10s
      multipleLogin: True
      kubectlImage: 192.168.0.14/kubesphere/kubectl:v1.20.0
      jwtSecret: "Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF"
    redis:
      host: redis.kubesphere-system.svc
      port: 6379
      password: ""
      db: 0
    network:
      enableNetworkPolicy: true
      ippoolType: calico
      weaveScopeHost: weave-scope-app.weave
    servicemesh:
      istioPilotHost: http://istiod.istio-system.svc:8080/version
      jaegerQueryHost: http://jaeger-query.istio-system.svc:16686
      servicemeshPrometheusHost: http://prometheus-k8s.kubesphere-monitoring-system.svc:9090
      kialiQueryHost: http://kiali.istio-system:20001
    multicluster:
      enable: true
      agentImage: 192.168.0.14/kubesphere/tower:v0.2.0
      proxyPublishService: tower.kubesphere-system.svc
    monitoring:
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
    logging:
      host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
      indexPrefix: ks-logstash-log
    events:
      host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
      indexPrefix: ks-logstash-events
    auditing:
      enable: true
      host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200
      indexPrefix: ks-logstash-auditing
    alerting:
      prometheusEndpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      thanosRulerEndpoint: http://thanos-ruler-operated.kubesphere-monitoring-system.svc:10902
      thanosRuleResourceLabels: thanosruler=thanos-ruler,role=thanos-alerting-rules
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"kubesphere.yaml":"authentication:\n authenticateRateLimiterMaxTries: 10\n authenticateRateLimiterDuration: 10m0s\n loginHistoryRetentionPeriod: 168h\n maximumClockSkew: 10s\n multipleLogin: True\n kubectlImage: 192.168.0.14/kubesphere/kubectl:v1.20.0\n jwtSecret: \"Qg6Ma8e1DwPRZ0lhtwMOGXbJNITNtxQF\"\n\n\nredis:\n host: redis.kubesphere-system.svc\n port: 6379\n password: \"\"\n db: 0\n\n\n\nnetwork:\n enableNetworkPolicy: true\n ippoolType: calico\n weaveScopeHost: weave-scope-app.weave\nservicemesh:\n istioPilotHost: http://istiod.istio-system.svc:8080/version\n jaegerQueryHost: http://jaeger-query.istio-system.svc:16686\n servicemeshPrometheusHost: http://prometheus-k8s.kubesphere-monitoring-system.svc:9090\n kialiQueryHost: http://kiali.istio-system:20001\nmulticluster:\n enable: true\n agentImage: 192.168.0.14/kubesphere/tower:v0.2.0\n proxyPublishService: tower.kubesphere-system.svc\nmonitoring:\n endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090\nlogging:\n host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200\n indexPrefix: ks-logstash-log\nevents:\n host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200\n indexPrefix: ks-logstash-events\nauditing:\n enable: true\n host: http://elasticsearch-logging-data.kubesphere-logging-system.svc:9200\n indexPrefix: ks-logstash-auditing\n\nalerting:\n prometheusEndpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090\n thanosRulerEndpoint: http://thanos-ruler-operated.kubesphere-monitoring-system.svc:10902\n thanosRuleResourceLabels: thanosruler=thanos-ruler,role=thanos-alerting-rules\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"kubesphere-config","namespace":"kubesphere-system"}}
  creationTimestamp: "2021-08-09T07:17:35Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:kubesphere.yaml: {}
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
    manager: kubectl
    operation: Update
    time: "2021-08-09T07:17:35Z"
  name: kubesphere-config
  namespace: kubesphere-system
  resourceVersion: "8563"
  uid: e86492f2-d28f-4b8e-bcfb-1b19b193661e
```

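Finally, don't forget to change the apiserver address. The `server` in the pasted KubeConfig (`https://lb.kubesphere.local:6443`) may not resolve to the Member cluster's apiserver when seen from the Host cluster, so replace it with an address the Host cluster can reach, such as the Member cluster's `kubernetes` endpoint:

```shell
[root@master .kube]# kubectl get ep
NAME             ENDPOINTS          AGE
fuseim.pri-ifs   <none>             80d
kubernetes       192.168.0.2:6443   81d
```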
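The address replacement can be scripted before pasting the KubeConfig into the import page. A sketch, assuming a kubeconfig with a single cluster entry like the one shown earlier (every `server:` line is rewritten); `set_kubeconfig_server` is hypothetical, and with multiple masters the VIP and port should be used instead of the endpoint IP.

```shell
#!/usr/bin/env bash
# Sketch: replace the server address in a kubeconfig read from stdin.
# Every "server:" line is rewritten, so use it on single-cluster kubeconfigs.
set_kubeconfig_server() {
  local new_server="$1"   # e.g. https://192.168.0.2:6443
  # '#' is the sed delimiter because the URL contains '/'.
  sed "s#^\([[:space:]]*server:[[:space:]]*\).*#\1${new_server}#"
}

# On the Member cluster, the kubernetes endpoint address can be read with:
# kubectl get ep kubernetes -o jsonpath='{.subsets[0].addresses[0].ip}:{.subsets[0].ports[0].port}'
# Single-master case:
# set_kubeconfig_server https://192.168.0.2:6443 < ~/.kube/config > member.kubeconfig
```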