Getting Started with Docker

I. Lab environment setup

1. Configure the network

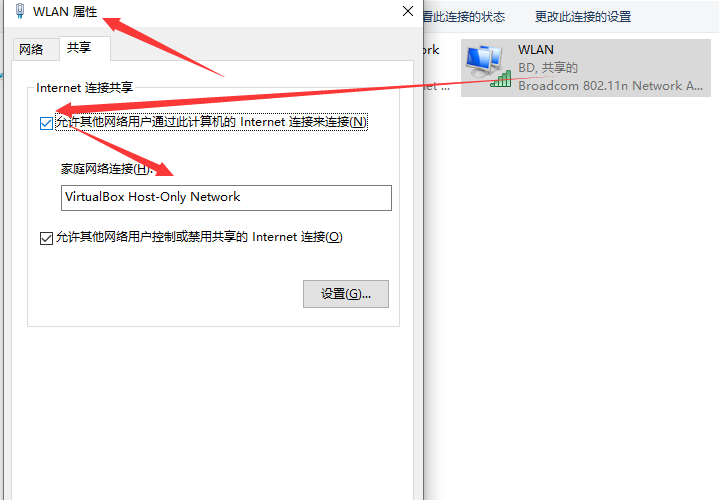

Share the external network connection (if the VM cannot ping baidu, disable sharing first, then enable it again)

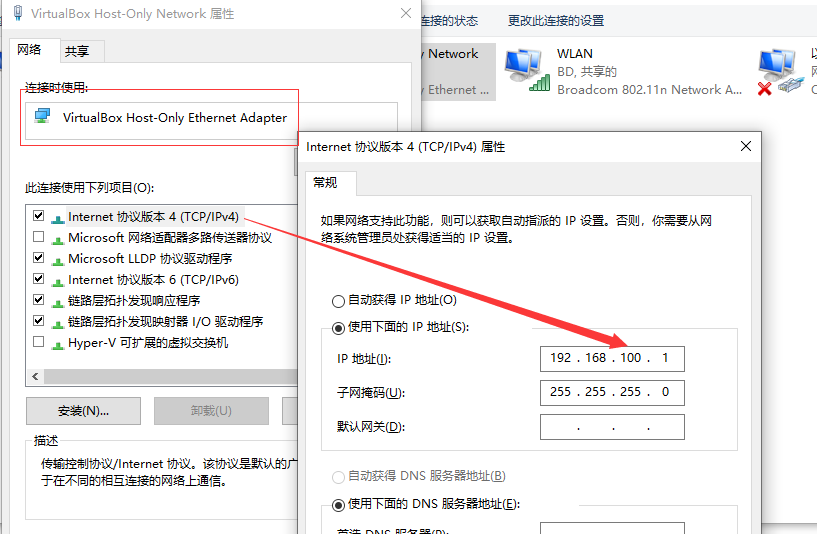

Configure the IP of the host-only adapter (if the VM cannot ping baidu, redo this step)

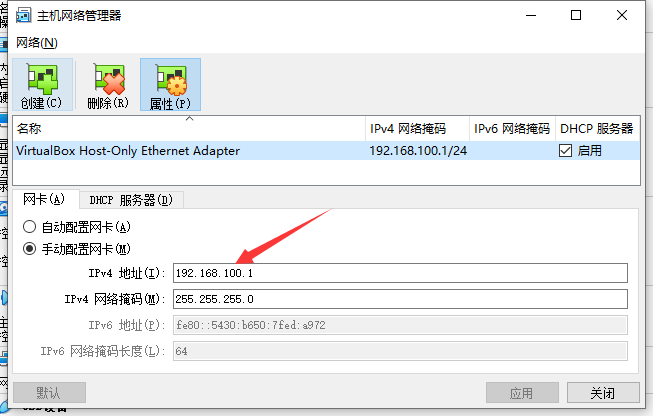

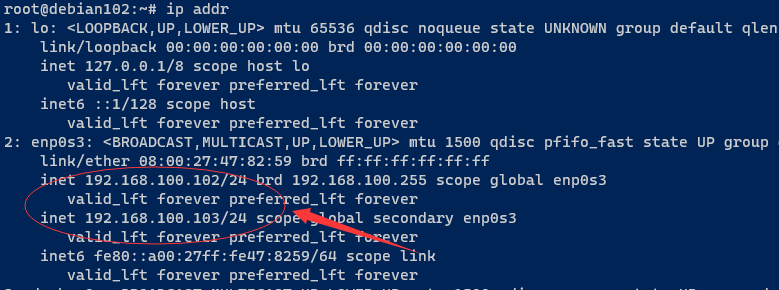

Verify that the configuration is correct

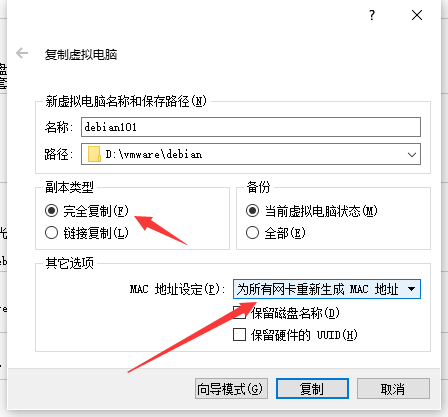

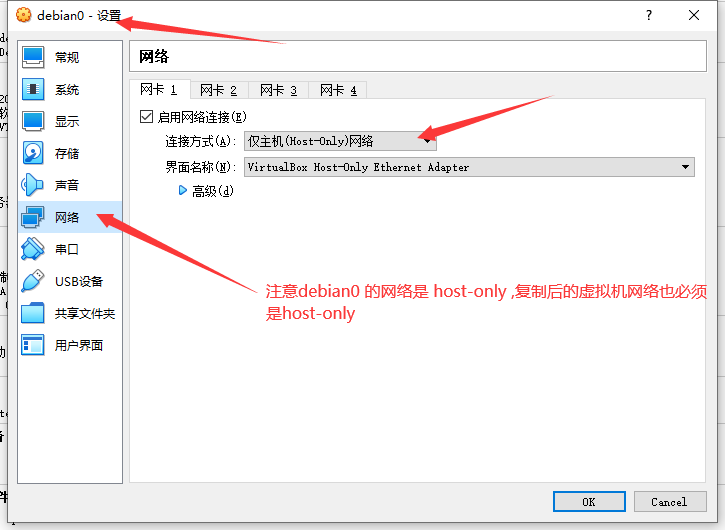

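2. Clone the virtual machine from debian0, then change the settings below

3. Change the IP

# Edit the configuration file

vi /etc/network/interfaces

with the following content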

auto enp0s3

iface enp0s3 inet static

address 192.168.100.103

netmask 255.255.255.0

gateway 192.168.100.1

# Edit the DNS resolver file

vi /etc/resolv.conf

Add the following content

nameserver 192.168.100.1
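Because every cloned VM needs the same stanza with a different address, it can help to generate it. The following is only a sketch: the function name render_interfaces is made up for illustration, and it assumes the /24 netmask used in these notes.

```shell
#!/bin/sh
# Print an /etc/network/interfaces stanza for a static address.
# Arguments: interface name, IPv4 address, gateway.
# The netmask is fixed at 255.255.255.0, as in the notes.
render_interfaces() {
    iface=$1 addr=$2 gw=$3
    printf 'auto %s\n' "$iface"
    printf 'iface %s inet static\n' "$iface"
    printf '    address %s\n' "$addr"
    printf '    netmask 255.255.255.0\n'
    printf '    gateway %s\n' "$gw"
}

# Example: the stanza for a clone that should become 192.168.100.102.
render_interfaces enp0s3 192.168.100.102 192.168.100.1
```

The output is meant to be reviewed and pasted into /etc/network/interfaces by hand; the sketch does not touch the file itself.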

4. Change the hostname

4.1 First, run the following command to set the new hostname

hostnamectl set-hostname debian101

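Note: hostnamectl prints nothing; it returns 0 on success and a non-zero exit code on failure.

4.2 Next, open /etc/hosts and replace the old hostname with the new one, as follows: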

vi /etc/hosts

127.0.0.1 localhost

127.0.0.1 debian101

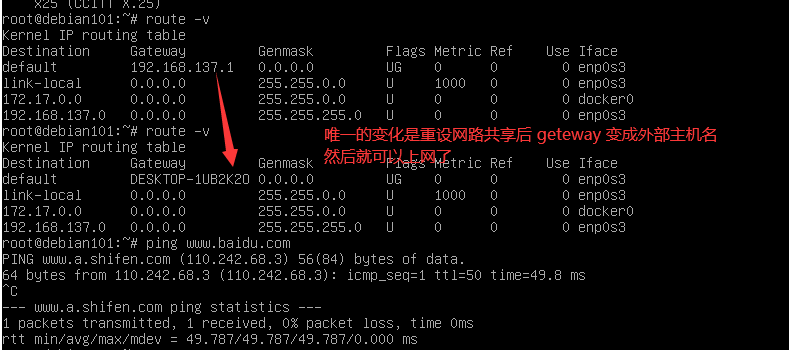

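5. Why does network sharing stop working after a reboot?

5.1 Symptom: after every reboot of the host PC, the VM can no longer reach the external network

The cause was unclear at first; I suspected a routing problem but was not sure

Check the Windows routing table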

PS C:\Users\xue> route print 192.168.100.*

===========================================================================

接口列表

13...0a 00 27 00 00 0d ......VirtualBox Host-Only Ethernet Adapter

15...82 56 f2 f1 b0 51 ......Microsoft Wi-Fi Direct Virtual Adapter

3...82 56 f2 f1 b8 51 ......Microsoft Wi-Fi Direct Virtual Adapter #2

10...80 56 f2 f1 b0 51 ......Broadcom 802.11n Network Adapter

1...........................Software Loopback Interface 1

46...00 15 5d 12 f6 e2 ......Hyper-V Virtual Ethernet Adapter

===========================================================================

IPv4 路由表

===========================================================================

活动路由:

网络目标 网络掩码 网关 接口 跃点数

192.168.100.0 255.255.255.0 在链路上 192.168.100.1 281

192.168.100.1 255.255.255.255 在链路上 192.168.100.1 281

192.168.100.255 255.255.255.255 在链路上 192.168.100.1 281

Windows hostname

PS C:\Users\xue> hostname

DESKTOP-1UB2K2O

VM routing information while the external network is reachable

-----------------------------------------------------------------------

root@debian101:~# ping www.baidu.com

PING www.a.shifen.com (110.242.68.3) 56(84) bytes of data.

64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=1 ttl=50 time=12.5 ms

root@debian101:~# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default DESKTOP-1UB2K2O 0.0.0.0 UG 0 0 0 enp0s3

link-local 0.0.0.0 255.255.0.0 U 1000 0 0 enp0s3

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.100.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s3

root@debian101:~# ping DESKTOP-1UB2K2O

PING DESKTOP-1UB2K2O (172.17.0.1) 56(84) bytes of data.

64 bytes from 172.17.0.1 (172.17.0.1): icmp_seq=1 ttl=64 time=0.075 ms

64 bytes from 172.17.0.1 (172.17.0.1): icmp_seq=2 ttl=64 time=0.083 ms

64 bytes from 172.17.0.1 (172.17.0.1): icmp_seq=3 ttl=64 time=0.085 ms

^C

--- DESKTOP-1UB2K2O ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.075/0.081/0.085/0.004 ms

root@debian101:~# ip route

default via 192.168.100.1 dev enp0s3 onlink

169.254.0.0/16 dev enp0s3 scope link metric 1000

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.100.0/24 dev enp0s3 proto kernel scope link src 192.168.100.101

-------------------------------------------------------------

Routing information after the reboot

root@debian101:~# ping www.baidu.com

ping: www.baidu.com: Temporary failure in name resolution

root@debian101:~# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 192.168.100.1 0.0.0.0 UG 0 0 0 enp0s3

link-local 0.0.0.0 255.255.0.0 U 1000 0 0 enp0s3

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.100.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s3

root@debian101:~# ip route

default via 192.168.100.1 dev enp0s3 onlink

169.254.0.0/16 dev enp0s3 scope link metric 1000

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.100.0/24 dev enp0s3 proto kernel scope link src 192.168.100.101

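5.2 Root cause

Analyzing the routing information revealed nothing abnormal.

I then suspected Windows itself.

This was hard to track down: it took a whole afternoon and more than 10 reboots.

The cause turned out to be that Windows 10 stops network sharing after every reboot. The fix is to have the sharing service start automatically after each reboot.

Reference article http://woshub.com/internet-connection-sharing-not-working-windows-reboot/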

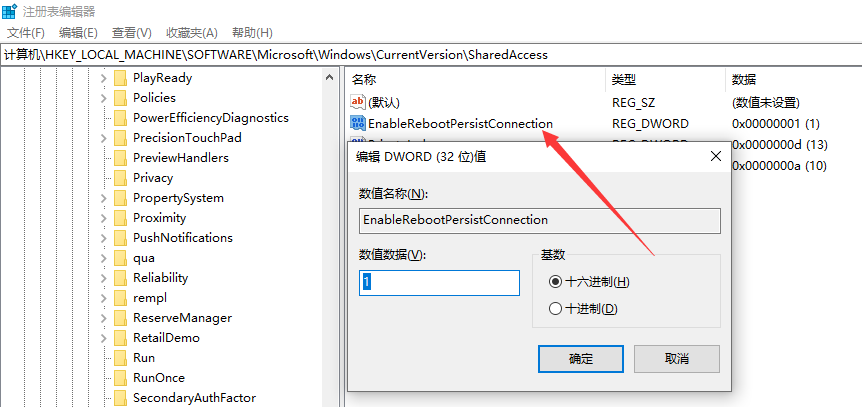

5.3 The fix

In the registry, under the following path

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\SharedAccess

create a value with

type DWORD

name EnableRebootPersistConnection

value 1

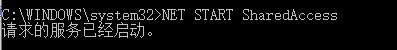

In a command prompt opened as administrator, make sure the following service is started

PS C:\WINDOWS\system32> NET START SharedAccess

Screenshots:

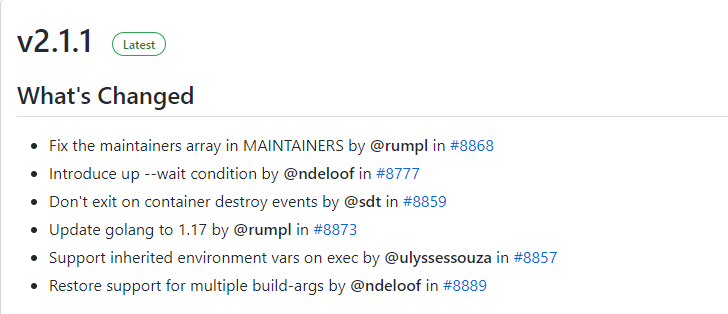

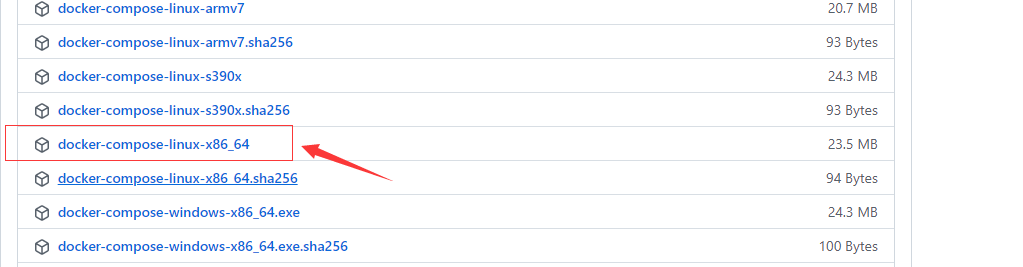

II. Installing docker-compose

1. Install docker-compose

Release page listing the current versions: https://github.com/docker/compose/releases

Download with the following command and save the binary to /usr/local/bin/docker-compose

curl -L https://github.com/docker/compose/releases/download/v2.1.1/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

The actual download URL is

root@debian101:~# echo https://github.com/docker/compose/releases/download/v2.1.1/docker-compose-`uname -s`-`uname -m`

https://github.com/docker/compose/releases/download/v2.1.1/docker-compose-Linux-x86_64

Alternatively, download it manually from https://github.com/docker/compose/releases

On my machine the file is saved as D:\vmware\debian\docker-compose-linux-x86_64_v2.1.1

2. Make it executable

chmod +x /usr/local/bin/docker-compose

3. Test run

docker-compose --version
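The download URL is just the release tag plus the output of uname, so it can be assembled for any version. A minimal sketch follows; the helper name compose_url is hypothetical, and the exact spelling of the asset name (for example upper or lower case "Linux") may differ between releases, so check it against the release page.

```shell
#!/bin/sh
# Build the release download URL for a given Compose version, OS and architecture.
compose_url() {
    version=$1 os=$2 arch=$3
    printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
        "$version" "$os" "$arch"
}

# On the machine used in these notes (Linux, x86_64) this prints the same URL
# as the echo command shown earlier.
compose_url v2.1.1 "$(uname -s)" "$(uname -m)"
```

The printed URL can then be passed to curl -L ... -o /usr/local/bin/docker-compose as in step 1.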

III. Docker basics

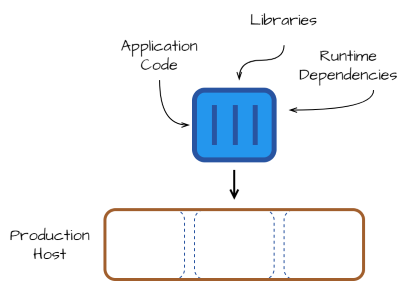

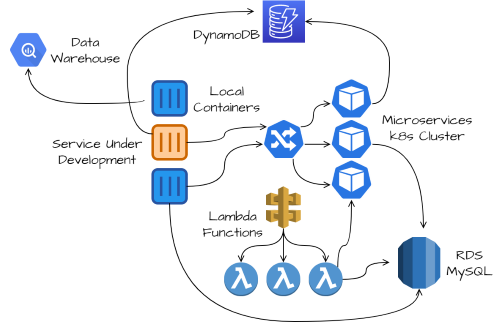

1. why docker

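1.1 How to distribute an application

exe jar

Self-contained with its own dependencies, or dependent on the operating system, CPU architecture and dynamic libraries

What you need to prepare

Provision the machine, install software, configure the environment

1.2 Packaging with containers

Simplify -> standardize -> scale

A simple rule turns application deployment into a product: if your application can be packaged as a container, it can be deployed anywhere.

A development environment that looks (at least roughly) like production has many benefits. If you deploy Docker containers in production, it makes sense to run your code in containers during development as well.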

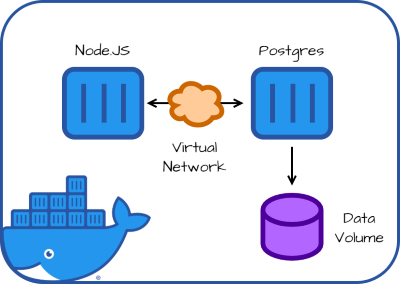

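1.3 How an application is defined today

1.3.1 Simple applications: Docker

1.3.2 Slightly more complex ones: Docker Compose

1.3.3 Microservices: k8s

1.4 References

Containers do not solve everything https://www.infoq.cn/article/AfjwTybO7MWigLMJqocekwlrd

The Fenix Project (凤凰架构), virtualized containers https://icyfenix.cn/immutable-infrastructure/container/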

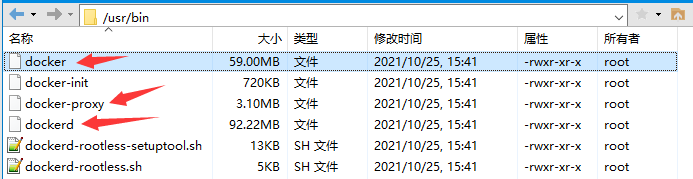

2. What is Docker?

Docker is a handful of executables written in Go

Find where docker is installed

root@debian101:~# which docker

/usr/bin/docker

env shows the Linux environment variables; the PATH variable lists the directories that are searched for executables

root@debian101:~# env

SHELL=/bin/bash

LANGUAGE=en_US:en

PWD=/root

LOGNAME=root

XDG_SESSION_TYPE=tty

MOTD_SHOWN=pam

HOME=/root

LANG=en_US.UTF-8

SSH_CONNECTION=192.168.100.1 57823 192.168.100.101 22

XDG_SESSION_CLASS=user

TERM=xterm-256color

USER=root

SHLVL=1

XDG_SESSION_ID=4

XDG_RUNTIME_DIR=/run/user/0

SSH_CLIENT=192.168.100.1 57823 22

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

SSH_TTY=/dev/pts/1

_=/usr/bin/env

The docker executables can be seen under /usr/bin

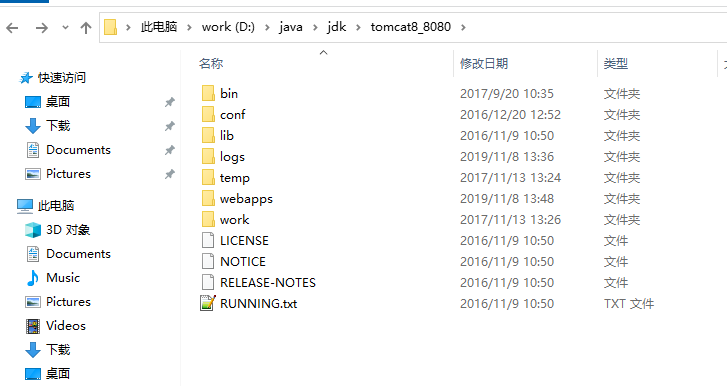

3. Installing and configuring Tomcat with Docker

Docker base commands https://docs.docker.com/engine/reference/commandline/docker/

3.1 Look at the Tomcat directory on Windows

bin holds the executables

webapps holds the WAR packages

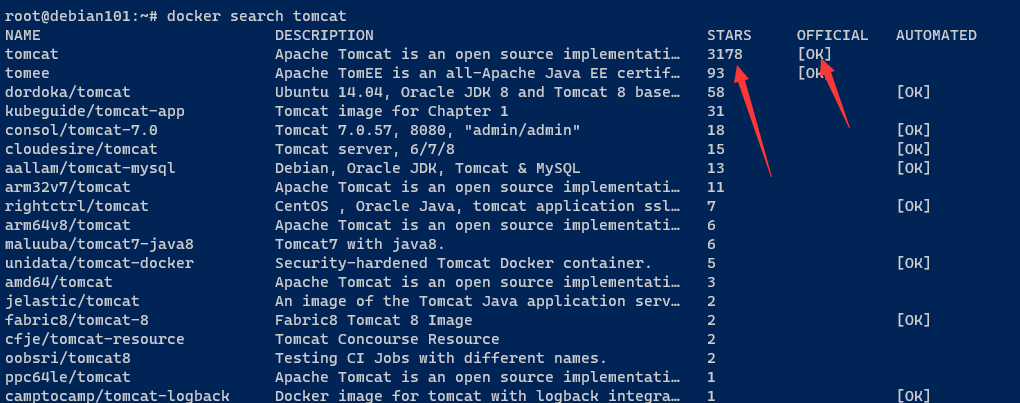

3.2 Search for an image

docker search tomcat

This command searches the official image registry for tomcat images; normally pick the one marked official

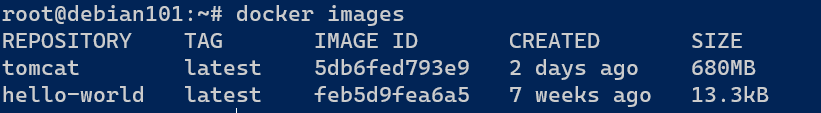

List the images already available locally

root@debian101:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hello-world latest feb5d9fea6a5 7 weeks ago 13.3kB

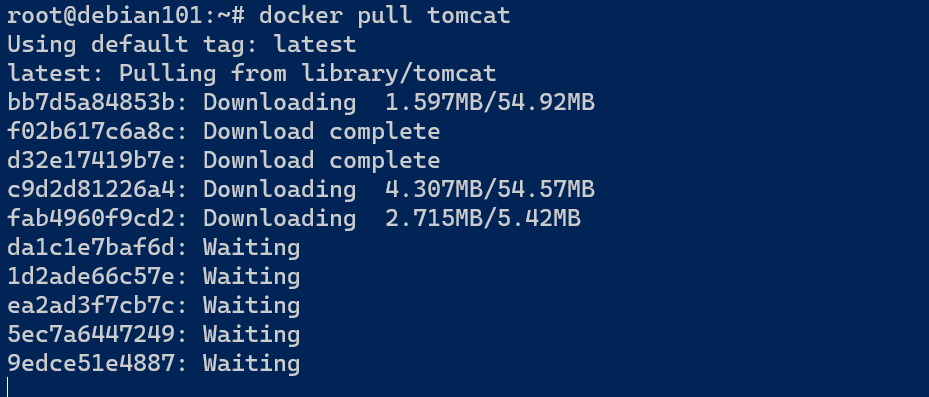

3.3 Pull the image

docker pull tomcat

3.4 Inspect the image to learn its environment; this matters most when deploying an unfamiliar image, so get fluent with it

docker inspect

root@debian101:~# docker inspect tomcat

[

{ # IMAGE ID is the short form of Id: its first 12 characters

"Id": "sha256:5db6fed793e95ca20134faf60b8476c8fb1d5091e2f7e6aff254ebba09868528",

"RepoTags": [ # repository tags, e.g. tomcat:v9

"tomcat:latest"

],

"RepoDigests": [ # used to verify that the image is complete

"tomcat@sha256:fbbe9960d4fd905b58c3f6eb6b6f4e16488927b9eab31db69578a118c9af833c"

],

"Parent": "",

"Comment": "",

"Created": "2021-11-15T20:58:46.683453708Z", # image build date

"Container": "91f5e919082f6ee3181083d521d347deb410b01a1bea49b2dcf2325d34a591ed",

"ContainerConfig": {

"Hostname": "91f5e919082f",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": { # <----- 1. exposed ports

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [ # <----- 2. the container environment

"PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/local/openjdk-11",

"LANG=C.UTF-8",

"JAVA_VERSION=11.0.13",

"CATALINA_HOME=/usr/local/tomcat",

"TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib",

"LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib",

"GPG_KEYS=A9C5DF4D22E99998D9875A5110C01C5A2F6059E7",

"TOMCAT_MAJOR=10",

"TOMCAT_VERSION=10.0.13",

"TOMCAT_SHA512=fecfe06f38ff31e31fa43c15f2566f6fcd26bb874a9b7c0533087be81d1decd97f81eefeaca7ecb5ab2b79a3ea69ed0459adff5f9d55c05f5af45f69b0725608"

],

"Cmd": [ # <----- 3. the startup command

"/bin/sh",

"-c",

"#(nop) ",

"CMD [\"catalina.sh\" \"run\"]"

],

"Image": "sha256:9f2d6de92da5523bab394466924c3a6d19e9d17c59821bd2eaddf6d71a598e9c",

"Volumes": null, # <----- 4. mapped directories (volumes)

"WorkingDir": "/usr/local/tomcat", # <----- 5. working directory

"Entrypoint": null,

"OnBuild": null,

"Labels": {}

},

"DockerVersion": "20.10.7",

"Author": "",

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/local/openjdk-11",

"LANG=C.UTF-8",

"JAVA_VERSION=11.0.13",

"CATALINA_HOME=/usr/local/tomcat",

"TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib",

"LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib",

"GPG_KEYS=A9C5DF4D22E99998D9875A5110C01C5A2F6059E7",

"TOMCAT_MAJOR=10",

"TOMCAT_VERSION=10.0.13",

"TOMCAT_SHA512=fecfe06f38ff31e31fa43c15f2566f6fcd26bb874a9b7c0533087be81d1decd97f81eefeaca7ecb5ab2b79a3ea69ed0459adff5f9d55c05f5af45f69b0725608"

],

"Cmd": [

"catalina.sh",

"run"

],

"Image": "sha256:9f2d6de92da5523bab394466924c3a6d19e9d17c59821bd2eaddf6d71a598e9c",

"Volumes": null,

"WorkingDir": "/usr/local/tomcat",

"Entrypoint": null,

"OnBuild": null,

"Labels": null

},

"Architecture": "amd64",

"Os": "linux",

"Size": 679640663,

"VirtualSize": 679640663,

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/d1f36a756d4228f9c951097584307d486f828a30cc089f8a64c2a2850309a03d/diff:/var/lib/docker/overlay2/a301629e7de6136f0ad3fe1655b882abc5f3a5c53700b7b656b690932e65a9a0/diff:/var/lib/docker/overlay2/2889642e115836f88c39dfa5cb84ad90de6ddf00487e175a33d78aa444933c27/diff:/var/lib/docker/overlay2/61c04221b641b8e5b41c8a36ff97faaeed6338cb913e78cc3decd97a1c7a5792/diff:/var/lib/docker/overlay2/926cff1497c69b6873ddf39466d05224fe7c8762c80bcfe5cbdcc18c21a1828a/diff:/var/lib/docker/overlay2/8d45a792fa5bef66d1b6eb66da2e2b501f55ae23fec54a36e4ddd5c563c50a2b/diff:/var/lib/docker/overlay2/d980c38ff948f081fdb50c9d707910f878f119bd4dcdeba2a002ed5c2dbec12b/diff:/var/lib/docker/overlay2/b520521e035077afc463e52efc302b7efd1cc7d8040839601420ceefd855e4f8/diff:/var/lib/docker/overlay2/e0a0aa574cfeed38fc82021a18989dc70214c6529f6ecba6892858fe8347ca99/diff",

"MergedDir": "/var/lib/docker/overlay2/6a73f4262645e3c757ef637eb2a07b0056a1fbe20526b924614f36d9dde89d7a/merged",

"UpperDir": "/var/lib/docker/overlay2/6a73f4262645e3c757ef637eb2a07b0056a1fbe20526b924614f36d9dde89d7a/diff",

"WorkDir": "/var/lib/docker/overlay2/6a73f4262645e3c757ef637eb2a07b0056a1fbe20526b924614f36d9dde89d7a/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:62a747bf1719d2d37fff5670ed40de6900a95743172de1b4434cb019b56f30b4",

"sha256:0b3c02b5d746e8cc8d537922b7c2abc17b22da7de268d068f2a4ebd55ac4f6d7",

"sha256:9f9f651e9303146e4dd98aca7da21fd8e21dd1a47d71da3d6d187da7691a6dc9",

"sha256:ba6e5ff31f235bbfd34aae202da4e6d4dc759f266f284d79018cae755f36f9e3",

"sha256:36e0782f115904773d06f7d03af94a1ec9ca9ad42736ec55baae8823c457ba69",

"sha256:62a5b8741e8334844625c513016da47cf2b61afb1145f6317edacb4c13ab010e",

"sha256:78700b6b35d0ab6e70befff1d26c5350222a8fea49cc874916bce950eeae35a1",

"sha256:cb80689c9aefc3f455b35b0110fa04a7c13e21a25f342ee2bb27c28f618a0eb5",

"sha256:b03f233a9851d13f8d971d6a9b54b8b988db8b906525b2587457933be9f9e1d0",

"sha256:28d7132ca18d4f39e318d59e559d1c4f874efef1f7a66bca231a4438fc1ccb20"

]

},

"Metadata": {

"LastTagTime": "0001-01-01T00:00:00Z"

}

}

]
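The annotation on the "Id" field says that IMAGE ID is just the first 12 characters of the full ID. A small sketch of that relationship (short_image_id is a made-up helper name, not a Docker command):

```shell
#!/bin/sh
# Derive the short image ID from a full "Id" value: drop the "sha256:" prefix
# and keep the first 12 characters.
short_image_id() {
    full=${1#sha256:}
    printf '%.12s\n' "$full"
}

short_image_id "sha256:5db6fed793e95ca20134faf60b8476c8fb1d5091e2f7e6aff254ebba09868528"
```

The result is the value that docker images would list in its IMAGE ID column for this image.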

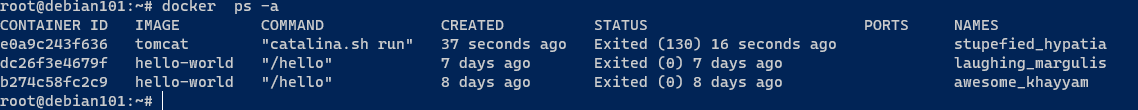

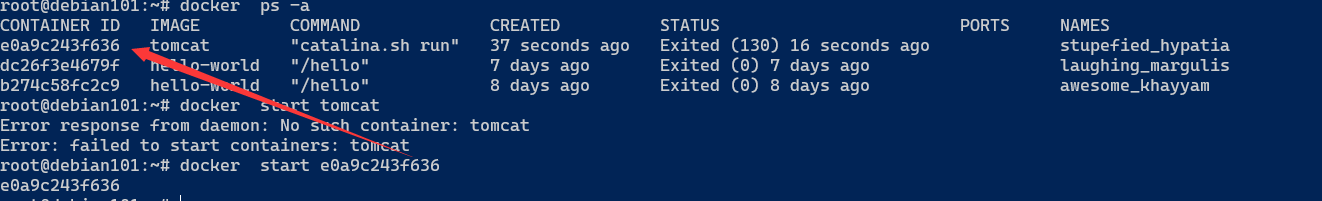

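3.5 Create and run a container

docker run tomcat

Run this way, the application's startup log is printed straight to the console. Pressing Ctrl+C to get out also stops the container

List all containers, including those not currently running (docker ps -a)

Containers that are running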

root@debian101:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e0a9c243f636 tomcat "catalina.sh run" 3 minutes ago Up 59 seconds 8080/tcp stupefied_hypatia

-- stop the container first

root@debian101:~# docker stop e0a9c243f636

e0a9c243f636

-- then remove it

root@debian101:~# docker rm e0a9c243f636

e0a9c243f636

Port mapping

-p

-p host_port:container_port

-d run in the background (detached)

docker run --name tomcat1 -p 8080:8080 -d tomcat

docker run --name tomcat2 -p 8081:8080 -d tomcat

docker run --name tomcat3 -p 8082:8080 -d tomcat
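The three commands above differ only in the container name and the host port. As a sketch, the pattern can be generated in a loop; tomcat_run_cmds is a hypothetical helper that only prints the commands and does not run them.

```shell
#!/bin/sh
# Print (not execute) the docker run commands for N Tomcat containers.
# Host ports start at base_port; every container listens on 8080 internally.
tomcat_run_cmds() {
    count=$1 base_port=$2
    i=1
    while [ "$i" -le "$count" ]; do
        host_port=$((base_port + i - 1))
        printf 'docker run --name tomcat%s -p %s:8080 -d tomcat\n' "$i" "$host_port"
        i=$((i + 1))
    done
}

tomcat_run_cmds 3 8080
```

Each host port may be bound only once, which is why the host side counts up while the container side stays at 8080.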

Enter the container and work inside it

docker exec -it tomcat1 /bin/bash

# Inside the container, create a ROOT directory under Tomcat's webapps directory

mkdir ROOT

# Enter ROOT

cd ROOT

# Write helloword into index.html; the file does not have to exist beforehand

echo "helloword" >> index.html

3.6 Copying files

docker cp copies from the host to a container and from a container to the host

docker cp /root/index.html tomcat1:/usr/local/tomcat/webapps/ROOT

docker cp tomcat1:/usr/local/tomcat/webapps/ROOT/index2.html /root/

3.7 Directory mapping (volumes): learn to use this flexibly!

-v

Map /root/www on debian101 to /usr/local/tomcat/webapps inside the container

docker run --name tomcat1 -p 8080:8080 -v /root/www:/usr/local/tomcat/webapps -d tomcat

docker rm -f tomcat1 && docker run --name tomcat1 -p 8080:8080 -v /root/www:/usr/local/tomcat/webapps -v /root/logs/tomcat1:/usr/local/tomcat/logs -d tomcat && docker logs -f tomcat1

docker rm -f tomcat2 && docker run --name tomcat2 -p 8081:8080 -v /root/www:/usr/local/tomcat/webapps -v /root/logs/tomcat2:/usr/local/tomcat/logs -d tomcat && docker logs -f tomcat2

3.8 Viewing logs

docker logs

View the logs that tomcat1 writes to standard output; the best practice with Docker is to send logs to standard output

docker logs -f tomcat1

3.9 Update the configuration: change the restart policy so that the container starts when the Docker service starts

docker update reference http://www.lwhweb.com/posts/26195/

docker update --restart always tomcat1

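The restart policies for Docker containers are:

no: the default; do not restart the container when it exits

on-failure: restart the container only when it exits abnormally (non-zero exit status)

on-failure:3: restart the container on abnormal exit, at most 3 times

always: always restart the container when it exits

unless-stopped: always restart the container when it exits, except for containers that were already stopped when the Docker daemon started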
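A typo in the policy name is easy to make, so it can be worth checking the value before calling docker update. This is only a sketch; both function names are hypothetical and nothing here talks to Docker.

```shell
#!/bin/sh
# Succeed if the argument is one of the restart policies listed above.
valid_restart_policy() {
    case $1 in
        no|always|unless-stopped|on-failure)
            return 0 ;;
        on-failure:*)
            retries=${1#on-failure:}
            case $retries in
                ''|*[!0-9]*) return 1 ;;   # retry count must be digits only
                *) return 0 ;;
            esac ;;
        *)
            return 1 ;;
    esac
}

# Print (not run) the update command, but only for a policy that passes the check.
restart_update_cmd() {
    policy=$1 container=$2
    valid_restart_policy "$policy" || { echo "unknown restart policy: $policy" >&2; return 1; }
    printf 'docker update --restart %s %s\n' "$policy" "$container"
}

restart_update_cmd always tomcat1
```

After a real update, docker inspect -f '{{.HostConfig.RestartPolicy.Name}}' tomcat1 reads the policy back.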

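4. Publishing a Spring Boot 2 application with Docker, and building images

-- pull the image

docker pull openjdk

-- inspect the image

docker inspect openjdk

Location of the jar

D:\java\myopen\springboot2\demo2021\target

4.1 Start the Spring Boot application using a mapped file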

# Instance 1: http://192.168.100.101:9090/hello

docker rm -f springboot1 &&\

docker run --name springboot1 -p 9090:8080 -v /root/www/demo2021-1120.jar:/usr/local/demo2021-1120.jar -d openjdk java -jar /usr/local/demo2021-1120.jar && docker logs -f springboot1

# Instance 2: http://192.168.100.101:9091/hello

docker rm -f springboot2 &&\

docker run --name springboot2 -p 9091:8080 -v /root/www/demo2021-1120.jar:/usr/local/demo2021-1120.jar -d openjdk java -jar /usr/local/demo2021-1120.jar && docker logs -f springboot2

# Remove both

docker rm -f springboot1 springboot2

4.2 Build a dedicated image to start the application

# Create the Dockerfile

touch /root/soft/springboot2021.conf

vi /root/soft/springboot2021.conf

The Dockerfile content is as follows

# Build from /root/soft/springboot2021.conf with /root/www as the build context

# docker build -t springboot2021 -f /root/soft/springboot2021.conf /root/www

# Docker image for springboot

# VERSION 20211120

# Author: xue

# Use openjdk as the base image

FROM openjdk

# Author

MAINTAINER xue <xuejianxinokok@163.com>

# Add the jar to the image and rename it to app.jar

ADD demo2021-1120.jar app.jar

# Run the jar

ENTRYPOINT ["java","-jar","/app.jar"]

Run the image to test the container

docker rm -f springboot1 &&\

docker run --name springboot1 -p 9090:8080 -d springboot2021 && docker logs -f springboot1

docker exec -it springboot1 /bin/bash

4.3 Saving an image to a file

docker save -o /root/soft/openjdk17.tar openjdk

docker rmi openjdk

docker load --input /root/soft/openjdk17.tar

docker images

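4.4 Exporting a container as an image

Typically used to build images interactively and experimentally when you do not know the environment well

Sometimes you want to rewrite an image's history to reduce its size. That is what docker export is for: it writes the entire flattened contents of the container's union file system to standard output or to a tar archive.

docker export

# Start the container first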

docker rm -f myopenjdk && docker run --name myopenjdk -d openjdk tail -f /dev/null && docker exec -it myopenjdk /bin/bash

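# Copy the files you need into the container

docker cp /root/www/demo2021-1120.jar myopenjdk:/app.jar

# Export the container to a tar file

docker export -o /root/soft/1.tar myopenjdk

# Import the tar file as an image

docker import /root/soft/1.tar

# Find the ID of the imported image

docker images -a

# Name the new image with docker tag

docker tag <your-image-id> myopenjdk:v1

# Run a container from the new image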

docker rm -f springboot1 &&\

docker run --name springboot1 -p 9090:8080 -d myopenjdk:v1 java -jar /app.jar && docker logs -f springboot1

5. Configuring Kafka with Docker, and real-world production use cases

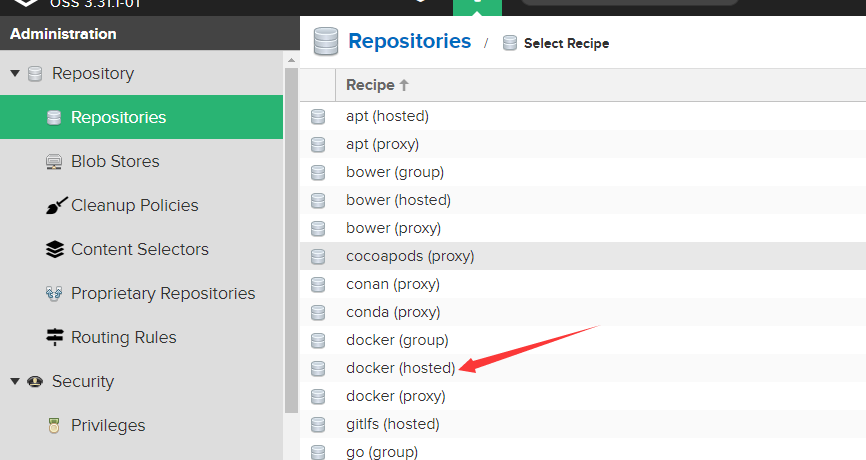

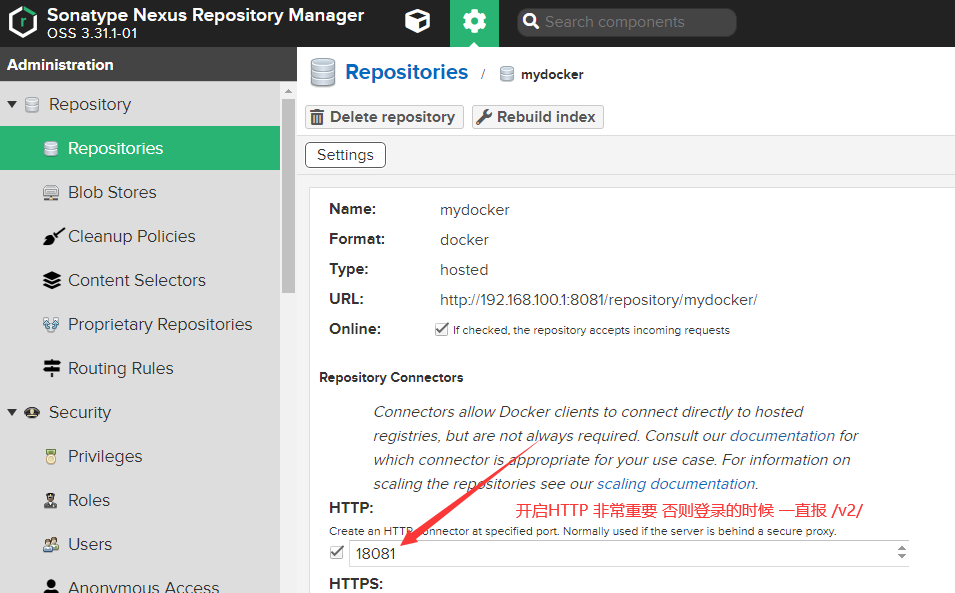

5.1 Set up a private Docker registry

Download Nexus [ˈneksəs] (a connection; a complex series of links)

https://www.sonatype.com/products/repository-oss-download (the download usually fails)

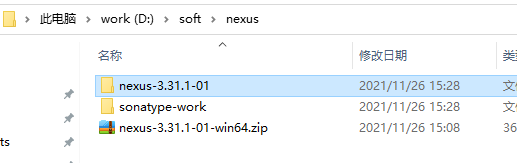

nexus-3.31.1-01: the files of the Nexus server itself

sonatype-work: Nexus working data; uploaded and downloaded jars are stored under this folder

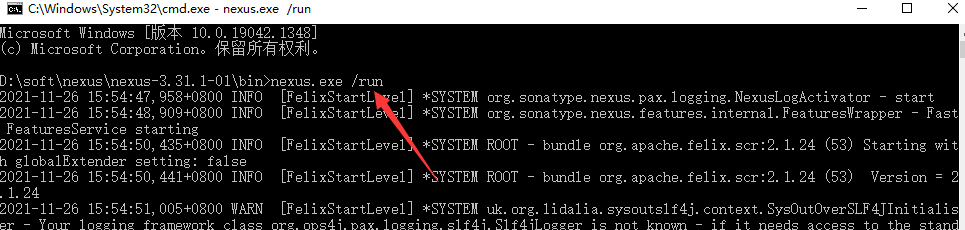

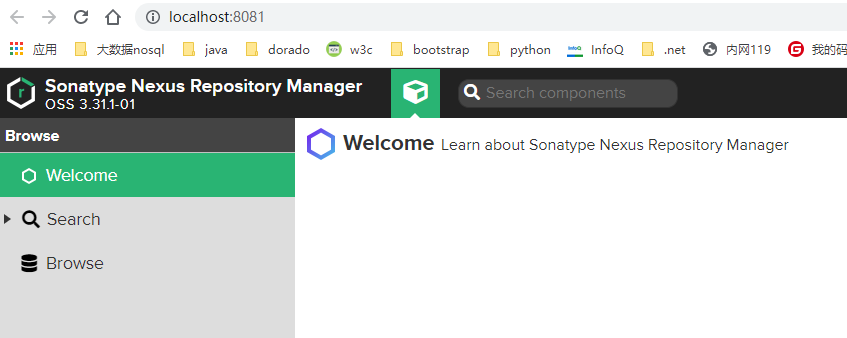

In the nexus-3.31.1-01\bin folder, run: nexus /run, then open http://localhost:8081

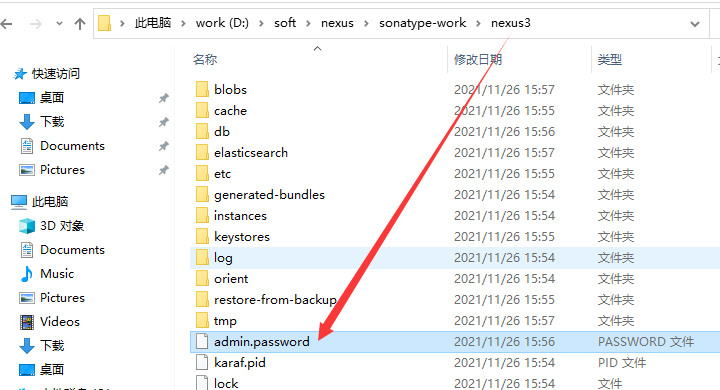

Log in as the default user admin; the password is in the file shown in the figure below

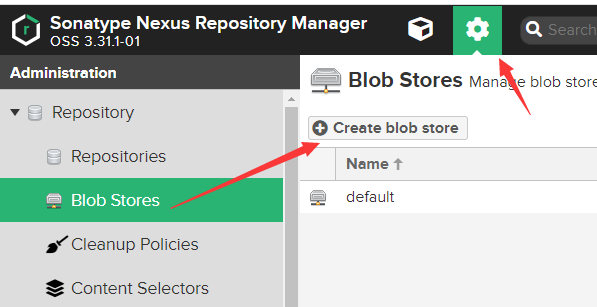

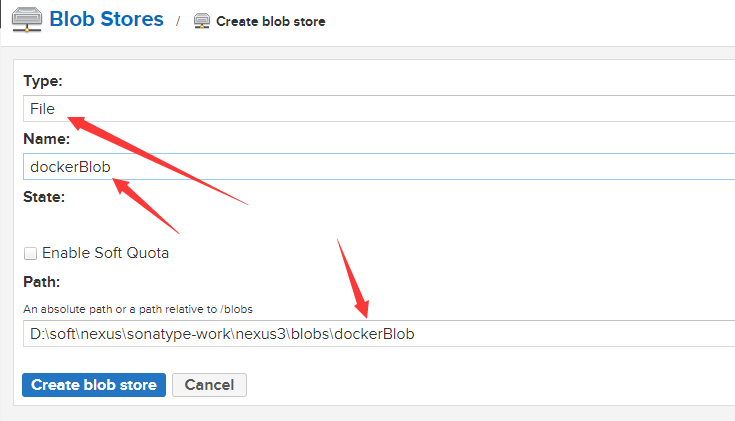

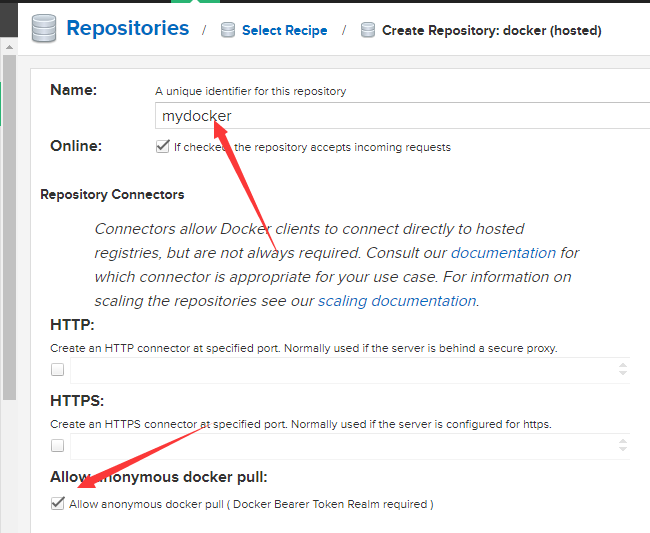

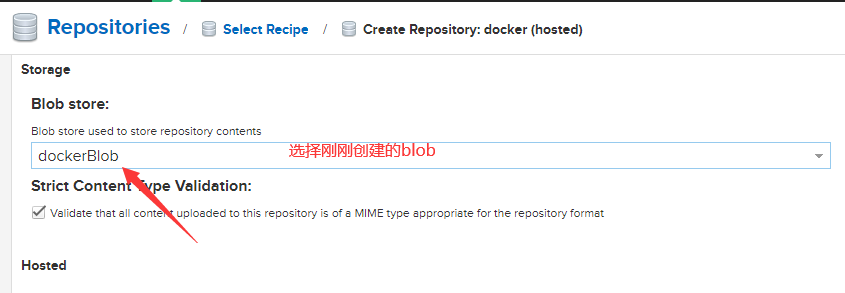

Create a blob store to hold the Docker images

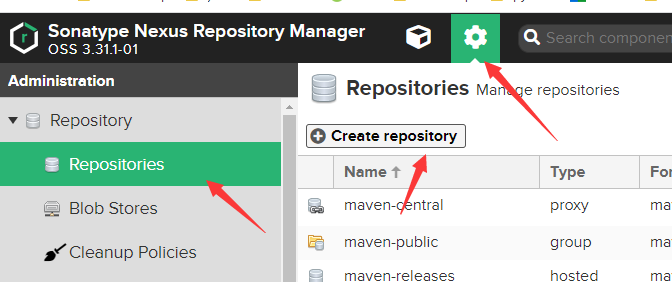

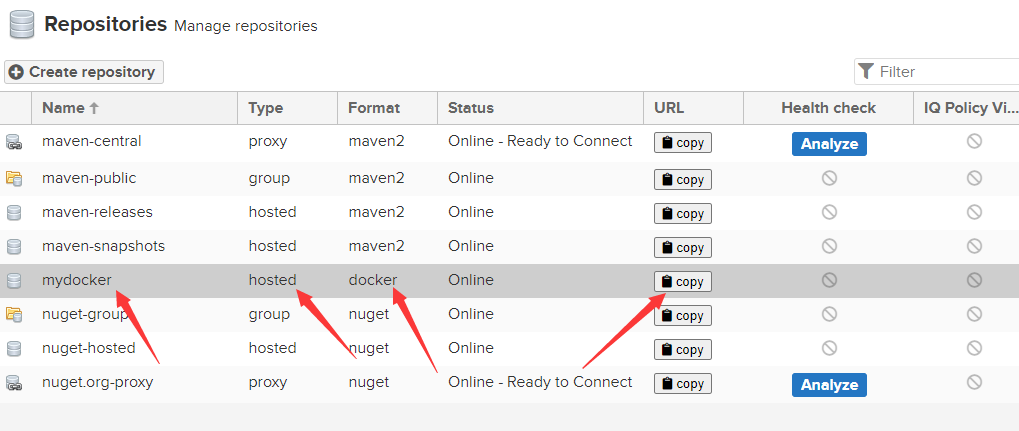

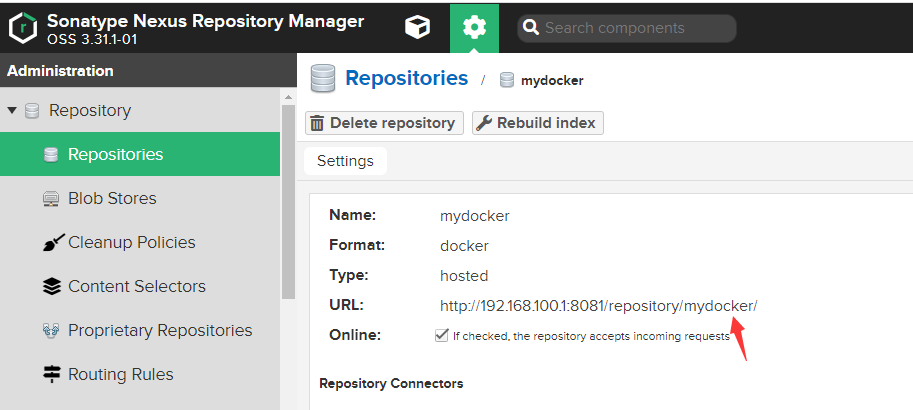

Create the private Docker repository

To keep things simple, allow anonymous pulls

Create a repository of type docker (hosted)

Note that the repository URL uses port 18081, not 8081

http://192.168.100.1:18081/repository/mydocker/

The private registry is now set up. Test it by pushing an image to it

Add a Docker configuration file

vi /etc/docker/daemon.json

with the following content

{

"insecure-registries": ["192.168.100.1:18081"]

,"exec-opts": ["native.cgroupdriver=systemd"]

}

# Restart the Docker service

service docker restart

# Log in to the private registry

docker login -u admin 192.168.100.1:18081

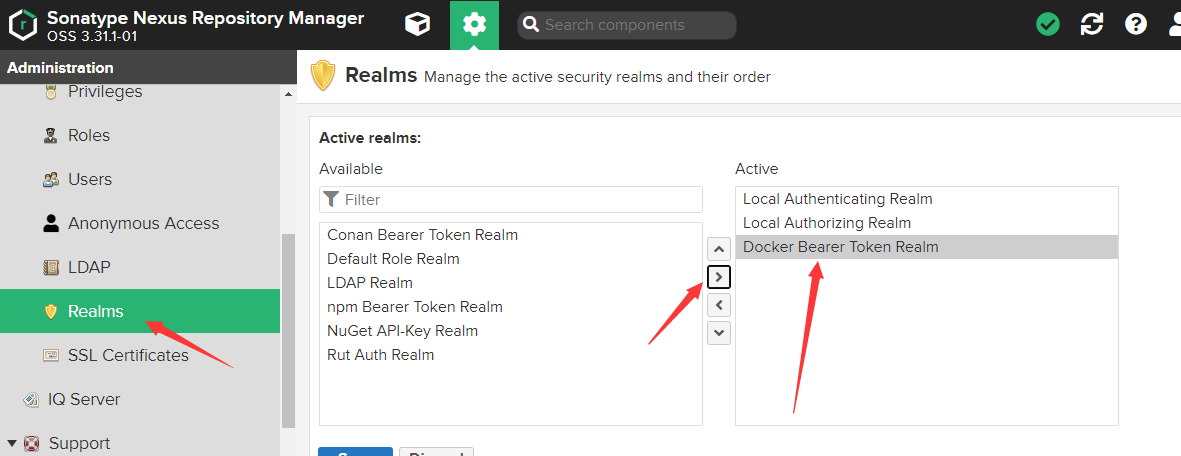

If the following error appears after entering the password, add the missing entries under Realms as shown in the figure below

Password:

Error response from daemon: login attempt to http://192.168.100.1:18081/v2/ failed with status: 401 Unauthorized

# Successful login

root@debian101:~# docker login -u admin 192.168.100.1:18081

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

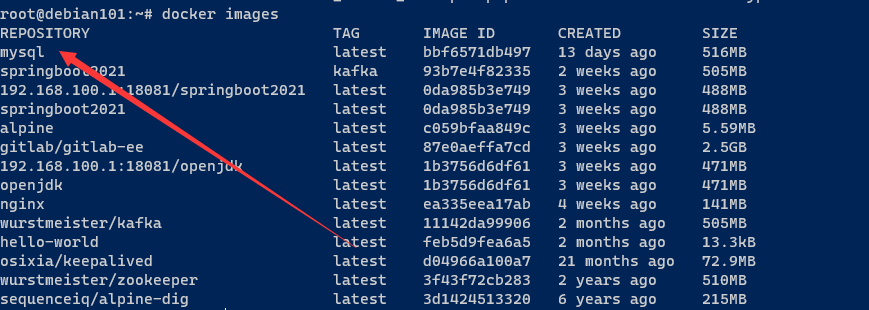

# List the existing images

root@debian101:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

openjdk latest 1b3756d6df61 9 days ago 471MB

# Tag the image in preparation for pushing

root@debian101:~# docker tag openjdk 192.168.100.1:18081/openjdk

# List the images after tagging

root@debian101:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.100.1:18081/openjdk latest 1b3756d6df61 9 days ago 471MB

openjdk latest 1b3756d6df61 9 days ago 471MB

# Push

root@debian101:~# docker push 192.168.100.1:18081/openjdk

Using default tag: latest

The push refers to repository [192.168.100.1:18081/openjdk]

e566764ec223: Pushing [==> ] 14.99MB/320.6MB

3edc5fd20034: Pushing [===================> ] 15.58MB/39.67MB

32ac9dd9610b: Pushing [===> ] 8.116MB/110.5MB

# Push springboot2021

root@debian101:~# docker tag springboot2021 192.168.100.1:18081/springboot2021

root@debian101:~# docker push 192.168.100.1:18081/springboot2021

Using default tag: latest

The push refers to repository [192.168.100.1:18081/springboot2021]

cea643473484: Pushed

e566764ec223: Layer already exists

3edc5fd20034: Layer already exists

32ac9dd9610b: Layer already exists

latest: digest: sha256:3b30b51136c1449a7ab41100a25327b5dd2e2920f27dbbbc6d28a9f07214fc5a size: 1166

docker tag nginx 192.168.100.1:18081/nginx

docker push 192.168.100.1:18081/nginx

docker tag wurstmeister/kafka 192.168.100.1:18081/wurstmeister/kafka

docker push 192.168.100.1:18081/wurstmeister/kafka

docker tag wurstmeister/zookeeper 192.168.100.1:18081/wurstmeister/zookeeper

docker push 192.168.100.1:18081/wurstmeister/zookeeper
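Every image goes through the same two steps: tag it with the registry prefix, then push that tag. A sketch that prints the command pairs for a list of images is shown below; push_plan is a hypothetical helper and only prints, it does not call Docker.

```shell
#!/bin/sh
# Print (not run) the tag and push commands that publish local images to a registry.
# First argument: registry host:port; remaining arguments: local image names.
push_plan() {
    registry=$1
    shift
    for image in "$@"; do
        printf 'docker tag %s %s/%s\n' "$image" "$registry" "$image"
        printf 'docker push %s/%s\n' "$registry" "$image"
    done
}

push_plan 192.168.100.1:18081 nginx wurstmeister/kafka wurstmeister/zookeeper
```

Once the printed plan looks right, it can be piped into sh to execute it.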

# Log in before testing the pull

root@debian101:/etc/docker# docker login -u admin 192.168.100.1:18081

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

# Test the pull

root@debian101:/etc/docker# docker pull 192.168.100.1:18081/openjdk

Using default tag: latest

latest: Pulling from openjdk

# Re-tag

root@debian101:/etc/docker# docker tag 192.168.100.1:18081/openjdk openjdk

# List the images

root@debian101:/etc/docker# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

openjdk latest 1b3756d6df61 9 days ago 471MB

192.168.100.1:18081/openjdk latest 1b3756d6df61 9 days ago 471MB

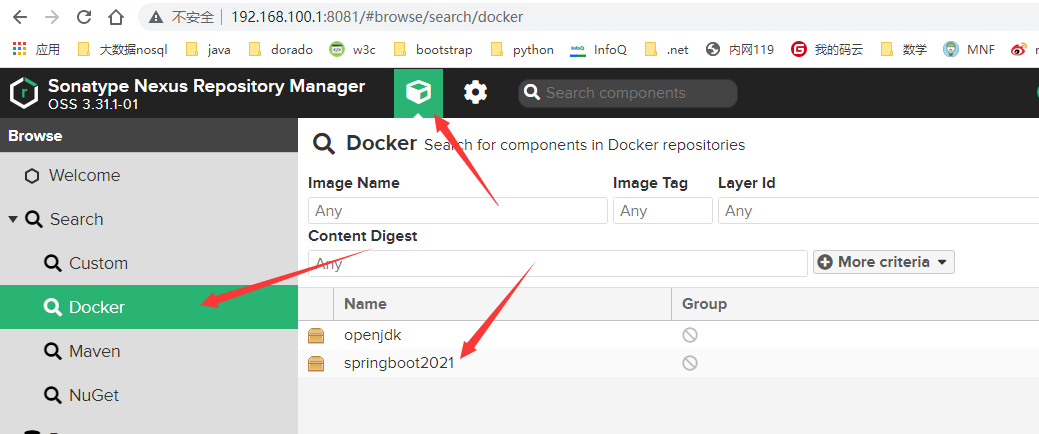

View the pushed images

5.2 Set up a private GitLab server (needs too many resources; not finished as of 2021-11-29)

- 4 cores is the recommended minimum number of cores and supports up to 500 users

- 4GB RAM is the required minimum memory size and supports up to 500 users

Installing GitLab Runner on the same machine is not recommended

We strongly advise against installing GitLab Runner on the same machine you plan to install GitLab on. Depending on how you decide to configure GitLab Runner and what tools you use to exercise your application in the CI environment, GitLab Runner can consume significant amount of available memory.

We recommend using a separate machine for each GitLab Runner, if you plan to use the CI features. The GitLab Runner server requirements depend on:

- The type of executor you configured on GitLab Runner.

- Resources required to run build jobs.

- Job concurrency settings.

https://docs.gitlab.com/ee/install/next_steps.html post-installation steps, including CI/CD and backups

gitlab cicd ->gitlab runner ->build ->push dock

# This step takes a long time to initialize

docker run -d \

--hostname gitlab \

--name gitlab \

-p 2443:443 -p 2080:80 -p 2022:22 \

-v /root/gitlab/config:/etc/gitlab \

-v /root/gitlab/logs:/var/log/gitlab \

-v /root/gitlab/data:/var/opt/gitlab \

gitlab/gitlab-ee:latest

# Show the initial password

docker exec -it gitlab grep 'Password:' /etc/gitlab/initial_root_password

# Install the runner

docker run -d --name gitlab-runner \

-v /root/gitlab/gitlab-runner/config:/etc/gitlab-runner \

-v /var/run/docker.sock:/var/run/docker.sock \

gitlab/gitlab-runner:latest

docker run --rm -it -v /root/gitlab/gitlab-runner/config:/etc/gitlab-runner gitlab/gitlab-runner register

5.3 Testing Kafka with docker-compose

# Create the Dockerfile

touch /root/soft/springboot2021kafka.conf

vi /root/soft/springboot2021kafka.conf

The Dockerfile content is as follows

# Build from /root/soft/springboot2021kafka.conf with /root/www as the build context

# docker build -t springboot2021:kafka -f /root/soft/springboot2021kafka.conf /root/www

# Docker image for springboot

# VERSION 20211120

# Author: xue

# Use openjdk as the base image

FROM openjdk

# Author

MAINTAINER xue <xuejianxinokok@163.com>

# Add the jar to the image and rename it to app.jar

ADD demo2021-1120-kafka.jar app.jar

# Run the jar

ENTRYPOINT ["java","-jar","/app.jar"]

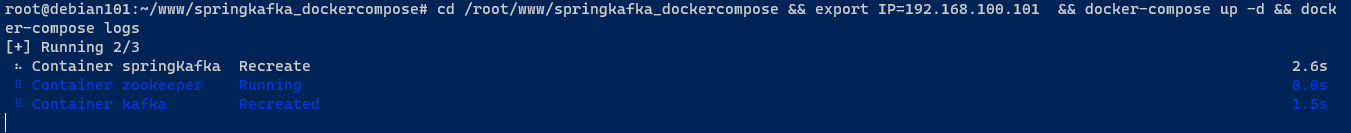

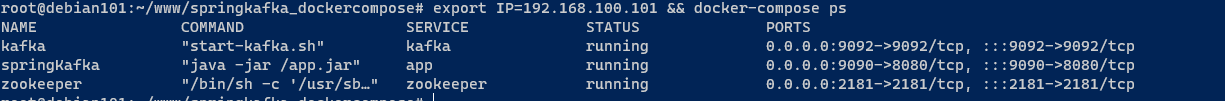

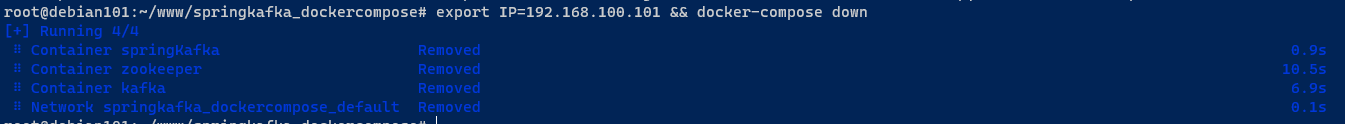

5.3.1 External access

cd /root/www/springkafka_dockercompose && export IP=192.168.100.101 && docker-compose up -d && docker-compose logs

# Stop

docker-compose down

# Remove

docker-compose rm -vf

#--------- Contents of docker-compose.yml
# Download from https://gitee.com/xuejianxinokok/springboot2/blob/master/demo2021/doc/v1/docker-compose.yml
# cd /root/www/springkafka_dockercompose && export IP=192.168.100.101 && docker-compose up -d && docker-compose logs -f
# Access at http://192.168.100.101:9090/kafka
# docker-compose down && docker-compose rm -vf
version: '3.2'
services:
  zookeeper:
    image: wurstmeister/zookeeper
    ports:
      - "2181:2181"
    container_name: "zookeeper"
    #restart: always
  kafka:
    image: wurstmeister/kafka
    ports:
      - "9092:9092"
    container_name: "kafka"
    environment:
      - TZ=CST-8
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      # Optional: create topics automatically
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_ADVERTISED_HOST_NAME=${IP}
      - KAFKA_ADVERTISED_PORT=9092
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://${IP}:9092
      - KAFKA_LISTENERS=PLAINTEXT://:9092
      # Optional: set the heap size
      #- KAFKA_HEAP_OPTS=-Xmx1G -Xms1G
      # Optional: keep data for 7 days, which is the default
      - KAFKA_LOG_RETENTION_HOURS=168
    volumes:
      # Map the Kafka data files out to the host
      - /root/kafka:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
  springKafka:
    image: springboot2021:kafka
    #build: springboot2021:kafka
    ports:
      - "9090:8080"
    container_name: "springKafka"
    environment:
      - BOOTSTRAP_SERVERS=${IP}:9092
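The compose file takes the advertised address from the IP environment variable. If IP is not exported, Compose substitutes an empty string, so Kafka would advertise an address without a host. A small pre-flight check can catch this; the following is a sketch with a made-up function name, and it validates only the dotted IPv4 form.

```shell
#!/bin/sh
# Succeed only if the argument looks like a dotted IPv4 address:
# exactly four fields, each made of digits and not larger than 255.
is_ipv4() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the argument on dots into positional parameters
    IFS=$old_ifs
    [ "$#" -eq 4 ] || return 1
    for part in "$@"; do
        case $part in
            ''|*[!0-9]*) return 1 ;;
        esac
        [ "$part" -le 255 ] || return 1
    done
    return 0
}

# Example: check the value before starting the stack.
IP=192.168.100.101
if is_ipv4 "$IP"; then
    echo "IP=$IP looks valid; safe to run docker-compose up -d"
else
    echo "IP is missing or malformed; export IP=<host address> first" >&2
fi
```

In real use the IP=... line would be replaced by the value already exported in the shell.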

Start

View

Stop

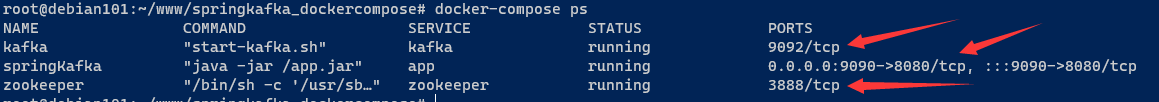

5.3.2 localhost connection mode

In this mode Kafka can only be reached from the springboot2021:kafka container; it cannot be accessed from outside

docker-compose down && docker-compose up -d && docker-compose logs -f

#--------- Contents of docker-compose.yml
# Download from https://gitee.com/xuejianxinokok/springboot2/blob/master/demo2021/doc/v2/docker-compose.yml
# localhost mode
version: '3.2'
services:
  zookeeper:
    image: wurstmeister/zookeeper
    expose:
      - 2181
    #ports:
    #  - "2181:2181"
    container_name: "zookeeper"
    #restart: always
  kafka:
    image: wurstmeister/kafka
    expose:
      - 9092
    links:
      - zookeeper
    #ports:
    #  - "9092:9092"
    container_name: "kafka"
    environment:
      - TZ=CST-8
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      # Optional: create topics automatically
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=true
      - KAFKA_ADVERTISED_HOST_NAME=kafka
      - KAFKA_ADVERTISED_PORT=9092
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092
      - KAFKA_LISTENERS=PLAINTEXT://:9092
      # Optional: set the heap size
      #- KAFKA_HEAP_OPTS=-Xmx1G -Xms1G
      # Optional: keep data for 7 days, which is the default
      - KAFKA_LOG_RETENTION_HOURS=168
    volumes:
      # Map the Kafka data files out to the host
      - /root/kafka:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
  springKafka:
    image: springboot2021:kafka
    #build: springboot2021:kafka
    ports:
      - "9090:8080"
    container_name: "springKafka"
    links:
      - kafka
    environment:
      - BOOTSTRAP_SERVERS=kafka:9092

Result: the Kafka port 9092 is not mapped to a host port

Environment variables inside the containers

root@debian101:~/www/springkafka_dockercompose# docker exec -it springKafka /bin/bash

bash-4.4# env

LANG=C.UTF-8

HOSTNAME=d05a345667bc

JAVA_HOME=/usr/java/openjdk-17

BOOTSTRAP_SERVERS=kafka:9092 # <<------ note this

JAVA_VERSION=17.0.1

PWD=/

HOME=/root

TERM=xterm

SHLVL=1

PATH=/usr/java/openjdk-17/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

_=/usr/bin/env

root@debian101:~/www/springkafka_dockercompose# docker exec -it kafka /bin/bash

bash-5.1# env

HOSTNAME=07b2aaa4defb

LANGUAGE=en_US:en

JAVA_HOME=/usr/lib/jvm/zulu8-ca

KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092 # <<------ note this

PWD=/

TZ=CST-8

KAFKA_ADVERTISED_HOST_NAME=kafka # <<------ note this

HOME=/root

LANG=en_US.UTF-8

KAFKA_HOME=/opt/kafka

TERM=xterm

KAFKA_LISTENERS=PLAINTEXT://:9092

KAFKA_LOG_RETENTION_HOURS=168

KAFKA_VERSION=2.7.1

KAFKA_ADVERTISED_PORT=9092

GLIBC_VERSION=2.31-r0

SHLVL=1

KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 # <<------ note this

KAFKA_AUTO_CREATE_TOPICS_ENABLE=true

LC_ALL=en_US.UTF-8

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/kafka/bin

SCALA_VERSION=2.13

_=/usr/bin/env

5.4 Active/standby pair with ipvs, keepalived for failover, nginx for load balancing

5.4.1 Active/standby pair

This experiment needs 2 virtual machines and 3 IPs

| hostname | IP | Role | Machine spec |
|---|---|---|---|
| debian101 | 192.168.100.101 | master node | – |
| debian102 | 192.168.100.102 | backup node | – |
| – | 192.168.100.103 | virtual IP | no machine needed |

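Keepalived is high-availability software based on the VRRP protocol. A Keepalived setup has one master server and one or more backup servers, all deployed with the same service configuration, and exposes the service through a single virtual IP address. When the master fails, the virtual IP address automatically moves to a backup server.

# Pull the images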

docker pull osixia/keepalived

docker pull nginx

# Push to the registry

docker login -u admin 192.168.100.1:18081

docker tag osixia/keepalived 192.168.100.1:18081/osixia/keepalived

docker push 192.168.100.1:18081/osixia/keepalived

docker rmi 192.168.100.1:18081/osixia/keepalived

# Pull the image

docker login -u admin 192.168.100.1:18081

docker pull 192.168.100.1:18081/osixia/keepalived

docker tag 192.168.100.1:18081/osixia/keepalived osixia/keepalived

docker rmi 192.168.100.1:18081/osixia/keepalived

# Create the keepalived configuration

mkdir -p /root/keepalived

vi /root/keepalived/keepalived.conf

keepalived configuration for master node 101

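#master 1
#vi /root/keepalived/keepalived.conf
! Configuration File for keepalived
# Global settings
global_defs {
    # Email notification settings
    #notification_email {
    #   root@localhost
    #}
    #notification_email_from admin@lnmmp.com
    #smtp_connect_timeout 3
    #smtp_server 127.0.0.1
    # Note: the id must be unique per node
    router_id LVS_1
}
# checkhaproxy is a user-chosen script name; it could just as well be checkNginx
vrrp_script checkhaproxy
{
    # Health-check script; note that this is the path inside the container
    script "/usr/bin/check-haproxy.sh"
    # Check the state of nginx every 2 seconds
    interval 2
    # While the check fails, lower this node's priority by 5
    weight -5
    # require 2 failures for KO
    fall 2
    # require 2 successes for OK
    rise 2
}
vrrp_instance VI_1 {
    # This node is the MASTER
    state MASTER
    # Network interface the instance binds to: enp0s3
    interface enp0s3
    # virtual_router_id must be identical within one instance
    virtual_router_id 51
    # The MASTER priority must be higher than the BACKUP one, e.g. BACKUP uses 99
    priority 100
    # Interval between VRRP advertisements from MASTER to BACKUP, in seconds
    advert_int 1
    # Virtual IP; must be identical on both nodes; several may be listed
    virtual_ipaddress {
        # The virtual IP is .103, on interface enp0s3
        192.168.100.103/24 dev enp0s3
    }
    # Authentication settings
    authentication {
        # Authentication method between master and backup
        auth_type PASS
        auth_pass password
    }
    # Tracked script, see vrrp_script checkhaproxy
    track_script {
        checkhaproxy
    }
}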
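The priority and weight values above decide which node owns the virtual IP. The arithmetic can be sketched as follows, assuming the health check reports failure through a non-zero exit status so that the negative weight is applied. The function names are made up for illustration, and a real VRRP tie is not broken the way this toy comparison does it. Note also that the check script in these notes kills keepalived outright when nginx is down, in which case failover happens because advertisements stop rather than through the weight.

```shell
#!/bin/sh
# Effective VRRP priority of a node: the base priority, plus the (negative)
# script weight while the tracked health check is failing (check_failed = 1).
effective_priority() {
    base=$1 weight=$2 check_failed=$3
    if [ "$check_failed" -eq 1 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# Which node holds the virtual IP: the one with the higher effective priority.
# Arguments: check_failed flag for debian101, then for debian102.
elect() {
    master=$(effective_priority 100 -5 "$1")   # debian101, priority 100
    backup=$(effective_priority 99 -5 "$2")    # debian102, priority 99
    if [ "$master" -ge "$backup" ]; then
        echo debian101
    else
        echo debian102
    fi
}

elect 0 0   # both healthy: 100 vs 99
elect 1 0   # check failing on debian101: 95 vs 99
```

This is why the BACKUP priority (99) has to sit between the MASTER's normal priority (100) and its reduced priority (95).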

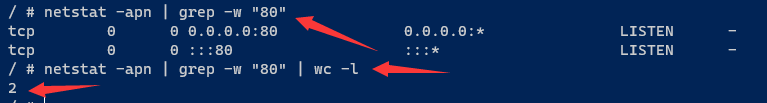

The health-check script is the same on 101 and 102

mkdir -p /root/keepalived

vi /root/keepalived/check-haproxy.sh

#!/bin/bash
#vi /root/keepalived/check-haproxy.sh
# Find and count entries for port 80, i.e. the nginx port
count=`netstat -apn | grep -w "80" | wc -l`
# The next line does not work, because the nginx processes are not visible inside the container
#count=`ps aux|grep nginx|egrep '(master|worker)' |wc -l`
if [ $count -gt 0 ]; then
    echo 'ok...'
    # Normal exit
    exit 0
else
    # Port 80 is gone, so keepalived gives up too...
    echo 'kill keepalived...'
    # Kill the keepalived processes
    ps -ef | grep keepalived | grep -v grep | awk '{print $1}' | xargs kill -9
fi
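The check above counts any netstat line that contains the word 80, which can also match a remote port or a PID. A stricter variant looks only at sockets in LISTEN state on the local port. The sketch below assumes the usual `netstat -tln` column layout (local address in field 4, state in field 6); port_listening is a hypothetical helper, not part of the author's setup.

```shell
#!/bin/sh
# Read `netstat -tln`-style lines on stdin; succeed if some socket is in LISTEN
# state on the given local port. Field 4 is the local address, field 6 the state.
port_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Demonstration on canned output instead of a live netstat call.
if port_listening 80 <<'EOF'
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:8080          0.0.0.0:*               LISTEN
EOF
then
    echo 'port 80 is listening'
fi
```

In the health check it would be used as netstat -tln | port_listening 80, provided the netstat in the container supports those flags.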

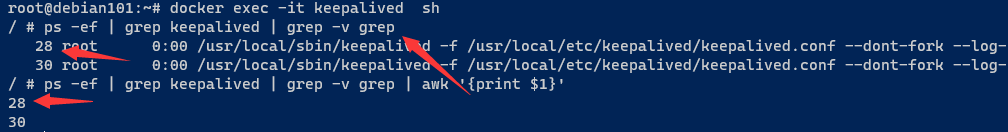

Enter the keepalived container to verify that the script works

docker exec -it keepalived sh

Add execute permission; this must be done on both 101 and 102

chmod u+x /root/keepalived/check-haproxy.sh

Docker commands

docker rm -f keepalived && \

docker run -d --name keepalived \

--net=host \

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW \

-v /root/keepalived/keepalived.conf:/container/service/keepalived/assets/keepalived.conf \

-v /root/keepalived/check-haproxy.sh:/usr/bin/check-haproxy.sh \

osixia/keepalived --copy-service && \

docker logs -f keepalived

docker rm -f nginx && \

docker run --name nginx -p 80:80 -d nginx

keepalived configuration for backup node 102

# backup1
#vi /root/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    # Note: the id must be unique per node
    router_id LVS_2
}
vrrp_script checkhaproxy
{
    # Health-check script; note that this is the path inside the container
    script "/usr/bin/check-haproxy.sh"
    interval 2
    weight -5
}
vrrp_instance VI_1 {
    # This node is the BACKUP
    state BACKUP
    # Network interface the instance binds to: enp0s3
    interface enp0s3
    virtual_router_id 51
    priority 99
    advert_int 1
    virtual_ipaddress {
        # The virtual IP is .103, on interface enp0s3
        192.168.100.103/24 dev enp0s3
    }
    authentication {
        auth_type PASS
        auth_pass password
    }
    track_script {
        checkhaproxy
    }
}

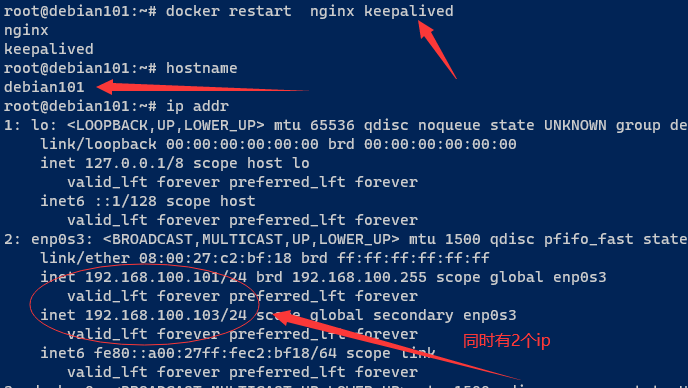

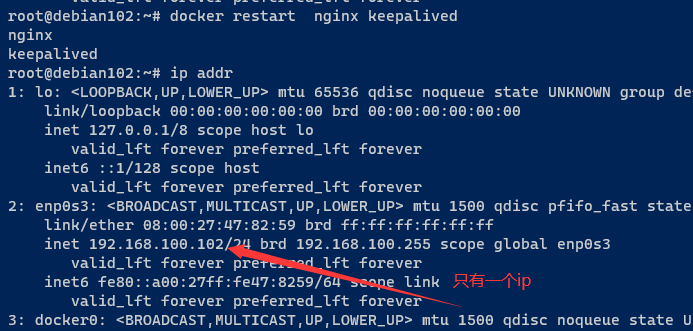

docker restart nginx keepalived

Test whether the network configuration has taken effect

docker restart nginx keepalived

Verify that the IP can fail over

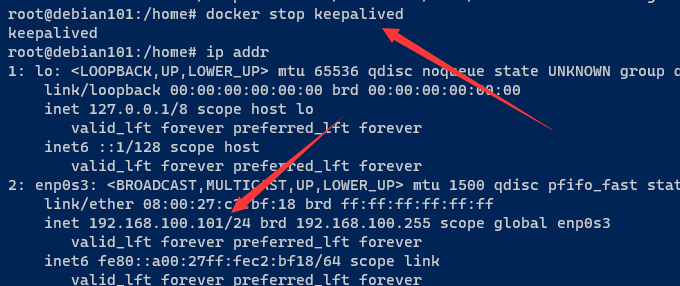

First docker ps shows keepalived running; then stop nginx, wait 3 seconds and run docker ps again: keepalived has stopped as well

docker ps && docker stop nginx && sleep 3 && docker ps

After running docker stop nginx keepalived on debian101, the virtual IP has moved to debian102

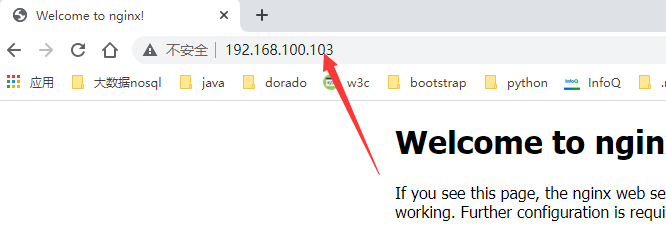

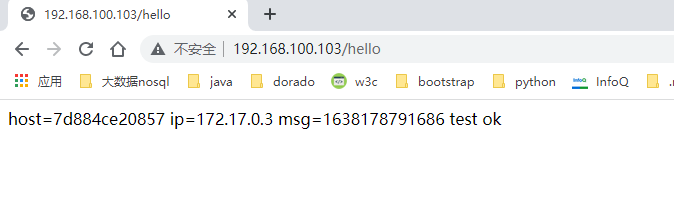

And http://192.168.100.103/ stays reachable throughout

5.4.2 nginx load balancing

The nginx configuration file; it must be created on both 101 and 102

mkdir -p /root/nginx

vi /root/nginx/default.conf

# Upstream server list

upstream springboot {

server 192.168.100.101:9090;

server 192.168.100.102:9090;

}

server {

listen 80;

listen [::]:80;

server_name localhost;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

location /hello {

# Forward to the upstream servers

proxy_pass http://springboot;

}

}

docker login -u admin 192.168.100.1:18081

docker pull 192.168.100.1:18081/springboot2021

docker tag 192.168.100.1:18081/springboot2021 springboot2021

docker rm -f springboot1 &&\

docker run --name springboot1 -p 9090:8080 -d springboot2021 && docker logs -f springboot1

# Recreate the nginx container

docker rm -f nginx && \

docker run --name nginx -p 80:80 -v /root/nginx/default.conf:/etc/nginx/conf.d/default.conf -d nginx

Open http://192.168.100.103/hello and refresh a few times: the host in the response changes on every refresh
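The alternating host is what round robin over two equally weighted upstream servers looks like; round robin is nginx's default balancing method when the upstream block names no other. A toy model of the selection follows; pick_upstream is made up for illustration and is not how nginx is implemented.

```shell
#!/bin/sh
# Plain round robin over two equally weighted upstream servers:
# odd-numbered requests go to the first server, even-numbered to the second.
pick_upstream() {
    request_no=$1
    if [ $((request_no % 2)) -eq 1 ]; then
        echo 192.168.100.101:9090
    else
        echo 192.168.100.102:9090
    fi
}

for n in 1 2 3 4; do
    printf 'request %s -> %s\n' "$n" "$(pick_upstream "$n")"
done
```

With both Spring Boot containers up, successive refreshes should therefore alternate between the two backends.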

5.5 Other topics

Production case studies

Network file systems: NFS, Windows

– rancher k8s

5.6 References

Notes on configuring Kafka with Docker https://www.cnblogs.com/xuxiaobai13/p/12533056.html

Deploying Kafka (wurstmeister/kafka) with docker-compose, and fixes for common problems https://www.jb51.cc/docker/1040856.html

GitLab CI/CD automated build and release in practice https://xie.infoq.cn/article/bc20bead3996eafa934eda51b

Downloading, installing, configuring and running Nexus 3

https://blog.csdn.net/mazhongjia/article/details/106857123

Configuring a private Docker registry with Nexus 3

https://www.cnblogs.com/sanduzxcvbnm/p/13099635.html

Keepalived: introduction, configuration notes, practical and advanced usage

https://blog.51cto.com/lgdong/1869443

6. Configuring MySQL 8 with docker

Source-replica replication

Pull the mysql image (docker pull mysql) and inspect it:

root@debian101:~# docker inspect mysql

[

{

"Id": "sha256:bbf6571db4977fe13c3f4e6289c1409fc6f98c2899eabad39bfe07cad8f64f67",

"RepoTags": [

"mysql:latest"

],

"RepoDigests": [

"mysql@sha256:ff9a288d1ecf4397967989b5d1ec269f7d9042a46fc8bc2c3ae35458c1a26727"

],

"Parent": "",

"Comment": "",

"Created": "2021-12-02T11:23:31.593924783Z",

"Container": "2f4919fa51ac232e1fd970b67ebabc178bb2f58aed7632dc3c87c1fe4f0595aa",

"ContainerConfig": {

"Hostname": "2f4919fa51ac",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"3306/tcp": {},

"33060/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"GOSU_VERSION=1.12",

"MYSQL_MAJOR=8.0",

"MYSQL_VERSION=8.0.27-1debian10"

],

"Cmd": [

"/bin/sh",

"-c",

"#(nop) ",

"CMD ["mysqld"]"

],

"Image": "sha256:388bcf3faea12991d0fc5fe3d05a20624b0eef643b637bb37b04966e33535a14",

"Volumes": {

"/var/lib/mysql": {}

},

"WorkingDir": "",

"Entrypoint": [

"docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": {}

},

"DockerVersion": "20.10.7",

"Author": "",

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"3306/tcp": {},

"33060/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"GOSU_VERSION=1.12",

"MYSQL_MAJOR=8.0",

"MYSQL_VERSION=8.0.27-1debian10"

],

"Cmd": [

"mysqld"

],

"Image": "sha256:388bcf3faea12991d0fc5fe3d05a20624b0eef643b637bb37b04966e33535a14",

"Volumes": {

"/var/lib/mysql": {}

},

"WorkingDir": "",

"Entrypoint": [

"docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": null

},

"Architecture": "amd64",

"Os": "linux",

"Size": 515592874,

"VirtualSize": 515592874,

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/a5e70db93bd490dba61479e23fb5755394e6c9e118d6cb4f559e7f2c94ef9cb2/diff:/var/lib/docker/overlay2/01f89d7abf97dbcaa74528ae3dc29b4caa25bc02a470dc1995627af4f74af410/diff:/var/lib/docker/overlay2/74a8386cd668c56c243657489061b030464164bcb500f00e558b52b4550c2b8e/diff:/var/lib/docker/overlay2/87b9fa8d3e372a708cb45a6bdb0caa62e19ddd14f4da26e96ec6a72e6f9442db/diff:/var/lib/docker/overlay2/89ef374c44cb3cee2c92eb5b8d6ea64f56553724265b60443a52966cbf052f07/diff:/var/lib/docker/overlay2/57f48a8fc3f8fa0908e8666742f514d16e3da9b4f54ca0cb516d7f4e3e0438f4/diff:/var/lib/docker/overlay2/5d402cf82faa267dbde0ea4d399a689826925c50fb6af73c568524699d034cc1/diff:/var/lib/docker/overlay2/2255911fb6874c590237d8e83939f7027871beb21556af5994e10c65835fe6df/diff:/var/lib/docker/overlay2/a7545e207cc3e597be86413728f6ba6295b3ea7626240b35b7155eaff357d7b8/diff:/var/lib/docker/overlay2/0cacfabd03da470f7989a1cfd5669baac00472e214903edf753efc03831bc1b8/diff:/var/lib/docker/overlay2/ab0ea16f4a34cc8478ff676337ece8f47628c4a5b2d14d40467d46dab4d086a3/diff",

"MergedDir": "/var/lib/docker/overlay2/baf3b58b1ac504818166dc8caf5cd51719b7ca714026586d3da84719f5fdff25/merged",

"UpperDir": "/var/lib/docker/overlay2/baf3b58b1ac504818166dc8caf5cd51719b7ca714026586d3da84719f5fdff25/diff",

"WorkDir": "/var/lib/docker/overlay2/baf3b58b1ac504818166dc8caf5cd51719b7ca714026586d3da84719f5fdff25/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:f11bbd657c82c45cc25b0533ce72f193880b630352cc763ed0c045c808ff9ae1",

"sha256:9203c40f710c1c4026417a1cffdcf6997d0c63d94d59c4fb0cda5a833fd12806",

"sha256:8697fe28090564bb7c7d3fcee27aac056d9039eb959b26b8abf1d15aa6ebbf67",

"sha256:d1539e01b8825393fd27a0cb28606d87a2326ad2080195e797e4fa20dc891bc5",

"sha256:525dc20b11ecb6526f3bcabc49b0e819515b3658b1b540ed2b13eb8f15223d0d",

"sha256:8114335ae8bdb6358b6b78dadd960e8881de4e385d57e99e11cec7cac1039606",

"sha256:1109ab22a05f3fa452db4cd4f3a2a23f30393b84dc97fd24ee38d8e095fac017",

"sha256:863a7946636bc09bc1b7fc2f27631d84702899fd9c81884bd747a53bd4446e04",

"sha256:d2b191d8394a35ca145010e72a41b683d497a5f15a1a1617dcc771dbc73e95e2",

"sha256:316f2712a8ff759913ee51d3e11669757fe3fa27ebf620df4369762f1ed0c027",

"sha256:7a3465b1d178aac45dc4aed83f6e83636965ef05b20adc6cf218bbc383114dd8",

"sha256:5386241009e1431bca51b9efe47c41a03fc76aabd1da3fee1ad80e4f6a6ab0aa"

]

},

"Metadata": {

"LastTagTime": "0001-01-01T00:00:00Z"

}

}

]

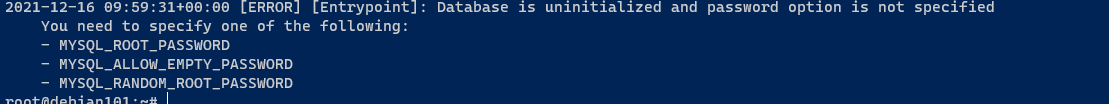

Test the mysql container

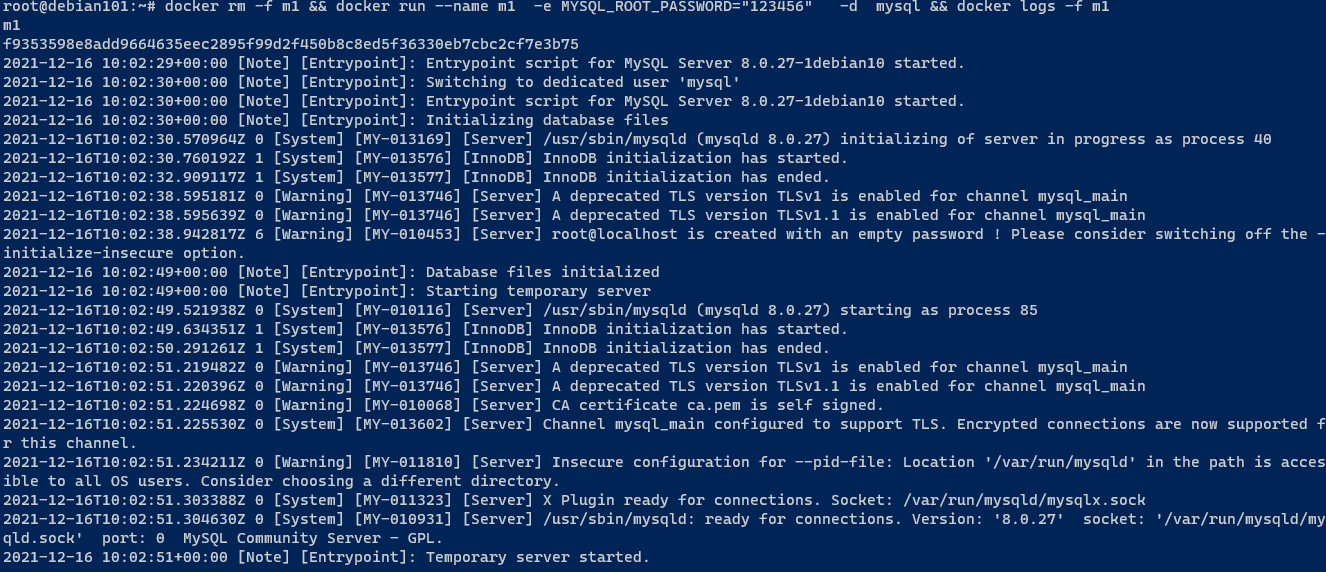

docker rm -f m1 && docker run --name m1 -e MYSQL_ROOT_PASSWORD="123456" -d mysql && docker logs -f m1

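The first attempt to start the container (without MYSQL_ROOT_PASSWORD) showed that a root password has to be set.

Proper start, with the password set: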

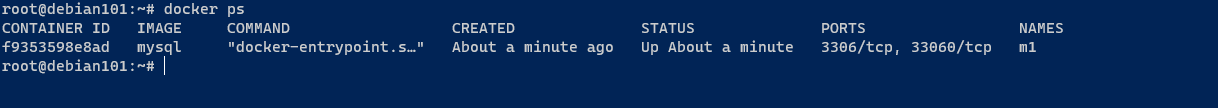

The container is now running normally.

Open a shell in the container:

docker exec -it m1 /bin/bash

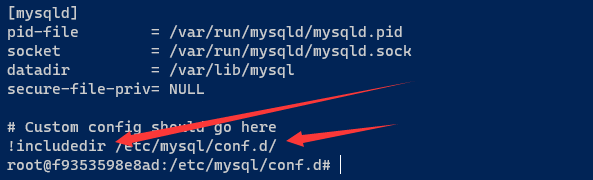

The default MySQL configuration file is /etc/mysql/my.cnf:

more /etc/mysql/my.cnf

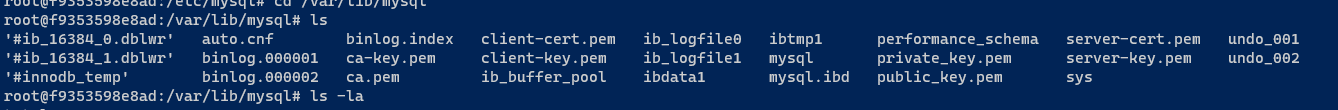

Additional configuration files are read from /etc/mysql/conf.d:

cd /etc/mysql/conf.d

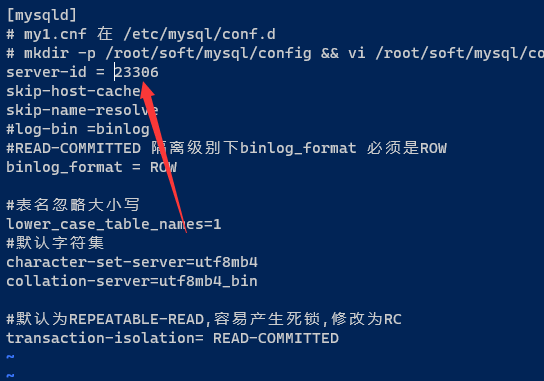

[mysqld]

# my1.cnf, placed in /etc/mysql/conf.d inside the container

# on the host: mkdir -p /root/soft/mysql1/config && vi /root/soft/mysql1/config/my1.cnf

server-id = 13306

skip-host-cache

skip-name-resolve

#log-bin =binlog

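# with READ-COMMITTED isolation, InnoDB only supports row-based binary logging

binlog_format = ROW

# case-insensitive table names (in MySQL 8.0 this must be set before the data directory is first initialized)

lower_case_table_names=1

# default character set

character-set-server=utf8mb4

collation-server=utf8mb4_bin

# the default is REPEATABLE-READ, which is more prone to deadlocks; switch to READ-COMMITTED

transaction-isolation= READ-COMMITTED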

docker rm -f m1 && docker run --name m1 -e MYSQL_ROOT_PASSWORD=“123456” -p 13306:3306 -v /root/soft/mysql1/config:/etc/mysql/conf.d -v /root/soft/mysql1/data:/var/lib/mysql -d mysql && docker logs -f m1

进入容器

docker exec -it m1 mysql -uroot -p123456

查看配置

SHOW VARIABLES LIKE ‘server_id’;

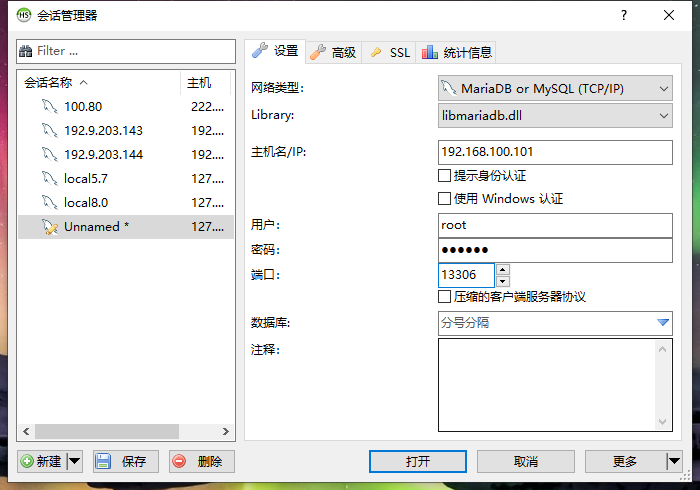

连接容器内mysql,注意端口是13306

停止m1

root@debian101:~/soft/mysql1/data# docker stop m1

m1

cd /root/soft

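Copy the whole directory for the second instance:

cp -r mysql1 mysql2

Change server-id in mysql2/config/my1.cnf to 23306.

Be sure to delete mysql2/data/auto.cnf. It stores the server UUID generated for m1, and replication cannot work while the replica reports the same UUID as its source.

rm -f /root/soft/mysql2/data/auto.cnf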
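Whether the copied data directory still carries the UUID of the first instance can be checked before m2 is started. The following sketch assumes the usual auto.cnf layout (an [auto] section with a single server-uuid= line); the function names server_uuid and uuid_conflict are hypothetical helpers, not MySQL tools.

```shell
#!/bin/sh
# Print the server-uuid value stored in a MySQL auto.cnf file.
server_uuid() {
    sed -n 's/^server-uuid=//p' "$1"
}

# Succeed (exit status 0) when both data directories carry the same UUID,
# which is the situation that breaks replication.
# $1, $2: data directories, e.g. /root/soft/mysql1/data /root/soft/mysql2/data
uuid_conflict() {
    a=$(server_uuid "$1/auto.cnf" 2>/dev/null)
    b=$(server_uuid "$2/auto.cnf" 2>/dev/null)
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example:
#   if uuid_conflict /root/soft/mysql1/data /root/soft/mysql2/data; then
#       echo "same server UUID: delete mysql2/data/auto.cnf before starting m2"
#   fi
```

Once auto.cnf has been removed, mysqld writes a fresh one with a new UUID on its next start, so the check no longer reports a conflict.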

docker rm -f m2 && docker run --name m2 -p 23306:3306 -v /root/soft/mysql2/config:/etc/mysql/conf.d -v /root/soft/mysql2/data:/var/lib/mysql -d mysql && docker logs -f m2

docker start m1

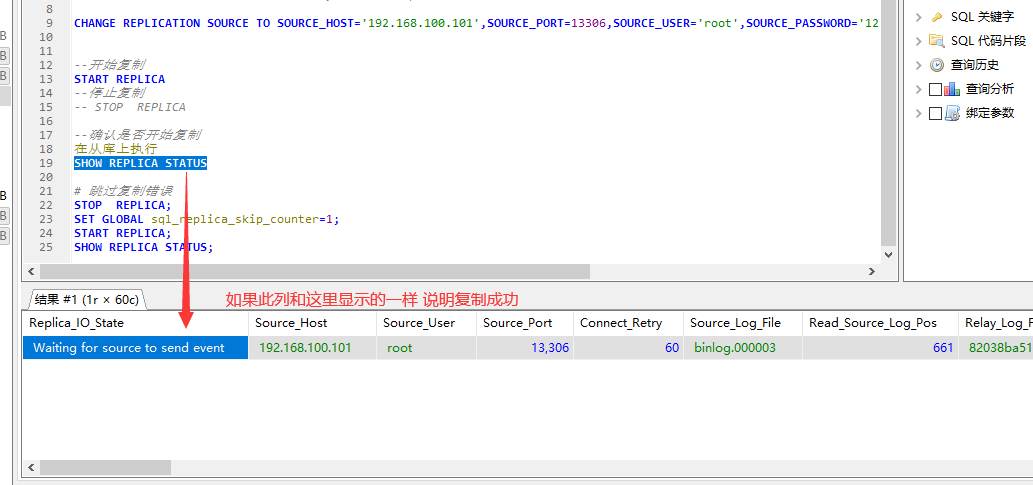

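-- Run on the source (port 13306)

SHOW MASTER STATUS;

-- Note the binlog coordinates, here binlog.000003 and 156

-- Run on the replica (port 23306)

-- Stop replication first if it is already running

-- STOP REPLICA;

-- Configure replication. A message may pop up here and can be ignored. Make sure the IP address of the source is correct.

CHANGE REPLICATION SOURCE TO SOURCE_HOST='192.168.100.101',SOURCE_PORT=13306,SOURCE_USER='root',SOURCE_PASSWORD='123456',SOURCE_LOG_FILE='binlog.000003',SOURCE_LOG_POS=156;

-- Start replication

START REPLICA;

-- Stop replication

-- STOP REPLICA;

-- Check on the replica that replication has started

SHOW REPLICA STATUS;

-- Skip one replication error

STOP REPLICA;

SET GLOBAL sql_replica_skip_counter=1;

START REPLICA;

SHOW REPLICA STATUS;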
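The output of SHOW REPLICA STATUS is long; the two fields that matter most for a quick check are Replica_IO_Running and Replica_SQL_Running, which should both be Yes. A minimal sketch for checking them from the host follows. It assumes the vertical (\G) output format of the mysql client; the function name replica_healthy is a hypothetical helper.

```shell
#!/bin/sh
# Read the output of "SHOW REPLICA STATUS\G" on stdin and succeed only
# when both replication threads report "Yes".
replica_healthy() {
    awk -F': *' '
        /^ *Replica_IO_Running:/  { io  = $2 }
        /^ *Replica_SQL_Running:/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example, using the replica container and password from this section:
#   docker exec m2 mysql -uroot -p123456 -e 'SHOW REPLICA STATUS\G' \
#       | replica_healthy && echo "replication is running"
```

The patterns include the trailing colon on purpose, so that the separate Replica_SQL_Running_State field is not picked up.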

7. The docker file system

https://mp.weixin.qq.com/s/cWSC9hQ6JHCAMRO4jrZ7VQ

openjdk

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/70f5493f3f730dee6f0f17c9c2a19b07d00f621024c0bedbdb8a77b8d7ded686/diff:/var/lib/docker/overlay2/38e89dd21cb49ba42eddab9dc74f0c917d0d6ddc364be9fc4f91a64b7c6e3980/diff",

"MergedDir": "/var/lib/docker/overlay2/d0f111678dc06c5e946a219ef56ae420edd0e745723d50f5933cc3cf643ae432/merged",

"UpperDir": "/var/lib/docker/overlay2/d0f111678dc06c5e946a219ef56ae420edd0e745723d50f5933cc3cf643ae432/diff",

"WorkDir": "/var/lib/docker/overlay2/d0f111678dc06c5e946a219ef56ae420edd0e745723d50f5933cc3cf643ae432/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:32ac9dd9610b7b8c69248fb0454d9aad8082c327a5a7a46c7527a7fc0c15be37",

"sha256:3edc5fd20034bc55506e4a3a04896279f6118268b6280b23268a24392fa9aa82",

"sha256:e566764ec22375c8e969346b69a2301a9ca898441ca9f2a939cc87220978068f"

]

},

springboot2021

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/d0f111678dc06c5e946a219ef56ae420edd0e745723d50f5933cc3cf643ae432/diff:/var/lib/docker/overlay2/70f5493f3f730dee6f0f17c9c2a19b07d00f621024c0bedbdb8a77b8d7ded686/diff:/var/lib/docker/overlay2/38e89dd21cb49ba42eddab9dc74f0c917d0d6ddc364be9fc4f91a64b7c6e3980/diff",

"MergedDir": "/var/lib/docker/overlay2/7ba96c45d138cc35110bbdbf6606fcbb2fb3f9b9431fed4e47ecbd4421f21e5b/merged",

"UpperDir": "/var/lib/docker/overlay2/7ba96c45d138cc35110bbdbf6606fcbb2fb3f9b9431fed4e47ecbd4421f21e5b/diff",

"WorkDir": "/var/lib/docker/overlay2/7ba96c45d138cc35110bbdbf6606fcbb2fb3f9b9431fed4e47ecbd4421f21e5b/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:32ac9dd9610b7b8c69248fb0454d9aad8082c327a5a7a46c7527a7fc0c15be37",

"sha256:3edc5fd20034bc55506e4a3a04896279f6118268b6280b23268a24392fa9aa82",

"sha256:e566764ec22375c8e969346b69a2301a9ca898441ca9f2a939cc87220978068f",

"sha256:cea643473484bf1e4438bc03e6e5edf796ca3577a758eefa1424ce99bee9fd21"

]

},

springboot2021:kafka

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/d0f111678dc06c5e946a219ef56ae420edd0e745723d50f5933cc3cf643ae432/diff:/var/lib/docker/overlay2/70f5493f3f730dee6f0f17c9c2a19b07d00f621024c0bedbdb8a77b8d7ded686/diff:/var/lib/docker/overlay2/38e89dd21cb49ba42eddab9dc74f0c917d0d6ddc364be9fc4f91a64b7c6e3980/diff",

"MergedDir": "/var/lib/docker/overlay2/0191386a863b4b58ceb71bafc99147b90602470cca235700bdbba4d4aebe5c86/merged",

"UpperDir": "/var/lib/docker/overlay2/0191386a863b4b58ceb71bafc99147b90602470cca235700bdbba4d4aebe5c86/diff",

"WorkDir": "/var/lib/docker/overlay2/0191386a863b4b58ceb71bafc99147b90602470cca235700bdbba4d4aebe5c86/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:32ac9dd9610b7b8c69248fb0454d9aad8082c327a5a7a46c7527a7fc0c15be37",

"sha256:3edc5fd20034bc55506e4a3a04896279f6118268b6280b23268a24392fa9aa82",

"sha256:e566764ec22375c8e969346b69a2301a9ca898441ca9f2a939cc87220978068f",

"sha256:6d3af2e8be297e03615d6bfbeeeed8251a9928e3a425179ed75345f93c463d4e"

]

},

/var/lib/docker/overlay2 holds the layer data of the images.

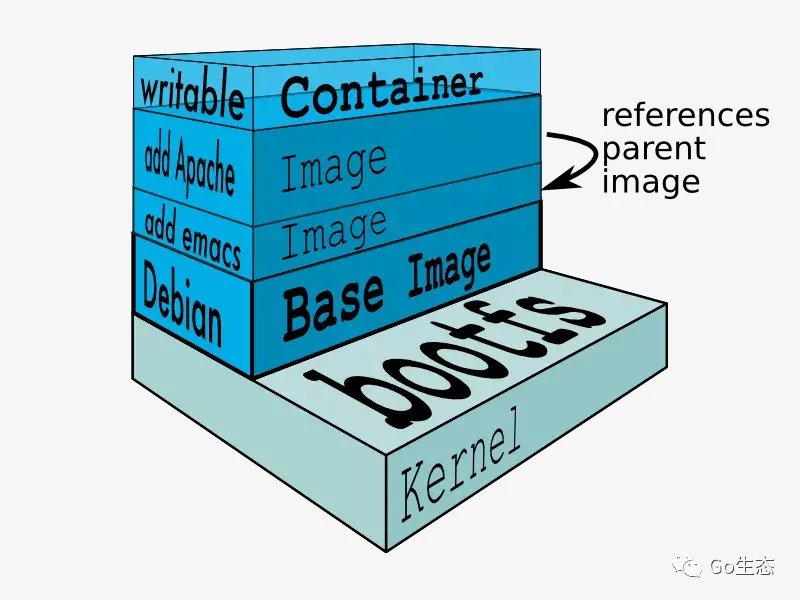
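Comparing the LowerDir values above shows how layers are shared: springboot2021 and springboot2021:kafka list the same three directories, and the first of them (the ID starting with d0f111678d) is the UpperDir of openjdk. The sketch below splits a LowerDir string into one directory ID per line so that such lists are easier to compare; lower_layers is a hypothetical helper name, and the docker inspect call in the comment assumes the overlay2 driver.

```shell
#!/bin/sh
# Split an overlay2 LowerDir value (colon-separated paths ending in /diff)
# into one layer directory ID per line, topmost lower layer first.
lower_layers() {
    printf '%s\n' "$1" | tr ':' '\n' | sed -e 's#/diff$##' -e 's#.*/##'
}

# Example:
#   lower_layers "$(docker inspect --format '{{.GraphDriver.Data.LowerDir}}' springboot2021)"
```

Running it for two images and comparing the two lists shows which directories they have in common.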

7.1 Concepts

How Docker images are stored efficiently: a look at what makes overlayfs clever

https://mp.weixin.qq.com/s/T-7ym4OYWNoBA68lzMlULA

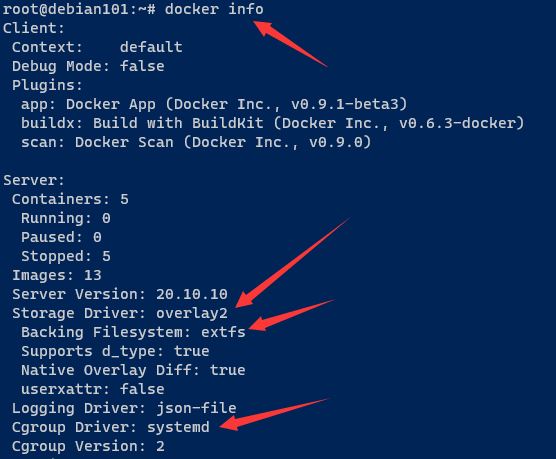

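Docker images are built in layers. The layers are stored under /var/lib/docker/<storage-driver>/, where the storage driver can be one of several, such as AUFS, OverlayFS, VFS or Btrfs. The docker info command shows which storage driver is in use.

https://docs.docker.com/storage/storagedriver/overlayfs-driver/ (official Docker documentation)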

OverlayFS is a modern union filesystem that is similar to AUFS, but faster and with a simpler implementation. Docker provides two storage drivers for OverlayFS: the original overlay, and the newer and more stable overlay2.

The storage driver is configured in /etc/docker/daemon.json. Usually nothing needs to be configured, because overlay2 is the default:

{

"storage-driver": "overlay2"

}

Use docker info to check which storage driver is in use.
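docker info prints many lines; the one of interest starts with "Storage Driver:". A small sketch for extracting it follows (storage_driver is a hypothetical helper name; the line format is taken from the human-readable docker info output).

```shell
#!/bin/sh
# Read "docker info" output on stdin and print only the storage driver name.
storage_driver() {
    sed -n 's/^ *Storage Driver: *//p'
}

# Example:
#   docker info 2>/dev/null | storage_driver
```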

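Images must be protected: an image is read-only, and a single copy is shared by all containers, which saves storage space.

Each container instance needs its own writable content.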

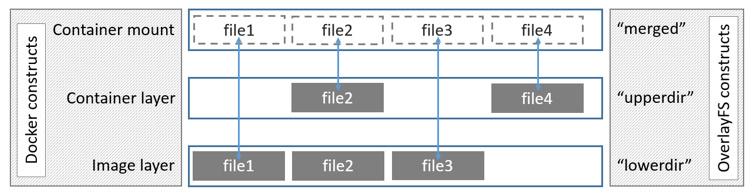

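OverlayFS uses two directories, places one on top of the other, and presents them as a single unified view. The directories are usually called layers, and the layering technique is called a union mount. In OverlayFS terms the lower directory is lowerdir, the upper directory is upperdir, and the unified view that is exposed is merged.

How docker images use it: on the Linux host overlayfs has only two levels. One directory sits below and holds the image; the other sits above and holds the container's data. The lower directory is called lowerdir, the upper one upperdir, and the unified filesystem presented to the container is merged. When a file has to be modified, copy-on-write copies it from the read-only lower level into the writable upper level; the change is made there and the result stays in the upper level. In Docker, the read-only level underneath is the image and the writable level is the container.

With the original overlay driver, every image layer is kept in its own directory under /var/lib/docker/overlay and is tied to the data of the layers beneath it through hard links. The overlay2 driver used here keeps its layers under /var/lib/docker/overlay2 and passes several lower directories to a single mount instead, as the LowerDir values shown earlier illustrate.

In this way overlay can combine many underlying directories and present them to the docker container as one unified view.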

root@debian101:~/filetest# pwd

/root/filetest

# create the directories

mkdir lowerdir &&

mkdir -p upperdir/upper &&

mkdir -p upperdir/work &&

mkdir merged

# create some marker files (run from /root/filetest)

echo "mirror_file_1" > lowerdir/mirror_file_1 && echo "mirror_file_2" > lowerdir/mirror_file_2

echo "upper_file_1" > upperdir/upper/upper_file_1 && echo "upper_file_2" > upperdir/upper/upper_file_2

apt-get install tree

root@debian101:~/filetest# tree

.

├── lowerdir

│   ├── mirror_file_1

│   └── mirror_file_2

├── merged    #<------- no files here yet

└── upperdir

    ├── upper

    │   ├── upper_file_1

    │   └── upper_file_2

    └── work

5 directories, 4 files

# mount the overlay onto merged

root@debian101:~/filetest# mount -t overlay overlay -o lowerdir=./lowerdir,upperdir=./upperdir/upper,workdir=./upperdir/work ./merged

root@debian101:~/filetest# tree

.

├── lowerdir

│   ├── mirror_file_1

│   └── mirror_file_2

├── merged    #<------- the merged files now appear here

│   ├── mirror_file_1

│   ├── mirror_file_2

│   ├── upper_file_1

│   └── upper_file_2

└── upperdir

    ├── upper

    │   ├── upper_file_1

    │   └── upper_file_2

    └── work

        └── work

6 directories, 8 files

root@debian101:~/filetest# pwd

/root/filetest

root@debian101:~/filetest# df -h |grep /root/filetest/merged

overlay 48G 6.3G 40G 14% /root/filetest/merged
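The lookup order behind the merged view, and the copy-up step of copy-on-write, can be modelled with plain directories and no mount at all. The sketch below is a simplified model under stated assumptions, not how the kernel implements overlayfs: it handles regular files only, ignores whiteouts and directory merging, and the names merged_lookup and cow_append are hypothetical.

```shell
#!/bin/sh
# Simplified model of an overlay mount using plain directories (no root needed).
# Whiteouts, directory merging and opaque directories are NOT modelled.

# Print the path that the merged view would serve for a file name.
# Layers are checked in the order given, so pass the upper directory first.
merged_lookup() {
    name=$1; shift
    for layer in "$@"; do
        if [ -e "$layer/$name" ]; then
            printf '%s\n' "$layer/$name"
            return 0
        fi
    done
    return 1
}

# Append a line to a file the copy-on-write way: copy it up from the
# read-only lower directory first, then change only the upper copy.
cow_append() {
    name=$1; text=$2; upper=$3; lower=$4
    if [ ! -e "$upper/$name" ] && [ -e "$lower/$name" ]; then
        cp "$lower/$name" "$upper/$name"
    fi
    printf '%s\n' "$text" >> "$upper/$name"
}
```

Tried on an unmounted copy of the directories above, merged_lookup mirror_file_1 upperdir/upper lowerdir first resolves to the file in lowerdir; after cow_append mirror_file_1 changed upperdir/upper lowerdir it resolves to the copy in upperdir/upper, while the file in lowerdir keeps its original content.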

7.2 How it works

7.2.1 Images and layers

https://docs.docker.com/storage/storagedriver/

This section covers how Docker builds and stores images, and how these images are used by containers.

A Docker image is built up from a series of layers. Each layer represents an instruction in the image’s Dockerfile. For example:

# syntax=docker/dockerfile:1

FROM ubuntu:18.04

LABEL org.opencontainers.image.authors="org@example.com"

COPY . /app

RUN make /app

RUN rm -r $HOME/.cache

CMD python /app/app.py

This Dockerfile contains four commands. Commands that modify the filesystem create a layer. The FROM statement starts out by creating a layer from the ubuntu:18.04 image. The LABEL command only modifies the image’s metadata, and does not produce a new layer. The COPY command adds some files from your Docker client’s current directory. The first RUN command builds your application using the make command, and writes the result to a new layer. The second RUN command removes a cache directory, and writes the result to a new layer. Finally, the CMD instruction specifies what command to run within the container, which only modifies the image’s metadata, which does not produce an image layer.

Each layer is only a set of differences from the layer before it. Note that both adding, and removing files will result in a new layer. In the example above, the $HOME/.cache directory is removed, but will still be available in the previous layer and add up to the image’s total size. Refer to the Best practices for writing Dockerfiles and use multi-stage builds sections to learn how to optimize your Dockerfiles for efficient images.
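As a rough illustration of the rule above, the instructions that write to the image filesystem can be picked out of a Dockerfile with a one-line filter. This is a deliberately simplified sketch: it only considers RUN, COPY and ADD, does not join line continuations, and layer_instructions is a hypothetical helper name. For a built image, docker history <image> shows the actual layers and their sizes.

```shell
#!/bin/sh
# Read a Dockerfile on stdin and print the instructions that write to the
# image filesystem. Simplification: only RUN, COPY and ADD are considered,
# and multi-line instructions are not joined.
layer_instructions() {
    awk 'toupper($1) ~ /^(RUN|COPY|ADD)$/'
}

# Example:
#   layer_instructions < Dockerfile
```

For the example Dockerfile above this prints the COPY line and the two RUN lines, which are the three layers added on top of those coming from ubuntu:18.04.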

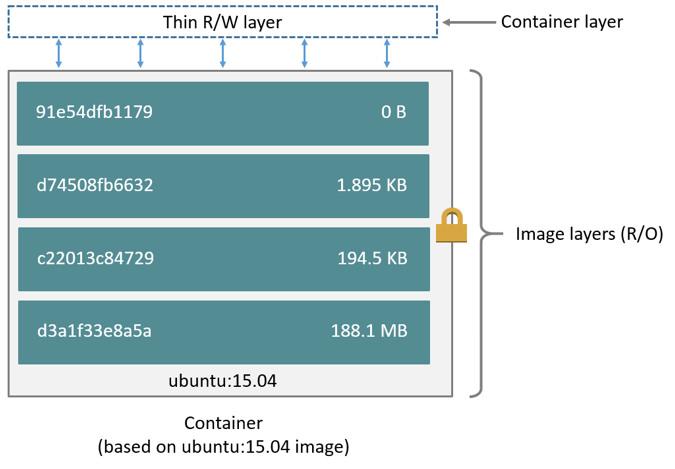

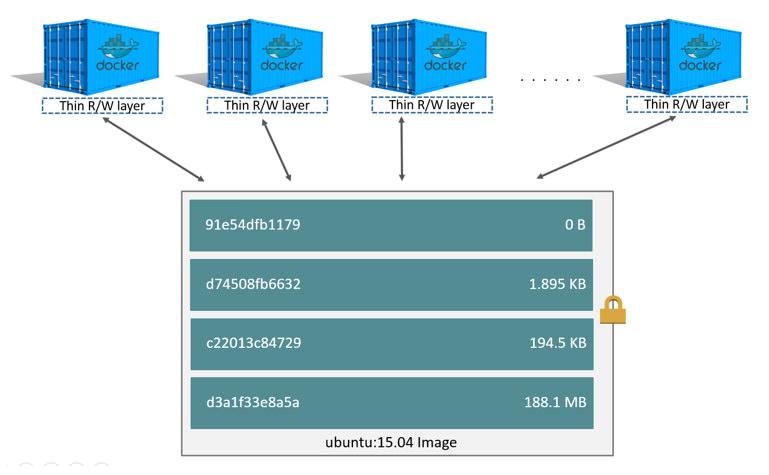

The layers are stacked on top of each other. When you create a new container, you add a new writable layer on top of the underlying layers. This layer is often called the “container layer”. All changes made to the running container, such as writing new files, modifying existing files, and deleting files, are written to this thin writable container layer. The diagram below shows a container based on an ubuntu:15.04 image.

7.2.2 Containers and layers

The major difference between a container and an image is the top writable layer. All writes to the container that add new or modify existing data are stored in this writable layer. When the container is deleted, the writable layer is also deleted. The underlying image remains unchanged.

Because each container has its own writable container layer, and all changes are stored in this container layer, multiple containers can share access to the same underlying image and yet have their own data state. The diagram below shows multiple containers sharing the same Ubuntu 15.04 image.

Docker uses storage drivers to manage the contents of the image layers and the writable container layer. Each storage driver handles the implementation differently, but all drivers use stackable image layers and the copy-on-write (CoW) strategy.

7.2.3 Container size on disk

Print the container sizes:

docker ps --size --format "table {{.ID}}\t{{.Image}}\t{{.Names}}\t{{.Size}}"

To view the approximate size of a running container, you can use the docker ps -s command. Two different columns relate to size.

- size: the amount of data (on disk) that is used for the writable layer of each container.

- virtual size: the amount of data used for the read-only image data used by the container plus the container’s writable layer size. Multiple containers may share some or all read-only image data. Two containers started from the same image share 100% of the read-only data, while two containers with different images which have layers in common share those common layers. Therefore, you can’t just total the virtual sizes. This over-estimates the total disk usage by a potentially non-trivial amount.

The total disk space used by all of the running containers on disk is some combination of each container’s size and the virtual size values. If multiple containers started from the same exact image, the total size on disk for these containers would be SUM (size of containers) plus one image size (virtual size - size).

This also does not count the following additional ways a container can take up disk space:

- Disk space used for log files stored by the logging-driver. This can be non-trivial if your container generates a large amount of logging data and log rotation is not configured.

- Volumes and bind mounts used by the container.

- Disk space used for the container’s configuration files, which are typically small.

- Memory written to disk (if swapping is enabled).

- Checkpoints, if you’re using the experimental checkpoint/restore feature.
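The formula for containers started from the same image, SUM (size of containers) plus one image size, can be written down as a small calculation. The numbers in the comment are made up for illustration, and total_container_disk is a hypothetical helper name; all values are plain integers in the same unit.

```shell
#!/bin/sh
# Total disk usage of several containers that share one image.
# $1: image size (virtual size minus size), remaining arguments: the
# writable-layer size of each container.
total_container_disk() {
    image=$1; shift
    total=$image
    for s in "$@"; do
        total=$((total + s))
    done
    echo "$total"
}

# Illustration with made-up numbers: an image of 500 MB and three containers
# whose writable layers hold 10, 20 and 30 MB.
#   total_container_disk 500 10 20 30      # 500 + 10 + 20 + 30 = 560
# Adding up the three virtual sizes instead (510 + 520 + 530 = 1560) would
# count the shared image three times.
```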

7.3 References

OverlayFS https://blog.programster.org/overlayfs

Understanding overlayfs in depth (1): first look https://blog.csdn.net/qq_15770331/article/details/96699386

Understanding overlayfs in depth (2): usage and how it works https://blog.csdn.net/luckyapple1028/article/details/78075358

How docker image layering works: overlay https://blog.csdn.net/baixiaoshi/article/details/88924281

Introduction to the OverlayFS docker storage driver https://www.cnblogs.com/styshoo/p/6503953.html

8. docker resource and permission management

Docker internals in five minutes

https://cloud.51cto.com/art/202112/693197.htm

https://mp.weixin.qq.com/s/o5nZZzOTNXOFjv2aaIZ6OA namespace

9. How docker networking works

https://mp.weixin.qq.com/s/SqCwa069y4dcVQ1fWNQ0Wg (a very thorough explanation)

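# connectivity test between network namespaces (simulated containers)

# list the network namespaces

#ip netns list

BR=bridge2

HOST_IP=192.168.200.33

# at this point both ends of each veth pair (e.g. client2-veth and client2-veth-br) still belong to the default namespace, just like a physical NIC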

ip link add client2-veth type veth peer name client2-veth-br

ip link add server2-veth type veth peer name server2-veth-br

# add a bridge named bridge2

ip link add $BR type bridge

# add two namespaces

ip netns add client2

ip netns add server2

ip link set client2-veth netns client2

ip link set server2-veth netns server2

ip link set client2-veth-br master $BR

ip link set server2-veth-br master $BR

#ip link set dev client2-veth-br master $BR

#ip link set dev server2-veth-br master $BR

ip link set $BR up

ip link set client2-veth-br up

ip link set server2-veth-br up

ip netns exec client2 ip link set client2-veth up

ip netns exec server2 ip link set server2-veth up

ip netns exec client2 ip addr add 192.168.200.11/24 dev client2-veth

ip netns exec server2 ip addr add 192.168.200.12/24 dev server2-veth

ip addr add 192.168.200.1/24 dev $BR

# start testing -----

# show the routing tables

ip netns exec client2 route -n

ip netns exec server2 route -n

# show the configuration

root@debian101:~# ip netns exec client2 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: client2-veth@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether b6:b0:6f:3c:99:26 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.200.11/24 scope global client2-veth

valid_lft forever preferred_lft forever

inet6 fe80::b4b0:6fff:fe3c:9926/64 scope link

valid_lft forever preferred_lft forever

root@debian101:~# ip netns exec server2 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

8: server2-veth@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 62:a1:ef:0a:4b:c3 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.200.12/24 scope global server2-veth

valid_lft forever preferred_lft forever

inet6 fe80::60a1:efff:fe0a:4bc3/64 scope link

valid_lft forever preferred_lft forever

# client2 can ping bridge2

ip netns exec client2 ping 192.168.200.1 -c 5

# server2 can ping bridge2

ip netns exec server2 ping 192.168.200.1 -c 5

root@debian101:~# ping 192.168.200.11

PING 192.168.200.11 (192.168.200.11) 56(84) bytes of data.

64 bytes from 192.168.200.11: icmp_seq=1 ttl=64 time=0.061 ms

64 bytes from 192.168.200.11: icmp_seq=2 ttl=64 time=0.061 ms

64 bytes from 192.168.200.11: icmp_seq=3 ttl=64 time=0.067 ms

^C

--- 192.168.200.11 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2056ms

rtt min/avg/max/mdev = 0.061/0.063/0.067/0.003 ms

root@debian101:~# ping 192.168.200.12

PING 192.168.200.12 (192.168.200.12) 56(84) bytes of data.

64 bytes from 192.168.200.12: icmp_seq=1 ttl=64 time=0.235 ms

64 bytes from 192.168.200.12: icmp_seq=2 ttl=64 time=0.077 ms

^C

# client2 cannot ping server2

ip netns exec client2 ping 192.168.200.12 -c 5

# server2 cannot ping client2

ip netns exec server2 ping 192.168.200.11 -c 5

# install traceroute

apt-get install traceroute

root@debian101:~# ip netns exec client2 traceroute 192.168.200.12

traceroute to 192.168.200.12 (192.168.200.12), 30 hops max, 60 byte packets

1 * * *

2 * * *

3 * * *

4 * * *^C

Why does this fail?
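A likely explanation, stated here as an assumption rather than something the notes above verified: on a host where docker is running, the default policy of the iptables FORWARD chain is typically DROP, and when the br_netfilter module is active (net.bridge.bridge-nf-call-iptables = 1) frames that are merely switched between two ports of bridge2 also pass through that chain. Pings between the host and a namespace are not forwarded traffic, which would explain why they succeed. The sketch below only encodes this decision; bridged_traffic_verdict is a hypothetical helper, and the commands for reading the real values and for one possible fix are given as comments.

```shell
#!/bin/sh
# Decide whether frames bridged between two ports are subject to the
# iptables FORWARD chain and would hit its default policy.
# $1: value of net.bridge.bridge-nf-call-iptables (0 or 1)
# $2: default policy of the FORWARD chain (ACCEPT or DROP)
# Simplification: explicit rules in the chain are not taken into account.
bridged_traffic_verdict() {
    if [ "$1" = "1" ] && [ "$2" = "DROP" ]; then
        echo "dropped unless a FORWARD rule accepts it"
    else
        echo "forwarded"
    fi
}

# Reading the two inputs on the host (needs root):
#   nf=$(sysctl -n net.bridge.bridge-nf-call-iptables)
#   policy=$(iptables -S FORWARD | awk '$1 == "-P" { print $3 }')
#   bridged_traffic_verdict "$nf" "$policy"
#
# One possible fix: accept traffic that stays on the test bridge.
#   iptables -I FORWARD -i bridge2 -o bridge2 -j ACCEPT
```

If the verdict is "forwarded" and the ping still fails, the cause lies elsewhere and the explanation above does not apply.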

# show the routing table of the host

root@debian101:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.100.1 0.0.0.0 UG 0 0 0 enp0s3

169.254.0.0 0.0.0.0 255.255.0.0 U 1000 0 0 enp0s3

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-4fbb572e2d87

192.168.100.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s3

https://blog.csdn.net/guotianqing/article/details/82356096 accessing the external network

https://mp.weixin.qq.com/s/mMrNURlKys65DGynJ4ridw Simulating Docker container networking by hand

# let the namespaces reach the external network

# add a default route

ip netns exec client2 ip route add default via 192.168.200.1

ip netns exec server2 ip route add default via 192.168.200.1

# remove the default route

# ip netns exec client2 ip route del default via 192.168.200.1

# ip netns exec server2 ip route del default via 192.168.200.1
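A default route alone is usually not enough for the namespaces to reach the external network: the host also has to forward their packets and rewrite the private source address. The sketch below only prints the commands one might run as root, so that they can be reviewed first. The subnet 192.168.200.0/24 and the bridge name bridge2 come from the script above; the function name print_nat_setup is hypothetical, and the rules follow a common pattern that the original notes did not test.

```shell
#!/bin/sh
# Print (not execute) the host-side commands for giving a bridged subnet
# access to the outside world.
# $1: subnet behind the bridge, $2: bridge name
print_nat_setup() {
    subnet=$1; bridge=$2
    # let the kernel route packets between interfaces
    echo "sysctl -w net.ipv4.ip_forward=1"
    # rewrite the source address of packets leaving through another interface
    echo "iptables -t nat -A POSTROUTING -s $subnet ! -o $bridge -j MASQUERADE"
    # allow outgoing traffic from the bridge and the replies to it
    echo "iptables -A FORWARD -i $bridge -j ACCEPT"
    echo "iptables -A FORWARD -o $bridge -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT"
}

# Review the output first, then pipe it to a root shell if it looks right:
#   print_nat_setup 192.168.200.0/24 bridge2
#   print_nat_setup 192.168.200.0/24 bridge2 | sh
```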

Official documentation: https://docs.docker.com/network/

List the current network namespaces

ip netns list

Show the IP configuration of the client2 namespace

ip netns exec client2 ip addr

9.1 none network

9.2 host network

9.2 bridge network

Official bridge networking tutorial

https://docs.docker.com/network/network-tutorial-standalone/

List the docker networks

root@debian101:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

f4d2c009ffc1 bridge bridge local

b0b08cab320b host host local

de05eddb73d3 none null local

# the alpine linux image is under 6 MB

# create 2 containers on the same network

docker network create testnet1

root@debian101:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

f4d2c009ffc1 bridge bridge local

b0b08cab320b host host local

de05eddb73d3 none null local

4fbb572e2d87 testnet1 bridge local

docker rm -f test1 test2

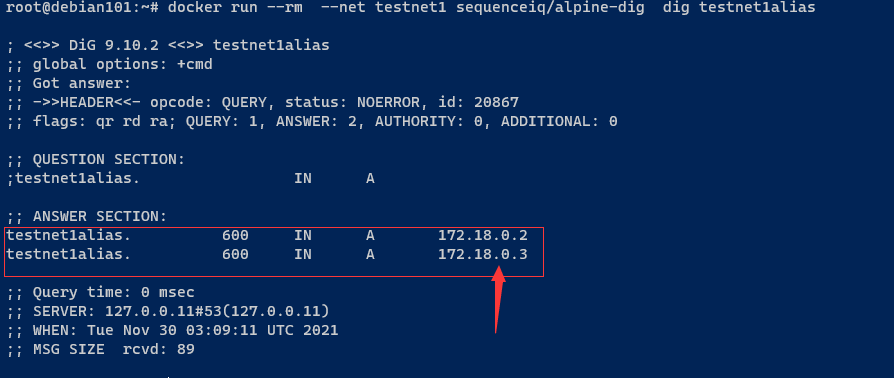

docker run -itd --name test1 --network-alias testnet1alias --net testnet1 alpine

docker run -itd --name test2 --network-alias testnet1alias --net testnet1 alpine

Show the IP addresses of the 2 containers

docker inspect --format '{{.NetworkSettings.Networks.testnet1.IPAddress}}' test1

docker inspect --format '{{.NetworkSettings.Networks.testnet1.IPAddress}}' test2

# test network connectivity

#docker run --rm --net testnet1 alpine ping -c 1 test1

#docker run --rm --net testnet1 alpine ping -c 1 test2

for i in test1 test2 ;\

do \

docker run --rm --net testnet1 alpine ping -c 1 $i ;\

done ;

# test round-robin load balancing: ping testnet1alias repeatedly; the answers rotate between the two containers

for((i=1;i<=10;i++)); \

do \

docker run --rm --net testnet1 alpine ping -c 1 testnet1alias ; \

done ;

====== output ======

PING testnet1alias (172.18.0.2): 56 data bytes

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.128 ms

--- testnet1alias ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.128/0.128/0.128 ms

PING testnet1alias (172.18.0.3): 56 data bytes #<<==============

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.220 ms

--- testnet1alias ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.220/0.220/0.220 ms

PING testnet1alias (172.18.0.2): 56 data bytes #<<==============

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.129 ms
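To see how evenly the alias is spread over the two containers, the resolved addresses can be counted from the ping output. The sketch relies on the "PING name (ip): ..." header line printed by the alpine ping shown above; count_ping_targets is a hypothetical helper name.

```shell
#!/bin/sh
# Read ping output on stdin, pick the address from each "PING name (ip)"
# header line and print how often each address was chosen.
count_ping_targets() {
    sed -n 's/^PING [^ ]* (\([0-9.]*\)).*/\1/p' | sort | uniq -c | sed 's/^ *//'
}

# Example, reusing the loop from above:
#   for i in 1 2 3 4 5 6 7 8 9 10; do
#       docker run --rm --net testnet1 alpine ping -c 1 testnet1alias
#   done | count_ping_targets
```

Each output line holds a count followed by an address; with two containers behind the alias, two lines with similar counts are what round-robin resolution would produce.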

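# test DNS resolution; see the figure below for the result

docker run --rm --net testnet1 sequenceiq/alpine-dig dig testnet1alias

# remove the network (remove test1 and test2 first; a network with attached containers cannot be removed)

docker network rm testnet1

DNS query

Create a custom bridge network and attach containers to it

https://z.itpub.net/article/detail/5CE27E27E0331A3666F0E95EFD040495 A thorough article on how Docker networking works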

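9.3 container network

This is similar to the pause container that is started first in a k8s pod: it only sets up the network namespace.

The other containers of the pod then share that namespace and reach each other via localhost.

9.4 References

https://mp.weixin.qq.com/s/rVf2_0yefEgAyLgJgq5-kw Understanding K8s container network virtualization in one article

https://mp.weixin.qq.com/s/mMrNURlKys65DGynJ4ridw Simulating Docker container networking by hand

https://mp.weixin.qq.com/s/k9DhpXHmvA9M1eib_R-zIA How container networking works, in 5 diagrams

9.5 Routing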

C:\Users\xue>route PRINT -4

===========================================================================

Interface List

  6...20 1a 06 bd 31 69 ......Qualcomm Atheros AR8172/8176/8178 PCI-E Fast Ethernet Controller (NDIS 6.30)

 50...00 15 5d 8a f4 7e ......Hyper-V Virtual Ethernet Adapter

 12...0a 00 27 00 00 0c ......VirtualBox Host-Only Ethernet Adapter

 16...82 56 f2 f1 b0 51 ......Microsoft Wi-Fi Direct Virtual Adapter

  3...82 56 f2 f1 b8 51 ......Microsoft Wi-Fi Direct Virtual Adapter #2

 13...00 ff 79 83 14 58 ......Sangfor SSL VPN CS Support System VNIC

  9...80 56 f2 f1 b0 51 ......Broadcom 802.11n Network Adapter

  1...........................Software Loopback Interface 1

===========================================================================

IPv4 Route Table

===========================================================================

Active Routes:

Network Destination          Netmask        Gateway        Interface  Metric

            0.0.0.0          0.0.0.0    192.168.1.1    192.168.1.105      55

          127.0.0.0        255.0.0.0        On-link        127.0.0.1     331

          127.0.0.1  255.255.255.255        On-link        127.0.0.1     331

    127.255.255.255  255.255.255.255        On-link        127.0.0.1     331

       172.30.192.0    255.255.240.0        On-link     172.30.192.1     271

       172.30.192.1  255.255.255.255        On-link     172.30.192.1     271

     172.30.207.255  255.255.255.255        On-link     172.30.192.1     271

        192.168.1.0    255.255.255.0        On-link    192.168.1.105     311

      192.168.1.105  255.255.255.255        On-link    192.168.1.105     311

      192.168.1.255  255.255.255.255        On-link    192.168.1.105     311

      192.168.100.0    255.255.255.0        On-link    192.168.100.1     281

      192.168.100.1  255.255.255.255        On-link    192.168.100.1     281

    192.168.100.255  255.255.255.255        On-link    192.168.100.1     281

          224.0.0.0        240.0.0.0        On-link        127.0.0.1     331

          224.0.0.0        240.0.0.0        On-link    192.168.100.1     281

          224.0.0.0        240.0.0.0        On-link    192.168.1.105     311

          224.0.0.0        240.0.0.0        On-link     172.30.192.1     271

    255.255.255.255  255.255.255.255        On-link        127.0.0.1     331

    255.255.255.255  255.255.255.255        On-link    192.168.100.1     281

    255.255.255.255  255.255.255.255        On-link    192.168.1.105     311

    255.255.255.255  255.255.255.255        On-link     172.30.192.1     271

===========================================================================