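Ceph version: 17.2.6

OS: CentOS 8.2

Hardware requirements: at least 2 disks per host (each host here has 2 disks)

According to the official documentation, CentOS 8 is not supported. I could only deploy on CentOS 8, so these are my notes on the manual deployment steps. (I don't know how to deploy Ceph through Kubernetes, so this is the only method available to me.)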

| Hostname | IP | Ceph roles |
| --- | --- | --- |
| ceph-node171 | 192.168.188.171 | ceph-mon, ceph-osd, ceph-mgr, ceph-mds |
| ceph-node172 | 192.168.188.172 | ceph-mon, ceph-osd, ceph-mgr, ceph-mds |
| ceph-node173 | 192.168.188.173 | ceph-mon, ceph-osd, ceph-mgr, ceph-mds |
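Step 7 looks up each node's IP by grepping /etc/hosts, so every node needs entries for all three hostnames. The following is a minimal sketch that prints those entries, assuming the hostnames follow the `ceph-node<last octet>` pattern seen in the table. The function name `print_hosts_entries` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines for the nodes in the table.
# Assumption: each hostname is "ceph-node" plus the last octet of its IP.
print_hosts_entries() {
    local prefix="$1"; shift      # network prefix, e.g. 192.168.188
    local octet
    for octet in "$@"; do
        printf '%s.%s ceph-node%s\n' "${prefix}" "${octet}" "${octet}"
    done
}

print_hosts_entries 192.168.188 171 172 173
```

After reviewing the output, it could be appended on each node with `print_hosts_entries 192.168.188 171 172 173 >> /etc/hosts`, provided the entries are not already present.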

1. Add the Ceph repo

cat > /etc/yum.repos.d/ceph.repo <<EOF

[ceph]

name=ceph

baseurl=http://mirrors.bclinux.org/ceph/rpm-17.2.6/el8/x86_64/

gpgcheck=0

enabled=1

[ceph-noarch]

name=cephnoarch

baseurl=http://mirrors.bclinux.org/ceph/rpm-17.2.6/el8/noarch/

gpgcheck=0

enabled=1

EOF

2. Disable SELinux and firewalld

systemctl disable --now firewalld

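sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# The config change only takes effect after a reboot; switch to permissive mode for the current boot
setenforce 0

3. Passwordless SSH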

ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa

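# sshpass -e reads the password from the SSHPASS environment variable; replace 123456 with the real password
export SSHPASS=123456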

for i in ceph-node171 ceph-node172 ceph-node173 ;do

echo ${i}

sshpass -e ssh-copy-id ${i}

done

4. Install Ceph and its dependencies (if the system was not installed on LVM, the lvm2 package is also required)

yum install -y ceph ceph-volume cryptsetup lvm2

5. Generate the configuration file, with the following …

rm -rf cephinstall

mkdir -p cephinstall

cd cephinstall

CLUSTERID=$(uuidgen)

cat>ceph.conf<<EOF

[global]

fsid = ${CLUSTERID}

mon_initial_members = ceph-node171,ceph-node172,ceph-node173

mon_host = 192.168.188.171,192.168.188.172,192.168.188.173

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd pool default size = 2

osd max object name len = 256

osd max object namespace len = 64

mon_pg_warn_max_per_osd = 2000

mon clock drift allowed = 30

mon clock drift warn backoff = 30

rbd cache writethrough until flush = false

public network = 192.168.188.0/24

mds_cache_size = 500000

mds_bal_fragment_size_max=10000000

[osd]

filestore xattr use omap = true

filestore min sync interval = 10

filestore max sync interval = 15

filestore queue max ops = 25000

filestore queue max bytes = 1048576000

filestore queue committing max ops = 50000

filestore queue committing max bytes = 10485760000

filestore op threads = 32

[mds.ceph-node171]

host = ceph-node171

[mds.ceph-node172]

host = ceph-node172

[mds.ceph-node173]

host = ceph-node173

EOF

6. Generate the keys required by the Ceph cluster

#Create a keyring for your cluster and generate a monitor secret key

ceph-authtool --create-keyring ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

#Generate an administrator keyring, generate a client.admin user and add the user to the keyring

ceph-authtool --create-keyring ceph.client.admin.keyring --gen-key -n client.admin --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'

#Generate a bootstrap-osd keyring, generate a client.bootstrap-osd user and add the user to the keyring

ceph-authtool --create-keyring ceph.keyring --gen-key -n client.bootstrap-osd --cap mon 'profile bootstrap-osd' --cap mgr 'allow r'

#Add the generated keys to the ceph.mon.keyring

ceph-authtool ceph.mon.keyring --import-keyring ceph.client.admin.keyring

ceph-authtool ceph.mon.keyring --import-keyring ceph.keyring

#Change the owner for ceph.mon.keyring

chown ceph:ceph ceph.mon.keyring

7. Deploy ceph-mon

ADDMONS=""

for i in ceph-node171 ceph-node172 ceph-node173;do

IP=$(grep $i /etc/hosts|sort|uniq|awk '{print $1}')

COMMANDP=" --add ${i} ${IP} "

ADDMONS=${ADDMONS}${COMMANDP}

done

echo ${ADDMONS}

monmaptool --create ${ADDMONS} --fsid ${CLUSTERID} monmap
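The lookup above uses a plain `grep`, which also matches hostnames that merely contain the node name and silently yields an empty IP when an entry is missing. Below is a minimal sketch of the same argument assembly with exact hostname matching and an explicit failure. The function name `build_mon_args` is hypothetical, and the hosts file is assumed to use the usual `<ip> <hostname> [aliases...]` layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "--add <host> <ip>" arguments for monmaptool.
# Assumption: the hosts file has one "<ip> <hostname> [aliases...]" entry per node.
build_mon_args() {
    local hosts_file="$1"; shift
    local host ip args=""
    for host in "$@"; do
        # Compare whole fields so ceph-node17 would not match ceph-node171;
        # comment lines are skipped and the first matching entry wins.
        ip=$(awk -v h="${host}" '
            !/^[[:space:]]*#/ {
                for (f = 2; f <= NF; f++)
                    if ($f == h) { print $1; exit }
            }' "${hosts_file}")
        if [ -z "${ip}" ]; then
            echo "no entry for ${host} in ${hosts_file}" >&2
            return 1
        fi
        args="${args} --add ${host} ${ip}"
    done
    # Drop the leading space before printing
    echo "${args# }"
}
```

It could stand in for the loop with `ADDMONS=$(build_mon_args /etc/hosts ceph-node171 ceph-node172 ceph-node173) || exit 1`, so the script stops before `monmaptool` runs with incomplete arguments.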

for i in ceph-node171 ceph-node172 ceph-node173;do

ssh ${i} "systemctl stop ceph-mon@${i}"

scp monmap ${i}:/tmp

scp ceph.conf ${i}:/etc/ceph

scp ceph.client.admin.keyring ${i}:/etc/ceph

A=$(ssh ${i} mount |grep ceph|awk '{print $3}')

if [ "$A" != "" ];then

OSDID=$(echo $A|awk -F "-" '{print $NF}')

ssh ${i} "systemctl stop ceph-osd@${OSDID};umount $A"

fi

ssh ${i} "rm -rf /var/lib/ceph/*"

ssh ${i} "rm -f /tmp/ceph.mon.keyring"

scp ceph.mon.keyring ${i}:/tmp

ssh ${i} "chown ceph:ceph /tmp/ceph.mon.keyring /tmp/monmap"

ssh ${i} "sudo -u ceph mkdir -p /var/lib/ceph/mon/ceph-${i}"

ssh ${i} "sudo -u ceph ceph-mon --mkfs -i ${i} --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring"

# Inspect the generated files

#ssh ${i} "ls /var/lib/ceph/mon/ceph-$i/"

ssh ${i} "systemctl stop ceph-mon@${i};systemctl enable ceph-mon@${i};sleep 2;systemctl start ceph-mon@${i}"

done

8. Deploy ceph-mgr

for i in ceph-node171 ceph-node172 ceph-node173;do

ssh ${i} "mkdir -p /var/lib/ceph/mgr/ceph-${i}"

scp ceph.keyring ${i}:/var/lib/ceph/mgr/ceph-${i}/keyring

ssh ${i} "chown -R ceph:ceph /var/lib/ceph/mgr/"

ssh ${i} "ceph auth get-or-create mgr.${i} mon 'allow profile mgr' osd 'allow *' mds 'allow *'>/var/lib/ceph/mgr/ceph-${i}/keyring"

ssh ${i} "systemctl restart ceph-mgr@${i};systemctl enable ceph-mgr@${i}"

done

9. Deploy ceph-osd
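The script in this step picks the OSD disk by stripping digits from the name of the /boot partition, which does not work for device names such as `nvme0n1p1`. Before running it, the choice can be previewed with the sketch below, which parses `lsblk -P` output instead. The function name `pick_osd_disk` is hypothetical. It assumes /boot is a separate partition (as the original script does) and, like the original, does not check that the chosen disk is empty.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsblk -P -o NAME,TYPE,PKNAME,MOUNTPOINT` on stdin
# and print the first disk that does not hold the /boot partition.
# Assumption: mount points contain no spaces.
pick_osd_disk() {
    awk '
    {
        name = ""; type = ""; pk = ""; mnt = ""
        for (f = 1; f <= NF; f++) {
            n = index($f, "=")
            key = substr($f, 1, n - 1)
            val = substr($f, n + 1)
            gsub(/"/, "", val)
            if (key == "NAME") name = val
            else if (key == "TYPE") type = val
            else if (key == "PKNAME") pk = val
            else if (key == "MOUNTPOINT") mnt = val
        }
        if (type == "disk") disks[++count] = name
        if (mnt == "/boot") sysdisk = pk
    }
    END {
        # Refuse to guess when the system disk cannot be identified
        if (sysdisk == "") exit 2
        for (d = 1; d <= count; d++)
            if (disks[d] != sysdisk) { print disks[d]; exit }
        exit 1
    }'
}
```

For example, `ssh ceph-node171 "lsblk -P -o NAME,TYPE,PKNAME,MOUNTPOINT" | pick_osd_disk` prints the candidate disk for that node without modifying anything.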

OSDID=0

for i in ceph-node171 ceph-node172 ceph-node173;do

ssh ${i} "sudo -u ceph mkdir -p /var/lib/ceph/bootstrap-osd"

ssh ${i} "sudo -u ceph mkdir -p /var/lib/ceph/osd/ceph-${OSDID}"

scp ceph.keyring ${i}:/var/lib/ceph/bootstrap-osd/

ssh ${i} "chown ceph:ceph -R /var/lib/ceph/bootstrap-osd/"

CURRENTDISK=$(ssh ${i} "lsblk|grep -E /boot|awk '{print \$1}'|awk -F '─' '{print \$NF}'|sed 's?[0-9]??g'")

OSDDISK=$(ssh ${i} "lsblk|grep disk|grep -vE \"${CURRENTDISK}|rom\"|awk 'NR==1{print \$1}'")

echo ${OSDDISK}

A=$(ssh ${i} vgdisplay|grep ceph|awk '{print $NF}')

if [ "$A" != "" ];then

ssh ${i} "systemctl stop ceph-osd@${OSDID}"

OLDOSDID=$(ssh ${i} "dmsetup status|grep ^ceph|awk -F ':' '{print \$1}'")

if [ "${OLDOSDID}" != "" ];then

ssh ${i} "dmsetup remove ${OLDOSDID}"

fi

ssh ${i} "dd if=/dev/zero of=/dev/${OSDDISK} bs=512k count=1"

fi

ssh ${i} "ceph-volume lvm create --data /dev/${OSDDISK}"

ssh ${i} "systemctl restart ceph-osd@${OSDID};systemctl enable ceph-osd@${OSDID}"

OSDID=$((OSDID+1))

done

10. Deploy ceph-mds

for i in ceph-node171 ceph-node172 ceph-node173;do

ssh ${i} "sudo -u ceph mkdir -p /var/lib/ceph/mds/ceph-${i}"

ssh ${i} "ceph-authtool --create-keyring /var/lib/ceph/mds/ceph-${i}/keyring --gen-key -n mds.${i}"

ssh ${i} "chown ceph:ceph -R /var/lib/ceph/mds/ceph-${i}/"

ssh ${i} "ceph auth add mds.${i} osd 'allow rwx' mds 'allow' mon 'allow profile mds' -i /var/lib/ceph/mds/ceph-${i}/keyring"

#ssh ${i} "ceph auth get-or-create mds.${i} mon 'profile mds' mgr 'profile mds' mds 'allow *' osd 'allow *' > /var/lib/ceph/mds/ceph-${i}/keyring"

ssh ${i} "systemctl restart ceph-mds@${i};systemctl enable ceph-mds@${i}"

done

11. Fix the warning that msgr2 is not enabled on the monitors

for i in ceph-node171 ceph-node172 ceph-node173;do

ssh ${i} "ceph mon enable-msgr2"

done

12. Fix the "mons are allowing insecure global_id reclaim" warning

ceph config set mon auth_allow_insecure_global_id_reclaim false

13. Create CephFS

OSDNUM=$(echo "ceph-node171 ceph-node172 ceph-node173"|sed 's? ?\n?g'|wc -l)

if [ ${OSDNUM} -ge 5 ];then

POOLNUM=512

else

POOLNUM=128

fi
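The two thresholds above are fixed values. A commonly cited rule of thumb for the cluster-wide total is (number of OSDs × 100) / replica count, rounded up to a power of two, with that total then shared among the pools. The sketch below implements only that rule of thumb and is not what the original script does. The function name `suggest_pg_num` is hypothetical, and the PG autoscaler may adjust the values later if it is enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rule-of-thumb total PG count for a cluster.
# Assumption: about 100 PGs per OSD is the target (third argument overrides it).
suggest_pg_num() {
    local osds="$1" size="$2" target_per_osd="${3:-100}"
    local raw=$(( osds * target_per_osd / size ))
    local pg=1
    # Round up to the next power of two
    while [ "${pg}" -lt "${raw}" ]; do
        pg=$(( pg * 2 ))
    done
    echo "${pg}"
}

# 3 OSDs with "osd pool default size = 2", as in this deployment
suggest_pg_num 3 2
```

For this cluster the call yields 256 in total, which matches the two pools of 128 PGs each created in this step.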

##create ceph pool

ceph osd pool create fs_db_data ${POOLNUM}

ceph osd pool create fs_db_metadata ${POOLNUM}

ceph osd lspools

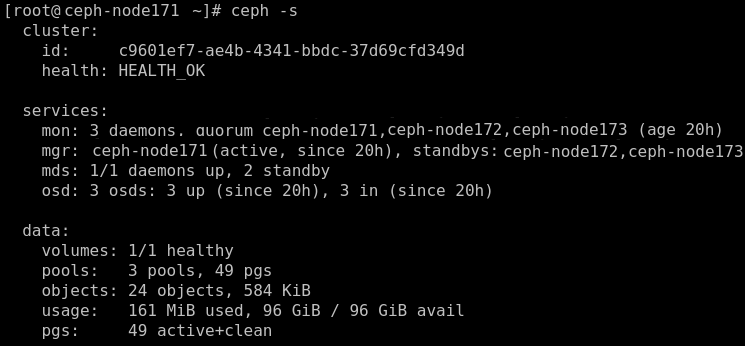

ceph fs new cephfs fs_db_metadata fs_db_data

14. Check the Ceph cluster status
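Commands typically used at this point are `ceph -s`, `ceph health detail`, `ceph osd tree`, and `ceph fs status`. Since the daemons need some time to settle after the previous steps, a small polling loop can help. This is a sketch only: the function name `wait_for_health_ok` and the `HEALTH_CMD` variable are made up for illustration, and it assumes `ceph health` prints a line starting with `HEALTH_OK` once the cluster is healthy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the cluster until it reports HEALTH_OK.
# HEALTH_CMD exists only so the command can be swapped out (assumption of this sketch).
HEALTH_CMD="${HEALTH_CMD:-ceph health}"

wait_for_health_ok() {
    local retries="${1:-30}" interval="${2:-10}"
    local attempt=1 status
    while [ "${attempt}" -le "${retries}" ]; do
        status=$(${HEALTH_CMD} 2>/dev/null)
        case "${status}" in
            HEALTH_OK*)
                echo "cluster healthy after ${attempt} check(s)"
                return 0
                ;;
        esac
        echo "attempt ${attempt}/${retries}: ${status:-no response}" >&2
        sleep "${interval}"
        attempt=$(( attempt + 1 ))
    done
    return 1
}
```

Calling `wait_for_health_ok 30 10` checks up to 30 times at 10-second intervals and returns non-zero if the cluster never reaches `HEALTH_OK`, in which case `ceph health detail` shows the remaining warnings.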

15. Mount CephFS

On a Ceph node:

CEPH_SECRET=$(ceph auth get-key client.admin)

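# /mnt/cephfs is an example mount point; any empty directory works
mkdir -p /mnt/cephfs

mount -t ceph 192.168.188.171:6789,192.168.188.172:6789,192.168.188.173:6789:/ /mnt/cephfs -o name=admin,secret=${CEPH_SECRET}

On a non-Ceph node: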

# Copy the files needed for mounting; ceph-node171 is used as the source here

mkdir -p /etc/ceph

scp ceph-node171:/etc/ceph/ceph.conf /etc/ceph/

scp ceph-node171:/etc/ceph/ceph.client.admin.keyring /etc/ceph/

CEPH_SECRET=$(ssh ceph-node171 ceph auth get-key client.admin)

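# /mnt/cephfs is an example mount point; any empty directory works
mkdir -p /mnt/cephfs

mount -t ceph 192.168.188.171:6789,192.168.188.172:6789,192.168.188.173:6789:/ /mnt/cephfs -o name=admin,secret=${CEPH_SECRET}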
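The long monitor list in the mount command is easy to mistype. Here is a minimal sketch that builds it from the monitor IPs and also shows the `secretfile=` mount option, which keeps the key out of the command line and shell history. The function name `build_mount_source`, the mount point `/mnt/cephfs`, and the path `/etc/ceph/admin.secret` are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join monitor IPs into the source string for mount -t ceph.
build_mount_source() {
    local port="$1"; shift
    local ip src=""
    for ip in "$@"; do
        # Add a comma only between entries
        src="${src:+${src},}${ip}:${port}"
    done
    echo "${src}:/"
}

build_mount_source 6789 192.168.188.171 192.168.188.172 192.168.188.173

# Possible usage with a key file instead of secret= (paths are assumptions):
#   ssh ceph-node171 ceph auth get-key client.admin > /etc/ceph/admin.secret
#   chmod 600 /etc/ceph/admin.secret
#   mount -t ceph "$(build_mount_source 6789 192.168.188.171 192.168.188.172 192.168.188.173)" \
#       /mnt/cephfs -o name=admin,secretfile=/etc/ceph/admin.secret
```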

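This completes the Ceph deployment. To enable the dashboard and similar features, look up the relevant instructions yourself. The scripts from step 5 through step 14, copied and concatenated into one file, can be run repeatedly.

Note: this only applies to a fresh Ceph deployment. If the cluster already holds data, back up the relevant data before running the scripts.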