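WarpDrive is a Python library for running many simulation environments in parallel on a GPU. The environments it supports are still few and fairly simple; at the moment the only simulation game available is tag.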

WarpDrive also provides a tutorial. The first part is available at:

http://colab.research.google.com/github/salesforce/warp-drive/blob/master/tutorials/tutorial-1-warp_drive_basics.ipynb

The tutorial code above has some problems, so I put together a modified version:

import numpy as np

from warp_drive.managers.data_manager import CUDADataManager
from warp_drive.managers.function_manager import (
    CUDAFunctionManager, CUDALogController, CUDASampler, CUDAEnvironmentReset
)
from warp_drive.utils.data_feed import DataFeed

source_code = """
// A function to demonstrate how to manipulate data on the GPU.
// This function increments each element of the random data array we pushed to the GPU before.
// Each index corresponding to (env_id, agent_id) in the array is incremented by "agent_id + env_id".
// Everything inside the if() block runs in parallel for each agent and environment.
//
extern "C"{
    __global__ void cuda_increment(
            float* data,
            int num_agents
    )
    {
        int env_id = blockIdx.x;
        int agent_id = threadIdx.x;
        if (agent_id < num_agents){
            int array_index = env_id * num_agents + agent_id;
            int increment = env_id + agent_id;
            data[array_index] += increment;
        }
    }
}
"""

from timeit import Timer

def push_random_data_and_increment_timer(
    num_runs=1,
    num_envs=2,
    num_agents=3,
    source_code=None
):
    assert source_code is not None

    def push_random_data(num_agents, num_envs):
        # Initialize the CUDA data manager
        cuda_data_manager = CUDADataManager(
            num_agents=num_agents,
            num_envs=num_envs,
            episode_length=100
        )
        # Create random data
        random_data = np.random.rand(num_envs, num_agents)
        # Push data from host to device
        data_feed = DataFeed()
        data_feed.add_data(
            name="random_data",
            data=random_data,
        )
        data_feed.add_data(
            name="num_agents",
            data=num_agents
        )
        cuda_data_manager.push_data_to_device(data_feed)
        return cuda_data_manager

    # Initialize the CUDA function manager
    def cuda_func_init():
        cuda_function_manager = CUDAFunctionManager(
            num_agents=num_agents,  # cuda_data_manager.meta_info("n_agents"),
            num_envs=num_envs  # cuda_data_manager.meta_info("n_envs")
        )
        # Load source code and initialize function
        cuda_function_manager.load_cuda_from_source_code(
            source_code,
            default_functions_included=False
        )
        cuda_function_manager.initialize_functions(["cuda_increment"])
        increment_function = cuda_function_manager._get_function("cuda_increment")
        return cuda_function_manager, increment_function

    def increment_data(cuda_data_manager, cuda_function_manager, increment_function):
        increment_function(
            cuda_data_manager.device_data("random_data"),
            cuda_data_manager.device_data("num_agents"),
            block=cuda_function_manager.block,
            grid=cuda_function_manager.grid
        )

    # set variable
    # cuda_data_manager = push_random_data(num_agents, num_envs)
    # cuda function init
    # cuda_function_manager, increment_function = cuda_func_init()
    # cuda function run
    # increment_data(cuda_data_manager, cuda_function_manager, increment_function)

    data_push_time = Timer(lambda: push_random_data(num_agents, num_envs)).timeit(number=num_runs)

    cuda_data_manager = push_random_data(num_agents, num_envs)
    cuda_function_manager, increment_function = cuda_func_init()

    program_run_time = Timer(lambda: increment_data(cuda_data_manager, cuda_function_manager, increment_function)).timeit(number=num_runs)

    print(cuda_data_manager.pull_data_from_device('random_data'))

    return {
        "data push times": data_push_time,
        "code run time": program_run_time
    }

num_runs = 1000
times = {}

for scenario in [
    (1, 1),
    (1, 100),
    (1, 1000),
    (100, 1000),
    (1000, 1000)
]:
    num_envs, num_agents = scenario
    times.update(
        {
            f"envs={num_envs}, agents={num_agents}":
                push_random_data_and_increment_timer(
                    num_runs,
                    num_envs,
                    num_agents,
                    source_code
                )
        }
    )

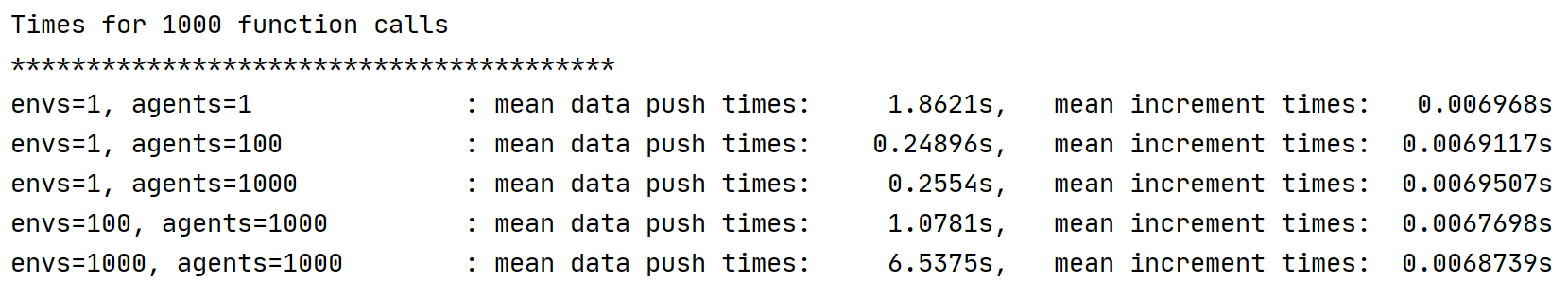

print(f"Times for {num_runs} function calls")
print("*"*40)

# Timer.timeit returns the total time for all num_runs calls, not a per-call mean.
for key, value in times.items():
    print(f"{key:30}: total data push time: {value['data push times']:10.5}s,\t total increment time: {value['code run time']:10.5}s")
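`Timer.timeit(number=n)` returns the total elapsed seconds for all `n` calls, so the figures printed by the script are totals over `num_runs` calls. A per-call mean needs one extra division. The sketch below illustrates this with a hypothetical stand-in workload (`work` is not part of WarpDrive and only exists to make the example runnable without a GPU):

```python
from timeit import Timer


def work():
    # Hypothetical stand-in for increment_data(...); any callable works here.
    sum(range(1000))


num_runs = 1000

# timeit returns the TOTAL time, in seconds, spent on all num_runs calls.
total_seconds = Timer(work).timeit(number=num_runs)

# Divide by the number of calls to get the mean time per call.
mean_seconds = total_seconds / num_runs

print(f"total: {total_seconds:.5f}s, mean per call: {mean_seconds:.3e}s")
```

Applied to the script above, this would mean dividing `data_push_time` and `program_run_time` by `num_runs` before reporting them as means.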

'''
print(cuda_data_manager._meta_info)
print(cuda_data_manager._host_data)
print(cuda_data_manager._device_data_pointer)
print(cuda_data_manager._scalar_data_list)
print(cuda_data_manager._reset_data_list)
print(cuda_data_manager._log_data_list)
print(cuda_data_manager._device_data_via_torch)
print(cuda_data_manager._shared_constants)
print(cuda_data_manager._shape)
print(cuda_data_manager._dtype)
print(tensor_on_device)
time.sleep(300)
'''

Runtime environment: GTX 1060 GPU.
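To check what the `cuda_increment` kernel in the script should produce, it can help to have a CPU reference. Each call adds `env_id + agent_id` to the element at `(env_id, agent_id)`, and because the timer invokes the kernel `num_runs` times on the same device array, the array printed at the end has been incremented `num_runs` times. The following is a minimal NumPy sketch of that arithmetic; `increment_reference` is a hypothetical helper written for this illustration, not part of WarpDrive:

```python
import numpy as np


def increment_reference(data, num_calls=1):
    """CPU equivalent of calling cuda_increment `num_calls` times.

    `data` has shape (num_envs, num_agents), matching the row-major layout
    the kernel assumes: array_index = env_id * num_agents + agent_id.
    """
    num_envs, num_agents = data.shape
    env_ids = np.arange(num_envs, dtype=np.float32)[:, None]      # column vector
    agent_ids = np.arange(num_agents, dtype=np.float32)[None, :]  # row vector
    # Broadcasting builds the (num_envs, num_agents) grid of env_id + agent_id.
    return data + num_calls * (env_ids + agent_ids)


rng = np.random.default_rng(0)
data = rng.random((2, 3), dtype=np.float32)

expected = increment_reference(data, num_calls=1000)
print(expected - data)  # the total increment applied to each element
```

To compare against the GPU result, the host copy of `random_data` would have to be kept around (the script discards it), and the comparison should use a tolerance such as `np.allclose`, since the GPU accumulates the increments one call at a time in single precision.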

WarpDrive on GitHub:

https://github.com/salesforce/warp-drive

Gitee address:

https://gitee.com/devilmaycry812839668/warp-drive